Lambert: Just when you need them the most….

By David F. Hendry, Director, Program in Economic Modeling, Institute for New Economic Thinking at the Oxford Martin School, Grayham E. Mizon, Professor of Economics and Fellow of Nuffield College, Oxford University. Originally published at VoxEU.

In most aspects of their lives humans must plan forwards. They take decisions today that affect their future in complex interactions with the decisions of others. When taking such decisions, the available information is only ever a subset of the universe of past and present information, as no individual or group of individuals can be aware of all the relevant information. Hence, views or expectations about the future, relevant for their decisions, use a partial information set, formally expressed as a conditional expectation given the available information.

Moreover, all such views are predicated on there being no unanticipated future changes in the environment pertinent to the decision. This is formally captured in the concept of ‘stationarity’. Without stationarity, good outcomes based on conditional expectations could not be achieved consistently. Fortunately, there are periods of stability when insights into the way that past events unfolded can assist in planning for the future.

The world, however, is far from completely stationary. Unanticipated events occur, and they cannot be dealt with using standard data-transformation techniques such as differencing, or by taking linear combinations, or ratios. In particular, ‘extrinsic unpredictability’ – unpredicted shifts of the distributions of economic variables at unanticipated times – is common. As we shall illustrate, extrinsic unpredictability has dramatic consequences for the standard macroeconomic forecasting models used by governments around the world – models known as ‘dynamic stochastic general equilibrium’ models – or DSGE models.

DSGE models

DSGE models play a prominent role in the suites of models used by many central banks (e.g. Bank of England 1999, Smets and Wouters 2003, and Burgess et al. 2013). The supposedly ‘structural’ Bank of England Quarterly Model (BEQM) broke down during the Financial Crisis, and has since been replaced by another system built along similar lines, where the “behaviour of the central organising model should be consistent with the theory underpinning policymakers’ views of the monetary transmission mechanism” (Burgess et al. 2013, p.6), a variant of the claimed “trade-off between ‘empirical coherence’ and ‘theoretical coherence’” in Pagan (2003).

Many of the theoretical equations in DSGE models take a form in which a variable today, say incomes (denoted as $y_t$), depends inter alia on its ‘expected future value’. (In formal terms, this is written as $E_t[y_{t+1}]$, where the ‘t’ after the ‘E’ indicates the date at which the expectation is formed, and the ‘t+1’ after the ‘y’ indicates the date of the variable). For example, $y_t$ may be the log-difference between a de-trended level and its steady-state value. Implicitly, such a formulation assumes some form of stationarity is achieved by de-trending.1

Unfortunately, in most economies, the underlying distributions can shift unexpectedly. This vitiates any assumption of stationarity. The consequences for DSGEs are profound. As we explain below, the mathematical basis of a DSGE model fails when distributions shift (Hendry and Mizon 2014). This would be like a fire station automatically burning down at every outbreak of a fire. Economic agents are affected by, and notice, such shifts. They consequently change their plans, and perhaps the way they form their expectations. When they do so, they violate the key assumptions on which DSGEs are built.

The key is the difference between intrinsic and extrinsic unpredictability. Intrinsic unpredictability is the standard economic randomness – a random draw from a known distribution. Extrinsic unpredictability is an ‘unknown unknown’ so that the conditional and unconditional probabilities of outcomes cannot be accurately calculated in advance.2

Extrinsic Unpredictability and Location Shifts

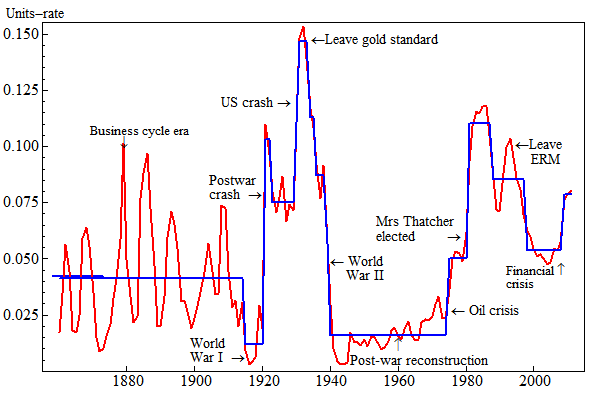

Extrinsic unpredictability derives from unanticipated shifts of the distributions of economic variables at unpredicted times. Of these, location shifts (changes in the means of distributions) have the most pernicious effects. The reason is that they lead to systematically biased expectations and forecast failure. Figure 1 records UK unemployment over 1860–2011, with some of the major historical shifts highlighted.

Figure 1 Location shifts over 1860–2011 in UK unemployment, with major historical events

Four main epochs can be easily discerned in Figure 1:

- A business-cycle era over 1860–1914

- World War I and the inter-war period to 1939 with much higher unemployment

- World War II and post-war reconstruction till 1979 with historically low levels

- A more turbulent period since, with much higher and more persistent unemployment levels

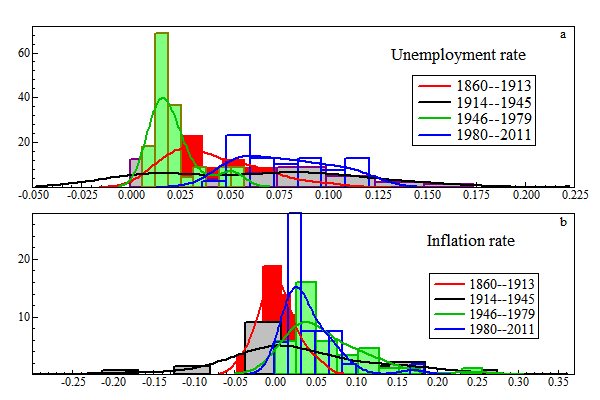

As Figure 2, panel (a) confirms for histograms and non-parametric densities, both the means and variances have shifted markedly across these epochs. Panel (b) shows distributional shifts in UK price inflation over the same time periods. Most macroeconomic variables have experienced abrupt shifts, of which the Financial Crisis and Great Recession are just the latest exemplars.

Figure 2 Density shifts over four epochs in UK unemployment and price inflation

Extrinsic unpredictability and economic analyses

Due to shifts in the underlying distributions, all expectations operators must be three-way time dated: one way for the time the expectation was formed, one for the time of the probability distribution being used, and the third for the information set being used. We write this sort of expectation as $E_{D_{\epsilon_t}}[\epsilon_{t+1} \mid I_{t-1}]$, where $\epsilon_{t+1}$ is the random variable we care about, $D_{\epsilon_t}(\cdot)$ is the distribution agents use when forming the expectation, and $I_{t-1}$ is the information set available when the expectation is formed. This more general formulation allows for a random variable being unpredictable in its mean or variance due to unanticipated shifts in its conditional distribution.

Conditional Expectations

The importance of three-way dating can be seen by looking at how one can fall into a trap by ignoring it. For example, conditional expectations are sometimes ‘proved’ to be unbiased by arguments like the following. Start with the assertion that next quarter’s income equals expected future income plus an error term whose value is not known until next quarter. By definition of a conditional expectation, the mean of the error must be zero. (Formally the expectation is denoted as $E[y_{t+1} \mid I_t]$, where $I_t$ is the information set available today.)

Econometric models of inflation – such as the new-Keynesian Phillips curve in Galí and Gertler (1999) – typically involve unknown expectations like $E[y_{t+1} \mid I_t]$. The common procedure is to replace them by the actual outcome $y_{t+1}$ – using the argument above to assert that the actual and expected can only differ by random shocks that have means of zero. The problem is that this deduction assumes that there has been no shift in the distribution of shocks. In short, the analysis suffers from the lack of a date on the expectations operator related to the distribution (Castle, Doornik, Hendry and Nymoen 2014).

The basic point is simple. We say an error term is intrinsically unpredictable if it is drawn from, for example, a normal distribution with mean $\mu_t$ and a known variance. If the mean of the distribution cannot be established in advance, then we say the error is also extrinsically unpredictable. In this case, the conditional expectation of the shock need not have mean zero for the outcome at $t+1$. The forecast is being made with the ‘wrong’ distribution – a distribution with mean $\mu_t$, when in fact the mean is $\mu_{t+1}$. Naturally, the conditional expectation formed at $t$ is not an unbiased predictor of the outcome at $t+1$.
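The bias from forecasting with the ‘wrong’ distribution can be illustrated with a small simulation. This is only a sketch under assumed values: the function name `mean_forecast_error`, the means 2.0 and 3.0, the unit variance and the sample size are all hypothetical choices for illustration, not taken from the article. The forecast is the date-t mean, while outcomes are drawn from a normal distribution whose mean may have shifted.

```python
import random
import statistics


def mean_forecast_error(mu_t, mu_next, sigma=1.0, n=100_000, seed=0):
    """Average error when forecasting with mean mu_t but outcomes have mean mu_next.

    All parameter values are illustrative assumptions, not estimates.
    """
    rng = random.Random(seed)
    forecast = mu_t  # conditional expectation formed at t under the date-t distribution
    # Outcomes at t+1 are drawn from the (possibly shifted) date-(t+1) distribution.
    errors = [rng.gauss(mu_next, sigma) - forecast for _ in range(n)]
    return statistics.fmean(errors)


# No location shift: only intrinsic unpredictability, so errors average out near zero.
no_shift = mean_forecast_error(mu_t=2.0, mu_next=2.0)

# Unanticipated location shift of +1: errors are systematically biased by about +1.
shift = mean_forecast_error(mu_t=2.0, mu_next=3.0)

print(f"mean error, no shift:        {no_shift:+.3f}")
print(f"mean error, location shift:  {shift:+.3f}")
```

In the first case the average error is close to zero; in the second it is close to the size of the shift, $\mu_{t+1} - \mu_t$, which is the systematic bias the text describes.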

Implications for DSGE models

It seems unlikely that economic agents are any more successful than professional economists in foreseeing when breaks will occur, or divining their properties from one or two observations after they have happened. That link with forecast failure has important implications for economic theories about agents’ expectations formation in a world with extrinsic unpredictability. General equilibrium theories rely heavily on ceteris paribus assumptions – especially the assumption that equilibria do not shift unexpectedly. The standard response to this is called the law of iterated expectations. Unfortunately, as we now show, the law of iterated expectations does not apply inter-temporally when the distributions on which the expectations are based change over time.

The Law of Iterated Expectations Facing Unanticipated Shifts

To explain the law of iterated expectations, consider a very simple example – flipping a coin. The conditional probability of getting a head tomorrow is 50%. The law of iterated expectations says that one’s current expectation of tomorrow’s probability is just tomorrow’s expectation, i.e. 50%. In short, nothing unusual happens when forming expectations of future expectations. The key step in proving the law is forming the joint distribution from the product of the conditional and marginal distributions, and then integrating to deliver the expectation.

The critical point is that none of these distributions is indexed by time. This implicitly requires them to be constant. The law of iterated expectations need not hold when the distributions shift. To return to the simple example, the expectation today of tomorrow’s probability of a head will not be 50% if the coin is changed from a fair coin to a trick coin that has, say, a 60% probability of a head.
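The two paragraphs above can be written out as a short worked sketch. The notation is assumed here for illustration and does not appear in the original: $f$ denotes densities, $h_{t+1}$ is an indicator equal to 1 if tomorrow's flip is a head, and $D_t$, $D_{t+1}$ are the fair and trick coin distributions.

```latex
% Constant distributions: the joint density is the product of the conditional
% and the marginal, and integrating delivers the law of iterated expectations.
\begin{align*}
E\big[\,E[y \mid x]\,\big]
  &= \int \left( \int y \, f(y \mid x) \, dy \right) f(x) \, dx \\
  &= \int\!\!\int y \, f(x, y) \, dy \, dx \;=\; E[y].
\end{align*}

% Coin example with a shift between t and t+1 (illustrative notation):
% D_t:     fair coin,  P(h_{t+1} = 1) = 0.5
% D_{t+1}: trick coin, P(h_{t+1} = 1) = 0.6
\begin{align*}
E_{D_t}[h_{t+1}]     &= 0.5, \\
E_{D_{t+1}}[h_{t+1}] &= 0.6, \\
E_{D_{t+1}}[h_{t+1}] - E_{D_t}[h_{t+1}] &= 0.6 - 0.5 = 0.1 \neq 0 .
\end{align*}
```

When $D_{t+1} = D_t$ the gap in the last line is zero and today's expectation matches tomorrow's; once the coin is swapped, the expectation formed under $D_t$ is off by the size of the location shift.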

In macroeconomics, there are two sources of updating the distribution.

- The first concerns conditional distributions where new information shifts the conditional expectation (i.e., $E_{y_t}[y_{t+1} \mid y_{t-1}]$ shifts to $E_{y_t}[y_{t+1} \mid y_t]$).

Much of the economics literature (e.g. Campbell and Shiller 1987) assumes that such shifts are intrinsically unpredictable since they depend upon the random innovation to information that becomes known only one period later.3

- The second occurs when the distribution used to form today’s expectation $E_{y_t}[\cdot]$ shifts before tomorrow’s expectation $E_{y_{t+1}}[\cdot]$ is formed.

The point is that the new distributional form has to be learned over time, and may have shifted again in the meantime.4 The means of the current and future distributions ($\mu_t$ and $\mu_{t+1}$) need to be estimated. This is a nearly intractable task for agents – or econometricians – when distributions are shifting.

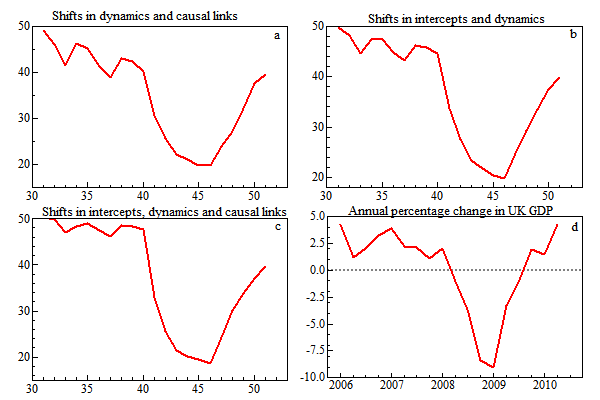

Using artificial data from a bivariate generating process where the parameters are known, panels a–c of Figure 3 show that essentially the same V-shape can be created by changing many different combinations of the parameters for the dynamics, the intercepts, and the causal links in the two equations; panel d shows their similarity to the annualized % change in UK GDP over the Great Recession.

For example, the intercept in the equation for the variable shown in panel a was unchanged, but was changed 10-fold in panel c. A macroeconomy can shift as a result of many different changes and, as in Figure 3, economic agents cannot tell which parameters shifted until long afterwards, even if another shift has not occurred in the meantime.

Figure 3 Near-identical location shifts despite changes in many different parameter combinations

The derivation of a martingale difference sequence from ‘no arbitrage’ in, for example, Jensen and Nielsen (1996) also explicitly requires no shifts in the underlying probability distributions. Once that is assumed, one can deduce the intrinsic unpredictability of equity price changes and hence market (informational) efficiency. Unanticipated shifts also imply unpredictability, but need not entail efficiency. Informational efficiency does not follow from unpredictability per se, when the source is extrinsic rather than intrinsic. Distributional shifts occur in financial markets, as illustrated by the changing market-implied probability distributions of the S&P500 in the Bank of England Financial Stability Report (June 2010).

In other arenas, ‘location shifts’ (i.e. shifts in the distribution’s mean) can play a positive role in clarifying both causality, as demonstrated in White and Kennedy (2009), and testing ‘super exogeneity’ before policy interventions (Hendry and Santos 2010). Also, White (2006) considers estimating the effects of natural experiments, many of which involve large location shifts. Thus, while more general theories of the behaviour of economic agents and their methods of expectations formation are required under extrinsic unpredictability, and forecasting becomes prone to failure, large shifts can also help reveal the linkages between variables.

Conclusions

Unanticipated changes in underlying probability distributions – so-called location shifts – have long been the source of forecast failure. Here, we have established their detrimental impact on economic analyses involving conditional expectations and inter-temporal derivations. As a consequence, dynamic stochastic general equilibrium models are inherently non-structural; their mathematical basis fails when substantive distributional shifts occur.

See original post for references

1 Burgess et al. (2013, p.A1) assert that this results in ‘detrended, stationary equations’, though no evidence is provided for stationarity. Technically, while the ‘t’ in the operator Et usually denotes the period when the expectation is formed, it also sometimes indicates the date of information available when the expectation was formed. The distribution over which the expectation is formed is implicitly timeless – the ‘t’ does not refer to the date of some time-varying underlying probability distribution.

2 This is akin to the concept in Knight (1921) of his ‘unmeasurable uncertainty’: unexpected things do happen.

3 To give a practical example, consider an unknown pupil who takes a series of maths tests. While the score on the first test is an unbiased predictor of future scores, the teacher learns about the pupil’s inherent ability with each test. The teacher’s expectations of future test performance will almost surely change in one direction or the other. After the first test, however, one doesn’t know whether expectations will rise or fall.

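4 Even if the distribution, denoted $f_{t+1}(y_{t+1} \mid y_t)$, became known one period later, the issue arises since $E_{y_{t+1}}[y_{t+1} \mid y_t] - E_{y_t}[y_{t+1} \mid y_{t-1}]$ equals $(E_{y_{t+1}}[y_{t+1} \mid y_t] - E_{y_{t+1}}[y_{t+1} \mid y_{t-1}]) + (E_{y_{t+1}}[y_{t+1} \mid y_{t-1}] - E_{y_t}[y_{t+1} \mid y_{t-1}])$, which equals $\nu_t + (\mu_{t+1} - \mu_t)$.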

New Economic Thinking ?!?

I know this may not seem daring enough to you, but since every central bank (along with lots of other official forecasters) relies on DSGE models, this is basically saying the emperor has no clothes. But it’s doing it in economese, which means the message may reach the intended audience, but to the layperson, it reads like typical impenetrable (by design) Serious Economist work.

But the emperor is also corrupt, even when they know what theories are right. For example, they still base their policy on the Phillips Curve even though they misuse it and misunderstand it [ intentionally? ]

So in other words, if they can’t be persuaded they’re wrong by reality, maybe theory can get through to them. But you still have the problem the article describes: the Expectation of Expectations of Economic Model Correctness at t+1 is a function of observations today and expectations of correctness at t+1. Since observations today are considered irrelevant to correctness both today and at t+1, and the expectation of future correctness is a probability distribution with a mean at 100% and standard deviation of zero, there’s not much wiggle room (no pun intended, get it: wiggle/variation/dispersion/variance/skew/etc. OK it’s not funny), so it’s hard to be optimistic. Oh well, at least the authors didn’t mention Paul Krugman once! They get 3 stars just for that.

Necessary for understanding, and assumed, so therefore not provided, is the formal definition of expectation or conditional expectation (the notation here is the ‘E’ in the article) as taken from probability theory. If you already have that then I expect (pun intended) that the rest of the article isn’t so bad, although requiring a bit of work.

It is inevitable that DSGE models don’t work in crashes.

How is it you can write a complete article about modelling the future and not even mention Chaos Theory or non-linear feedback? Non-linear feedback, chaos, most certainly modifies underlying probability distributions.

A crash is a chaotic event, a strange attractor or a catastrophic event. It is possible to predict such an event will occur, it is not possible to predict when, nor is it possible to predict the state of the system after the catastrophic event.

Not only is the economic math flawed, that is incomplete, but it is also trivial to a significant degree.

well, nobody’s perfect. at least they mentioned martingales and didn’t once mention Krugman. He’s the most talked about man on the planet. How did that happen? You’d think it would be somebody like Adele, even though she’s not a man. Why talk about Professor Krugman? I have no idea, that’s why I don’t. But Adele, that’s another issue entirely. Martingales are another thing somebody can legitimately talk about. You’d think they were birds of some kind, just going by the name on its own. All these guys really need to start over, frankly. Get out the coffee and the blackboard and say “OK, let’s pretend we’re going to make sense. What would we do?”

That was clear immediately after the bubble burst – because virtually all economists (the exceptions can be counted on, literally, one hand) either ignored or, worse, denied it. Even though, as Dean Baker repeatedly pointed out, it was plain as the nose on your face.

Baker never quite admitted it, but that meant the whole profession – no, that’s the wrong word: the whole field is invalid.

You don’t start over with the same guys. You start over with the 5 or 6 who got it right, and you can a large portion of the math, which I strongly suspect is fake.

One needs quite a lot of data to estimate whether a crash is a chaotic or stochastic event, as I recall: several orders of magnitude more observations at the relevant time interval than exist for macroeconomies, unless there are some new high-power/low-data tests out there, which there might well be. I think that’s the biggest reason that chaos modelling never took off, because there certainly was interest about 20 years ago.

A recent article on chaos theory in economics, which has a nice survey of the area:

http://aripd.org/journals/jeds/Vol_2_No_1_March_2014/3.pdf

Note that these authors’ empirical example is a daily exchange rate series from which they used about 7000 observations for testing. Also, these tests are only for the possibility that chaos exists in the series. Being able to make even a short-term forecast from a possibly chaotic series is something else again.

I’d assert they are all chaotic. Never stochastic, because of the feedback and non-linearity (Humans, greed and fear in the feedback loop)

Synoia, Thanks. +1,000 (Also, I love artistic depictions of chaos/fractals.)

Vulnerability to errors presented by unforeseeability or intentional disregard of material exogenous factors (i.e., “Assume them away!” or ideological views re fraud, criminality, etc.), selection of variables (correlation vs causation), number and weighting of variables, iterative compounding errors in multivariate analysis over time.

Modeling is driven in part by a very human desire for certainty and predictability, as well as to support policy biases. For example, I suspect the models B-squared used as Fed chairman as support for QE-ZIRP policy do not show the compounding magnitude of the imbalances that develop over time stemming from the policy, or maybe they do and there was an “I’ll be gone, you’ll be gone” attitude in play.

All this is not to say that economic modeling is without value over short-term time horizons. Just that the results need to be considered with a healthy degree of skepticism, and policies formed and implemented, and affairs conducted with prudent respect for “unforeseen” risks.

Technical question: does all the “math” actually add anything, or is it hand-waving?

Because it sure looked like decoration to me – but my math tolerance is a bit limited.

Haha, that is what Deirdre McCloskey argues, that in most economics papers, the critical part of the argument is actually in the narrative, and the mathed-up parts are trivial. But economists convince themselves that their use of math = rigor.

Classic problem, that is, difference between accuracy and precision.

I learnt about accuracy and precision at university, and never really grasped the full implication until it hit me in the face one day. We had 9 digit digital frequency meters (precision 1 part in 10 to the 9th) with an accuracy of 1 in 10 to the 5th.

The DSGE models are precise when chaos is not evident (and thus of little use except to keep management happy).

The models are never accurate. Nor are they precise over chaotic events.

One could run massive Monte Carlo simulations of chaotic events; however, how would one pick the correct answer from multitudes of results before the event?

Or, there is no way to calibrate the models.

A living example of this is US Foreign Policy. It’s full of non-linear events which yield strange results. Or it’s full of wishful thinking which proves false when reality (Putin) happens.

Better to use a dartboard. Less expensive, especially if someone else is buying the beer.

“Unanticipated changes in underlying probability distributions – so-called location shifts – have long been the source of forecast failure.”

In other words, we have no clue concerning anything.

No, physics, chemistry, and even much of biology are both precise and accurate. There are exceptions – cosmology, for instance, is mostly speculation inspired by the latest data, which often contradict the last batch of speculation.

Economics is another matter, both because it covers a chaotic system and also, even more important, because it isn’t science; it’s political ideology, lightly disguised – the real purpose of all the math. Plus a large dollop of wishful thinking.

Personally, I loved Econ 210. The basic market mechanism is just applied feedback theory. Tricky in practice, but valid. Then the corruption sets in…

Terrific responses by Synoia and Chauncey Gardiner. I second Chauncey Gardiner’s statement regarding the value of economic modeling over short-time horizons and think that means that in the short run, economic models can be both accurate and precise. This is largely due to the fact that most economists are running similar versions of the model and that leads to a self-fulfilling prophecy (until it doesn’t), at least when dealing with financial economic models.