By Lambert Strether of Corrente.

To start, I had wanted to give you the origin for the post title, which is a famous parable in marketing, but I can’t find an attested, authoritative source (not even at Language Log), so I’ll settle for the oldest example I can find, 2004’s Publishing Confidential: An Insider’s Guide to What it Really Takes to Land a Non-Fiction Book Deal, by Paul Brown, where two would-be authors have just focus-grouped their manuscript:

If you are writing a service book, and potential readers tell you the title is awful, they want more callouts and checklists, and they wouldn’t mind if the book were completely modular so they could concentrate on the stuff that they thought would help them and be able to skip everything else, then you might want to listen.

We did.

As [my co-author] told me at the end of the focus group, when we were scrambling to find anything positive that had come out of the experience: “If the dogs won’t eat the dog food, it is bad dog food.”

From there, the phrase seems to have migrated to the business school/venture capital nexus (2010), for obvious reasons, and thence, for really obvious reasons, to the world of political operatives and pundits (2012).

Now, self-driving cars are, so we are told, an inevitability, and — like Strong AI or the Paperless Office — the next big thing (though to be fair, there’s been some pushback to the relentless PR in recent weeks). It’s also worth noting that David Plouffe, Obama’s campaign manager in 2008, went to work for Uber doing public relations, that the Obama administration just issued “light touch” regulations for self-driving cars, and that any number of Democrat (and Republican) operatives are doubtless preparing to help Silicon Valley smooth away tiresome bureaucratic obstacles (and allocate any coming infrastructure monies). A more-or-less random selection of recent technological triumphalism:

- Uber Chooses Self-Driving Cars Now Forbes

- Lyft’s president says ‘majority’ of rides will be in self-driving cars by 2021 The Verge

- California Eyes Self-Driving Cars to Help Meet Emission Goal Bloomberg

- Self-driving cars are almost here, but are Canadians ready to take the wheel? Global News

- Bright Box uses realistic video games to train its self-driving car retrofit kit VentureBeat

- How Federal Policy Is Paving the Way for Driverless Cars Wharton School

- Self-Driving Cars Gain Powerful Ally: The Government New York Times

- Driverless highway from Vancouver to Seattle proposed CBC[1]

Fascinatingly, all this breathless coverage assumes that self-driving cars are a thing, as opposed to a thing that might or might not one day be. And in my Ahab-like pursuit of the bezzle, I’ve been doing a good deal of reading up, trying to figure out if the tech for “autonomous vehicles” is truly there, what the business models for selling self-driving cars might be (if indeed they are to be sold, as opposed to being rented), effects on political economy (income inequality, public works, insurance), incremental approaches (trucks on highways first), and social benefits (for example, lives saved). Those posts are coming soon, but not now. In this post, I want to focus on the question of whether consumers, in the marketplace, will accept self-driving cars at all. That is, will the dogs eat the dog food?

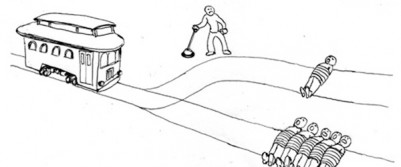

That’s where “The Trolley Problem” comes in. Here is the obligatory image (via):

And here’s an explanation (from 2015; for some reason, the “Trolley Problem” had a moment in 2016, and then went away, as so many problems do):

In a simple formulation of the Trolley Problem, we imagine a trolley hurtling toward a cluster of five people who are standing on the track and facing certain death. By throwing a switch, an observer can divert the trolley to a different track where one person is standing, currently out of harm’s way but certain to die because of the observer’s actions.

Should the observer throw the switch — cutting the death toll from five to one? That is the “utilitarian” argument, which many people find persuasive. The obvious problem is, it puts the observer in the position of playing God — deciding who lives and who dies.

So, would you throw the switch? Gruesome, eh?

Although I don’t drive, I can see that the Trolley Problem is a potential problem inherent to driving, as when a driver must decide whether to swerve to avoid hitting the five people, even if at the expense of the one, and that the algorithmic “driver” of an “autonomous” vehicle — were there to be such a thing — would have to make the same sort of “decision” (or be programmed to avoid making it, which is another decision).

Of course, one can — indeed, probably should — go meta on the entire Trolley Problem, because it abstracts away from social relations. For example, suppose the “one person” were the academic ethicist who gave you this Sophie’s Choice, who put you in this impossible dilemma. Would that make throwing the switch easier? Or suppose one of the “cluster of five people” was Baby Hitler. Would that make throwing the switch easier? Or suppose we put ObamaCare into the frame of the Trolley Problem: Aren’t the “observers” — a curiously neutral term, come to think of it — equivalent to the policy makers who send some people to HappyVille, and others to Pain City, randomly? Wouldn’t a good measure of the just society be that it minimizes Trolley Problems altogether, instead of tasking meritocrats with devising the best algorithmical “solution” for them?

But even if we accept that the hard case of the Trolley Problem can make good ethical algorithms, three issues remain. First, not all agree with us on the question of throwing the switch:

[I]n a survey of professional philosophers on the Trolley Problem, 68.2% agreed, saying that one should pull the lever. So maybe this ‘problem’ isn’t a problem at all and the answer is to simply do the Utilitarian thing that ‘greatest happiness to the greatest number.’

Trivially, if we are to remake the American transportation system, do we need more than a supermajority of professional philosophers to determine its ethical foundations?[2] Less trivially, what about the 31.8% who are going to have the switch thrown on them whether they like it or not?

Second, is the question of throwing the switch really one that we want to leave to software engineering firms?

[C]an you imagine a world in which say Google or Apple places a value on each of our lives, which could be used at any moment of time to turn a car into us to save others? Would you be okay with that?

Yes, I can, and especially when I use Apple or Google’s increasingly crapified software.

Third, the question of having software throw the switch has no precedent:

John Bonnefon, a psychological scientist working at France’s National Center for Scientific Research, told me there is no historical precedent that applies to the study of self-driving ethics. ‘It is the very first time that we may massively and daily engage with an object that is programmed to kill us in specific circumstances. Trains do not self-destruct, no more than planes or elevators do. We may be afraid of plane crashes, but we know at least that they are due to mistakes or ill intent. In other words, we are used to self-destruction being a bug, not a feature.’

At this point, we might remember social relations once again and reflect that — rather like TSA Pre✓®, with whose “$85 membership, you can speed through security” — there will doubtless be ways to buy yourself out of the operations of the Trolley Problem algorithm altogether. Plenty of historical precedent for that!

Fortunately, we don’t need to answer any of these questions to know that the Trolley Problem is real. Given that reality, are self-driving cars marketable? Will consumers buy them? A study from Jean-François Bonnefon, Azim Shariff, and Iyad Rahwan in Science (PDF), “The social dilemma of autonomous vehicles,” suggests that the answer is no. Here is the abstract:

Autonomous Vehicles (AVs) should reduce traffic accidents, but they will sometimes have to choose between two evils—for example, running over pedestrians or sacrificing itself and its passenger to save them. Defining the algorithms that will help AVs make these moral decisions is a formidable challenge. We found that participants to six MTurk studies approved of utilitarian AVs (that sacrifice their passengers for the greater good), and would like others to buy them; but they would themselves prefer to ride in AVs that protect their passengers at all costs. They would disapprove of enforcing utilitarian AVs, and would be less willing to buy such a regulated AV.

So it seems that the dogs won’t eat the dog food. Whatever choice the ethical algorithm controlling the self-driving car is to make, how do you market it? It’s hard to imagine what the advertising slogan would be — I’m imagining YouTubes for all these scenarios, here — for a car that puts your baby’s head through the windshield to save (say) five nuns. But the slogan for a car that flattens five nuns to save your baby isn’t that easy to imagine, either. And yet the advertising slogan for a car that isn’t programmed to protect your baby or the nuns also seems problematic. Perhaps there could be an emergency switch that lets the driver take back control. But then the vehicle isn’t really autonomous at all, is it?[3] Perhaps the real ethical problem was removing the driver’s autonomy in the first place…

Bonnefon et al. conclude:

Figuring out how to build ethical autonomous machines is one of the thorniest challenges in artificial intelligence today. As we [who?] are about to endow millions of vehicles with autonomy [There Is No Alternative], taking algorithmic morality seriously has never been more urgent. Our data-driven approach highlights how the field of experimental ethics can give us key insights into the moral, cultural and legal standards that people expect from autonomous driving algorithms. For the time being, there seems to be no easy way to design algorithms that would reconcile moral values and personal self-interest, let alone across different cultures with different moral attitudes to life-life tradeoffs [23]—but public opinion and social pressure may very well shift as this conversation progresses.

Ah. A “conversation.” I told you the Democrats were involved. It is bad dog food, then. Perhaps the precautionary principle should be deployed.

NOTES

[1] I would have thought it’s just a little early in the marketing cycle to propose appropriating public goods, but then again Always Be Closing.

[2] If the field were anything other than professional philosophy, I would want to know who funds the dominant 68.2%.

[3] Of course, the switch could be the automotive equivalent of a “placebo” button in an elevator.

I don’t think we’ll ever have autonomous cars driving on crowded city streets with pedestrians everywhere. A good start would be cars that are autonomous only when they can be virtually guaranteed not to encounter pedestrians. Interstate highways, for example, are restricted in most states to motor vehicles only and walking or riding a bike on them is illegal. Cars could be programmed to take control away from the driver only while on an interstate and return it to the driver when leaving the interstate. That might be all the loss of control people are willing to accept, and doing it would create huge savings in fuel and people’s lives.

Context switching for the driver would be a problem I would have thought.

If drivers drive less, their skills will probably atrophy/never develop.

If you want to save fuel and reduce accidents, public transport networks are a better bet. Most European cities have excellent and well-used ones.

Might be a bit far fetched for the american market, though.

+1 Odd how public and mass transit never seems to come up in these debates.

Yep….. when someone oohs and ahs over how the auto cars will be so efficient and miraculously flow together, I always note they are actually talking about the benefits of mass transit and the solutions are already here, carpooling, buses and trains.

To me the autopod discussion is just the oligarchs fighting over who is going to monetize and parasite the mundane activity of getting to work and getting the kids to school.

“…solutions are already here..”

Like dealing with global warming, some use it to sell more technology and get richer.

Pretty much. That and figuring out how to spend less money transporting goods by removing the human element, aka truck drivers. And as others point out as well, mass transit is largely less polluting and less of a contributor than ‘autopods’ would be. It is true that our mass transit has been neglected or outright sabotaged by our leaders out of greed or cowardice or both, and as a result is uncomfortable or inconvenient. But if we put as much effort into it as is going into the driverless idiocy we might see some improvement on that.

I remember an interview on george kenney’s late lamented podcast where that rarest of creatures, an american rail expert, said the real solution to transport problems was not to make people move about so much.

Pretty sensible, when you think about it.

+1

But how can you have Progress™ without motion? Said expert (the only one I know of is Alan from Big Easy) must have offended the Cult of Doing.

I argue, rather, that making people move about so much under their own endogenous power is a real solution to that and so many other problems They’d rather not see solved.

By instead having progress through virtual motion. There is absolutely no reason I can’t do my work at home. The reason my employer wants me there each day is to enhance collaboration.

I’m not sure my employer has really tried hard enough to solve the remote collaboration problem, though. I imagine if employers had to pay 10% more to reimburse employees for their commute time, these problems would get solved quickly.

For most employers, the issue is not collaboration but surveillance, and it isn’t working anyway because most people, at most jobs, are killing time and exaggerating their workload all day.

Apart from work, there’s also the issue of the highly concentrated infrastructure in the US – community grocers replaced by huge mega grocery stores, whole downtowns and disparate networks of small businesses replaced by WalMarts, giant consolidated schools, mega hospitals, etc. There are benefits to some or all of these things, but they dramatically increase total transport time, especially semi-long distance, car only transport time. Ka-ching

It’s rhetorically sabotaged by the “trolley problem” example; I suspect that’s why that meme is pushed.

Lambert’s “Wouldn’t a good measure of the just society be that it minimizes Trolley Problems altogether, instead of tasking meritocrats with devising the best algorithmical “solution” for them?” obviates the whole class of problems, which is what civilization used to be for, making systems that are more than the sum of their parts and benefit everyone in some previously impossible way.

And notice the ubiquitous illustration Lambert has lifted for us shows people tied to the tracks. Don’t tie people up and this whole problem goes away. It is possible to devise safe systems if that’s what you want, but they tend to be public goods and hard to extract wealth from without destroying their efficacy.

The pathology of power since the Thatcher/Reagan reaction has been something like this: market outcomes are conceptualized as moral judgements; psychopaths, who genetics tell us make up about 1% of our population, are attracted to market power and once they have it they use it to deploy their instrumental vision of their fellow man.

Lacking in empathy and moral imagination they imagine a world of people like them and set about, with their power, institutionalizing that vision in all the economic systems they influence. Once started, the perks of the system create a sociopathic environment that attracts ambitious people of all sorts but particularly those lacking a strong moral vision. As market power concentrates, the sociopathic rot migrates deeper and deeper into political institutions where the rot has been supported from the top down since Maggie and Ron.

Once the institutions and culture are in place, the powerful are institutionally/systemically incentivized to take the instrumentalist view embodied in the “trolley problem” now rounded out with the arrogance of unrestrained power (that’s how those people got tied up). This is how we came to be a society governed by those that must have wealth extraction even if they have to tie people up to get it and who can’t see the point of having people if they can’t extract wealth from them.

My sense as a software engineer is that autonomous cars are not trolleys, nor are they on rails. This problem and other similar ethical dilemmas probably occur with such rarity that for all practical purposes the engineer can safely just program the car to attempt to avoid collisions and leave it at that.

How often have you been forced to decide who to kill while driving? This just doesn’t happen. Also, when humans make that decision I’d wager money that most would steer towards the softest looking target to avoid getting hurt themselves. That is, the five pedestrians on the sidewalk rather than the car in front of them. My bet is an autonomous car would be more likely to get that one right.

At this point I have to inject a joke about automation in the cockpits of modern commercial aircraft (it’s sort of mass transit). Someone was speculating on how personnel should be deployed in the cockpit when autopilots and automated landing and takeoff systems are widely used. There would be one pilot and a dog. The job of the pilot is to feed the dog. The job of the dog is to bite the pilot if he tries to touch any of the controls.

We need more marathon-distance capable self-walking humans.

Of course, self-flying humans would be nice too.

Then, we can do away with cars, self-driving or otherwise.

If men were angels, no self-driving cars would be necessary. –James Madison

So if a toddler wanders onto an interstate highway, you’re OK with the car being programmed to run her over?

I think the point here is there’s no way to create “ethical software” good enough to replace human judgement that will apply to every possible driving scenario.

Ethics are a cultural matter (a “problem” the empire is vigorously working to solve). Driven to Kill: Why drivers in China intentionally kill the pedestrians they hit (Slate)

That said, I’m not beyond suspecting that biometrics and political “threat scores” vs. the status quo might quietly factor in to the switch question.

We used to mainly worry about drunk driving and fatigue at night. Now we have distracted driving due to cell phones as well as an increasing number of elderly drivers as our population ages. My spouse and I don’t ride our bikes on the streets around us due to the high incidence of inattentive or elderly drivers hitting people – we know (or knew) many more people hit by cars while riding bikes along the shoulder of a suburban/rural road than we know people injured or killed in car-car or car-pedestrian accidents.

So I think the “trolley dilemma” is a bit of a canard related to driverless cars as many human drivers don’t appear to be concerned about the ethics of driving when they should not be or not paying attention when they are driving. I think the bigger issue is more of a technical one related to having adequate sensors and processing for many conditions (including snow) as well as being programmed with sufficient aggressiveness so that pedestrians and human drivers won’t simply keep going through red lights knowing the driverless vehicle will stay stationary.

I think the interstate trucking industry and aging baby boomers are going to drive this forward over the next two decades (2021 is just Lyft talking their book). Baby boomers have watched their parents struggling with having a car, not having a car etc. in their old age – driverless cars are going to give the elderly unprecedented safe mobility. Millennials (like two of my 25 to 30 yr old kids who don’t own cars) will also play a major role in cities. I think my daughters would far prefer to jump into a driverless car than one with an unknown driver, even if an app is vouching for the driver.

We just bought new cars in 2015. We keep our cars for about a decade. It would not surprise me if our next car purchase is a driverless one as we would be in our mid-60s then. I think it will be a while before many suburban people give up car ownership as they won’t want to wait 20 minutes for a car to drive to their location or not be able to get one during commuting periods.

Internet security of the cars will be a huge issue and will probably be far more important to me than the “trolley problem”. So far, I have had no interest in my home being part of the “Internet of Things” as I don’t want some bored Russian teenager controlling my furnace in the dead of winter. Corporate America is going to have to demonstrate that they give a damn about Internet security. So far they have not.

Not to mention hacking by terrorists or just baddies. When this was pointed out in another discussion on self-driving cars, discussion came to a halt.

Maybe this is a more fun-filled continuation:

The Computer Voting Revolution Is Already Crappy, Buggy, and Obsolete

Bloomberg

https://www.bloomberg.com/features/2016-voting-technology/

I’m picturing Manhattan brought to a halt with X number of cars simultaneously bricked. (A brute force attack would halt everything; a more clever attack would brick cars at certain intersections, creating massive gridlock.)

I suppose it would be a simple calculation to figure out how long it would take to get Manhattan traffic moving again by starting from the number of tow trucks available off the island, times tow time times number of bricked cars to tow. My guess would be days.
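For what it’s worth, that back-of-the-envelope calculation can be sketched in a few lines. This is only an illustration: the function name and every input figure below are hypothetical, and it assumes each truck tows one car per round trip, so the truck count divides the workload rather than multiplying it.

```python
import math


def hours_to_clear(bricked_cars: int, tow_trucks: int, hours_per_tow: float) -> float:
    """Rough time to tow every bricked car, one car per truck per round trip."""
    if tow_trucks <= 0:
        raise ValueError("need at least one tow truck")
    # Each "round" clears at most one car per truck.
    rounds = math.ceil(bricked_cars / tow_trucks)
    return rounds * hours_per_tow


# Hypothetical inputs, chosen only to show the shape of the estimate.
estimate = hours_to_clear(bricked_cars=10_000, tow_trucks=500, hours_per_tow=2.0)
print(f"{estimate:.0f} hours, about {estimate / 24:.1f} days")
```

The sketch ignores gridlock slowing the tow trucks themselves, which would only push the number up.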

Until, of course, the tow trucks are automated.

The “human” problem is the biggy. If we program cars to not harm humans and we all know it, what’s to stop us from jaywalking whenever we want? If they are as good as they say we can trust that the cars will stop for us. And during the transition cars with drivers will routinely cut off or otherwise take advantage of driverless cars because they can. The solution is to program cars to run over every thousandth or so human. That way, pedestrians will have an incentive to not wander into the streets. Or we can give out tickets. Perhaps put facial recognition software in the cars that sends the identity to a database to issue a jaywalking fine. Either way, it is problematic.

Not sure why (other than practical safety) a human being engaged in a physical activity that is good for him and causes almost no environmental harm shouldn’t be the default. People ideally should be able to walk where they want, and the pilots of 4,000 pound weapons should be constrained.

> If they are as good as they say we can trust that the cars will stop for us.

I’m reminded of the Boston Dynamics robots being knocked over.

“Kids, go play in the traffic!” “OK, Mom!!!”

I don’t see truly autonomous vehicles operating in uncontrolled environments coming any time soon. So far as I can tell, nobody serious believes that; it’s a case of strong AI, and nobody delivered on that, either.

So what we have are incremental improvements in automotive computing, not necessarily a bad thing. But all the benefits of autonomous vehicles are framed using the strong case (especially saving lives). That’s the PR, and that’s the valuation, a.k.a. the Bezzle.

The trolley example is even too simplistic. Nearly 15 years ago on what was a routine commute for me on the NYS Thruway I was nearly involved in a major accident – I could have at one moment reached my hand out the window of my car and touched another less fortunate person’s car as it spun around on the highway. What had happened…? It was about 8:30 on a bright and sunny mid-March morning. It had snowed some that night and while there was probably 2” of wet snow just beyond the lane lines, the highway lanes were nothing more than damp so traffic was moving along at normal speed (70-75mph). I was traveling in a pocket of traffic that was occupying both lanes and the lead car in the left lane had not noticed that a state trooper was behind her. At some point the trooper gets upset enough that she isn’t allowing him to pass that he turns his lights on and pulls her over. By now they are down the road a tiny bit from the pocket of cars I am traveling in, which is still consuming both lanes. The trooper and the lady don’t fully pull off the road and are still basically occupying part of the right lane. At 75 miles per hour there is no time for people to come to a complete stop and the left lane is also occupied by vehicles. One of the vehicles in the right lane, right ahead of me, with limited options is an 18 wheeler. To this day I feel bad for the driver. He did his best to not run down the trooper, but he was forced to invade the left hand lane. I’m sure he was praying to god that those in the left hand lane would notice and also move left to yield him some room. It seemed to have worked but shortly after passing the stopped trooper and car one of the left hand lane cars – a yellow Dodge Neon – got unlucky and got caught under the truck’s trailer. The car was shot back out, hit the guard rail and bounced back into traffic, clipping cars and starting a chain reaction of accidents. It was miraculous that I was able to drive through this without being a casualty.

Had there been no WET snow on the shoulders I am sure the driver of the Dodge Neon would have yielded further room to the truck and this never would have happened, but what kind of AI is going to be able to make these kinds of “bets” and value judgments?

Agreed. One of the very first conclusions one must draw from exercises such as the Trolley Problem is that there is no right or wrong answer to the problem. This is what I have heard some programmers refer to as “fuzzy logic”, where 1 + 1 “might” be 2, or it “might not” be 2. It involves the principle of uncertainty as well as chaos.

Thus trying to give an AI hard rules on how to deal with questions like this is bound to lead to contradictions that the AI will not be able to resolve.

Code Name D, there is no uncertainty in fuzzy logic, which was born from fuzzy set theory, whose great revelation was that some object’s degree of membership in a set is not strictly a yes-or-no proposition, and that those degrees can be processed similarly to probabilities with useful results. It’s still deterministic symbol manipulation up to such point as a “crisp” on-off decision must be output, where a designer has some choices in how to reduce those degrees of membership down to some more quantized control effort.
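To make that concrete, here is a minimal sketch of the idea, not any production controller. The membership shape, the two rules, the distances, and all function names are assumptions chosen for illustration. The point is that the same input always yields the same degrees of membership, and a “crisp” decision is only produced at the very end.

```python
def ramp_down(x: float, full: float, zero: float) -> float:
    """Membership that is 1.0 at or below `full`, 0.0 at or above `zero`, linear between."""
    if x <= full:
        return 1.0
    if x >= zero:
        return 0.0
    return (zero - x) / (zero - full)


def near(distance_m: float) -> float:
    """Degree (0..1) to which an obstacle belongs to the fuzzy set 'near' (hypothetical shape)."""
    return ramp_down(distance_m, full=5.0, zero=50.0)


def far(distance_m: float) -> float:
    """Complement of 'near', so the two degrees always sum to 1."""
    return 1.0 - near(distance_m)


def brake_effort(distance_m: float) -> float:
    """Two rules, 'near -> brake hard (1.0)' and 'far -> brake lightly (0.1)',
    combined by a weighted average of the rule outputs."""
    mu_near, mu_far = near(distance_m), far(distance_m)
    return (mu_near * 1.0 + mu_far * 0.1) / (mu_near + mu_far)


def should_brake(distance_m: float, threshold: float = 0.5) -> bool:
    """The 'crisp' on-off output: quantize the continuous effort at the end."""
    return brake_effort(distance_m) >= threshold


for d in (5.0, 27.5, 50.0):
    print(d, round(near(d), 2), round(brake_effort(d), 2), should_brake(d))
```

The threshold in `should_brake` is one of the designer’s choices mentioned above; a different defuzzification scheme would change where the switch flips, not whether the process is deterministic.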

It seems to me that a computer, given the distances, sectors, speeds and headings of some number of people relative to its own, could attempt to evaluate the physics as quickly as it can acquire data, and at all times try to drive the predicted number of life forms in danger, as represented by animal-like objects, probably weighted by mass, and certainly including occupants, as close to zero as possible, which is roughly what most humans would probably attempt.
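A toy version of that selection step might look like the following. It is a sketch under heavy assumptions: the per-person harm probabilities would in reality have to come from the physics prediction described above, and the `Outcome` type, the maneuver names, and the numbers are all hypothetical. The mass weighting is left out for brevity.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Outcome:
    maneuver: str
    # Predicted probability of harm for each person involved, occupants included.
    at_risk: Tuple[float, ...]


def expected_harm(outcome: Outcome) -> float:
    """Expected number of people harmed if this maneuver is chosen."""
    return sum(outcome.at_risk)


def pick_maneuver(outcomes: Sequence[Outcome]) -> Outcome:
    """Choose the maneuver whose predicted harm is closest to zero."""
    return min(outcomes, key=expected_harm)


# Hypothetical predictions for a single instant.
candidates = [
    Outcome("hold course", (0.9, 0.9, 0.1)),
    Outcome("brake hard", (0.2, 0.2, 0.05)),
    Outcome("swerve left", (0.0, 0.0, 0.6)),
]
print(pick_maneuver(candidates).maneuver)
```

In a real control loop this choice would be re-evaluated every time new sensor data arrives, which is where the hard part lies: the probabilities, not the `min`.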

Better to skip AI entirely and just treat this as a control problem, like the rest of the car. But perhaps self-driving cars and maximizing life safety aren’t the ultimate goals, being born of DARPA and all.

Ya, but it doesn’t have to be perfect, it just has to be better. In this instance with autonomous vehicles the whole issue with the reckless police officer getting annoyed by inconsiderate driving would likely have been averted, and the truck and car would have known what the other was seeing and planning. That self driving vehicles will make difficult decisions wrong sometimes is unavoidable, but if we only let perfect cars drive will we only let perfect people drive?

Why do you assume people should submit to machines or algos? I value autonomy and I’ve never had an accident, even a minor fender denting. Why should I be required to surrender my control over a vehicle?

I don’t buy your underlying premise at all. Go read Cathy O’Neil’s Weapons of Math Destruction. Cathy is a Harvard PhD mathematician (pure math, much more daunting than applied math) and a data scientist. She describes long form how algos are not at all as reliable and beneficial as the hypesters would have you believe.

I have been hit now 5 times in the past 10 years, 4 of them rear-end collisions while I was stopped at a stop sign or red light. I read last year that auto accidents are the number one killer of Americans under 50 and hospitalize many millions a year. We can’t stop people from texting and driving; they are just too stupid. I can’t see any way that automated cars would be more dangerous than what we have now. I like driving but I would accept computer directed vehicles if they would save lives and make our streets safer.

So you are claiming to have never texted/iphone scrolled while driving? Driving remains extremely safe, crashes per trip are very rare and cars today are pretty highly engineered for safety. Bicycles, on the other hand…and what do you do at stop signs that makes people hit you so often? Hospitalize many millions a year? Citation please.

I have never texted or even attempted to read a text while driving. So Yes that is what I am saying. Even when I was a very busy contractor I did not answer my phone while driving. Just like everyone did before smartphones.

The phone/car manufacturers can easily disable a phone’s texting capabilities once entering a vehicle. But, they don’t.

No, guns are now a bigger source of fatalities than cars. Cutting the data to make cars look worse than other causes of death is spurious.

Go look at this table, which is way more granular.

http://www.worldlifeexpectancy.com/usa-cause-of-death-by-age-and-gender

However, its defect is that it does not seem to include gun deaths (they are presumably included in suicide, homicide, and “other injuries”).

According to the NSC, 38,000 people died in traffic accidents in 2015. The number I keep finding for gun related deaths is 33,000 from numerous sites. I did look at your link.

It is not that I have any trust in Silicon Valley or the Tech gods, but after 50-plus years I have no faith in people.

The lives saved argument applies to fully autonomous self-driving vehicles, not incremental improvements in automotive computing, or infrastructural improvements that could be justified on their own (like better lane markings and signage). But fully autonomous self-driving vehicles aren’t coming any time soon.

So I don’t buy the “lives saved” argument, and would also like very much to know how the calculations are done.

Not just “fully autonomous self driving cars” but “fully autonomous self driving cars” that function at six sigma reliability. Pray tell where do we have that in IT? Maybe the Unix kernel, but tell me where else.

It is intriguing how the proponents have gotten the media to accept that “fully autonomous self driving cars” means the perfect fantasy version, not the real life version.

“Meet George Jetson…”

NASA

Airplane guidance system

Public transport guidance systems

Ship guidance systems

Stock exchanges

Banking

…basically anywhere where it is important enough. 95% of programmers, like people, are buffoons. It doesn’t mean that we as a race can’t do great things.

Most and maybe all of the things on your list run on mainframes. We’ve written at length at how creaky that code is and how it is in fact starting to break all over the place in banking. Stock exchanges and banks also have procedures to capture outtrades and errors, so the results aren’t necessarily the results of the functioning of the code, but of the code + the systems around the core programs to flag probable errors. There are large back office departments at every major capital markets firm that handle trade processing errors, for instance. As in real humans doing the work.

There will also be problems with hardware defects. I have spent time chasing unusual defects that only manifest in a particular lot code of a microcontroller, under rare circumstances.

Then there are manufacturing defects, as the complexity of circuit assemblies can be large: high layer count PCBs (frequently more than 10 layers), very fine PCB geometries (0.004″ wide traces/spaces or smaller), and ball grid arrays with hundreds of solder connection points.

With thermal cycling in the environment, soldering and assembly defects can manifest that even perfect software cannot compensate for.

Here is a link to a problem from FPGA manufacturer Xilinx about a lot problem with an old FPGA family.

http://www.xilinx.com/support/documentation/customer_notices/xcn06018.pdf

Of course, even NASA has occasional problems:

From: http://mars.jpl.nasa.gov/msl/news/whatsnew/index.cfm?FuseAction=ShowNews&NewsID=1432

03.04.2013

Curiosity Rover’s Recovery on Track

PASADENA, Calif. – NASA’s Mars rover Curiosity has transitioned from precautionary “safe mode” to active status on the path of recovery from a memory glitch last week. Resumption of full operations is anticipated by next week.

Controllers switched the rover to a redundant onboard computer, the rover’s “B-side” computer, on Feb. 28 when the “A-side” computer that the rover had been using demonstrated symptoms of a corrupted memory location. The intentional side swap put the rover, as anticipated, into minimal-activity safe mode.

Curiosity exited safe mode on Saturday and resumed using its high-gain antenna on Sunday.

“We are making good progress in the recovery,” said Mars Science Laboratory Project Manager Richard Cook, of NASA’s Jet Propulsion Laboratory. “One path of progress is evaluating the A-side with intent to recover it as a backup. Also, we need to go through a series of steps with the B-side, such as informing the computer about the state of the rover — the position of the arm, the position of the mast, that kind of information.”

I believe the insurance industry will decide when/if self-driving cars happen.

But software is only part of the picture.

An argument could be made that more lives would be saved by better pay at work and policies that promote a less stressful life. Most accidents occur when people are rushing thru heavy traffic for various reasons or distracted.

Better public transportation is the answer, along with more rational city layouts.

Over time, I think this will be inevitable.

It’s the seeking of the ultimate binary decision. If it is wrong from a faulty input, the error compounds infinitely. The chances are high that we are all on a negative feedback loop from full on faith in the power of machine computing. Every decision can be reduced and further reduced to yes or no again and again – further into sub atomic somethings and nothings. There is no end, yet humans for some reason need to believe there is, therein lies the fault.

Reason cannot exist without the desire to call it such.

Adam1 you are using exactly the right sort of example. We are so incredibly far from software capable of making decisions like this correctly that there is no point in implementing any approximation now.

So where does that leave self driving cars? I think they’re still likely to be a good idea. Cases like the one you describe are, thankfully, not common. Far more common are driver errors that lead to accidents which self driving cars should be able to improve on (we shall see).

The trolley problem remains as an excellent case to discuss in an ethics class.

It’s all a moot point if the dogs won’t eat the dog food. And you can’t market a self-driving car without taking a position on the Trolley Problem, explicitly or implicitly.

Maintaining safe following distance precludes wrecks like these. Clearly, AI is more able and willing to do that than you and your fellow drivers are.

Snow? I don’t think they got to snow yet. Aren’t the cars going to kick to manual if they can’t see the lines on the road? Big wide white lines maybe? Herd mentality too, that will be a hard one if the AI car is supposed to learn from the drivers in some cities I’ve been in. Perhaps you live in one of those cities where a light turning to red means just a few more cars through the intersection?

Back in the late 1980’s I remember sitting through a lecture given by a transport engineer about a major EU project for self driving cars that he’d been involved in, the Prometheus project. It was for a slightly more limited type: cars and trucks that would essentially go into auto mode on major highways in order to increase road capacity. We were assured this would be the norm in 10 years or so. I’ve no idea what happened to the project, but it’s not a new idea – the technology for limited self-driving (i.e. sticking to simple lane manoeuvres on major highways) has been around for a long time. But then again, so has the technology for flying cars.

So I’m really interested in what Lambert’s conclusions on this will be. I’m naturally a sceptic, although what I’m most interested in about the current proposals is whether it’s all a con of some sort, or whether the promoters are well aware of the massive issues, but are trying to create a sort of juggernaut where it becomes ‘inevitable’ and so all the practical, legal and ethical issues can be steamrolled in their favour. I suspect the latter.

As for the trolley problem, I don’t actually think this is an unsurmountable problem. One existing model would be (to bring up a favourite NC topic) in single payer health care, and the so-called ‘death panel’ . Although rarely brought out in public, public bodies in Europe regularly set up ‘expert’ panels which generally adopt a mixture of utilitarianism and some subjective ethical judgements to the question of whether a particular drug or treatment should be recommended or favoured over another. This issue is raised all the time if, to take one example, a liver becomes available for transplant. Three patients are suitable – who gets it? The brilliant scientist who’s also an alcoholic? The mother of three children? The 12 year old with autism? A decision has to be made, but the reasoning behind it is often fudged.

I could well envisage a quasi public panel involving ‘experts’ or some type of citizen jury being given the job of assessing each algorithm to decide if it’s acceptable, and in the name of public interest keeping the precise decision secret. The public justification for secrecy could be that if, for example, the algorithm prioritised saving the life of a child who steps in front of a car, then it would result in lots of kids jumping in front of driverless cars just for the fun of it (anyone who thinks this won’t happen has never looked after a certain type of 10 year old boy).

I’m not suggesting, btw, that this is the right or appropriate approach – just to argue that the trolley problem is not, in itself, an insurmountable obstacle.

Self-driving cars: 0bamacare on wheels.

Let’s pass it to find out what’s in it. /sarc

Even the engineers don’t know what’s in it. Neural networks can be rather inscrutable in figuring out how they decide to do what they do.

This morning a train crashed into a station in Hoboken. There was much speculation about automated systems on the train. One person I saw interviewed talked about the fact that it took 10 to 15 seconds for the systems to kick in and the train was very heavy. I realize that the system will have already been clicked in, but this was an already tested already approved automated system that failed to stop something it was intended to stop.

Cars with drivers are often dangerous. But somehow I don’t think cars without them are going to solve this.

It appears that the train did NOT have positive train control and there wasn’t an automated system at all.

Libertarian Silicon Valley is naturally attracted to automation. But libertarians watch out when the first one hits a schoolbus.

The deployment of artificial “intelligence” is vastly premature.

…would like others to buy them; but they would themselves prefer to ride in AVs that protect their passengers at all costs.

To me, this gets to the root of the question. I don’t think I would buy a self driving car. I don’t need to, you see, because I am an above average driver. Right, just like you and everyone else. A real life bit of A Prairie Home Companion is happening on our streets. But all those idiots around me, oh, they need some schooling in how to drive in traffic. It’s maddening.

Maybe the policy should be that one does not buy a self driving car for oneself but for some one else.

On the topic of selling these cars… Car ads are all sizzle and no steak. They are all about how the car makes you feel when you drive it — specially luxury cars. And it’s been like that since at least the 1950s. I am curious what Madison Avenue will come up with. See the USA and your Facebook page?

A ‘reboot’ of Bullitt would be a rather dismal affair, as would one for The French Connection. The Fast and the Furious will be forgotten and furious!

Carnage in motoring journalism, trade shows etc

So many downsides, and I can’t even drive.

“We found that participants in six Amazon Mechanical Turk studies approved of utilitarian AVs (that is, AVs that sacrifice their passengers for the greater good) and would like others to buy them, but they would themselves prefer to ride in AVs that protect their passengers at all costs.”

This is a thing? Using Amazon Mechanical Turk to conduct a survey study? The full article is paywalled. Does anyone have access to its Methods section?

There is a link to a PDF of the full article in the post.

I get around by bicycle. And, let me tell you, driverless cars scare the hell out of me.

Like the ones where you glance over and see a person vacantly staring down into their smartphone?

Thank You!!!!!!

Can’t imagine a robot chucking a full can of beer at me. Or “rolling coal”

Wouldn’t it be cheaper and simpler to develop software that disabled smartphones in cars? It’s surely possible to sense when one is in a vehicle, after all.

I actually think the one area where driverless cars would help is with cyclists. A ‘never go within X feet of a bike’ algorithm is quite simple (assuming of course that the sensors can reliably identify a cyclist). I’ve had far, far too many close calls with cars on my bike to fear an autonomous system more than regular drivers. Just this week a woman in a very large Merc practically flattened me at a junction while staring intently at her mobile. In London a driver (another Merc, what is it about German cars and jerks?) once quite deliberately tried to push me against some pedestrian railings. My early morning rides to the gym are particularly torrid this time of year with dark mornings and drivers racing to get to work before the traffic builds up. All things which presumably would not be an issue with driverless cars.

In driver-less cars who is liable in an accident?

Historically the driver, then the owner, and finally the manufacturer have liability, roughly in that order.

Drivers and owners can, and mostly do, buy insurance.

Will the AI providers for cars buy insurance for all their so-equipped cars? Or is the owner required to insure the driver (the software), which would be an unprecedented transfer of liability from the software producers to its licensed users, the car owners?

In an accident, who is the neutral 3rd party who can examine the logs from the cars? I cannot see the software writers as impartial, the owners do not have the skills, and others on the roads, pedestrians, cyclists, and non automated cars have rights.

Litigation futures appear to abound. Law is not good law until tested in court.

The CEO of the AV company needs to be accountable (sent to prison or get the death penalty). Monetary penalties are not enough.

CEOs are never responsible. The Law is for the Takers, not the Galts.

I think that one key task for the Democrats now migrating to Silicon Valley to feed from this particular trough will be to make sure that the ethical algorithm designers and developers can’t be held liable. (Under neoliberalism, the rules never apply to the people who write the rules.)

The investment to make driverless cars a reality is well beyond the vehicles as it will require a massive infrastructure investment.

Don’t see that happening without a huge reallocation of resources seamlessly redirected to the usual suspect Pentagon contractors as a “homeland security” meme. Even then, I don’t see how the maintenance of such infrastructure would be sustainable.

Since we do like the concept of The Overton Window here, I do see the long play being the desensitization of ppl to the concept of “autonomous vehicles” applied to over the road trucks in dedicated highway lanes. I see that as a manageable engineering challenge.

Autonomous vehicles on arterial streets? not so much.. Think of NYC, Boston or Chicago, now add weather. It’s a laughable notion akin to Elon Musk’s habitation of Mars voodoo. Some serious physics problem at the conceptual starting gate.

Isn’t that what we have been pushing for – for years? Massive infrastructure spending? I guess to get it done simply means waiting for the neo-liberal stars to align just right.

This is one of the things that drives me absolutely crazy..

In Chicago there WERE electric trolley cars and buses.. all that infrastructure was ripped out

Presently we have 4 diesel-electric commuter lines that all fan out into suburbia, parts west, southwest and northwest. That could all be electrified and connected with circulators at the ends.

> Isn’t that what we have been pushing for – for years? Massive infrastructure spending

And it would be nice if it were done right… And I certainly don’t trust a pack of Silicon Valley glibertarians to do that.

For example, drunk driving disproportionately occurs in rural states, which are also disproportionately poor. So, the “lives saved” argument very probably depends on infrastructural improvements in those states: Lane markers, resurfacing, signage, yadda yadda yadda, and not just on the freeways, but everywhere. How likely is that to happen, given that it’s Silicon Valley and the Acela corridor that’s driving this? Like Uber, and like not investing in public transportation, a lot of the motivation is making sure that 10%-ers remain segregated from smelly proles.

Autonomous cars are like Ronald Reagan’s Star Wars proposal, mostly dreams, which suggests to me that there must be lots of government grant money planned to be handed out for academic research and product development.

On the other hand all the business/investor hype is much like the push for 3-D printing, trying to incite spending and investment just for the sake of GDP.

All I can see in the far future are a bunch of road-based F-35s. Ever more complicated and expensive technology in order to solve small problems caused by previous iterations of the same technology.

Silicon Valley Heaven.

Should the observer throw the switch — cutting the death toll from five to one? That is the “utilitarian” argument, which many people find persuasive. The obvious problem is, it puts the observer in the position of playing God — deciding who lives and who dies.

===========================================

I consider myself super rational – yet I almost went careening off a cliff with my mother in the car to avoid hitting a squirrel. And of course afterwards I thought that squirrel certainly wasn’t worth risking my life for (of course, I don’t know if it was the squirrel destined to save the earth) but my autonomic response was don’t hit that squirrel!!!

The question isn’t about how many people must die to save some other people – the question is how many people will die to save dogs, cats, and any other critter that wanders upon the road ways…

Well the squirrel certainly agreed with your decision. And what god made humans more important than squirrels? Ethics are indeed relative, the closer the relationship, the more important. Personally I would take many dogs etc over certain people.

This, “Perhaps the real ethical problem was removing the driver’s autonomy in the first place…”

Ironically, “trolley cars” ( light rail ) are a real and technically mature solution.

On the “trolley car problem”: in lieu of primum nil nocere (“Do no harm”), instead “do the least harm”.

So Lambert, let me raise the game.

So what if at the last second, it is revealed one of the five was Hitler? Ah these nettlesome theoretical posits….

Baby Hitler is in there.

I don’t think it’s a soluble problem. Those are the kinds of problems that should be left to humans, in all their messy (but adaptive) imperfection.

There is plenty of evidence that removing road signage and markings (as is common in the Netherlands and Belgium) actually increases traffic safety as it forces drivers to be more aware of their driving. In this sense, increased autonomy for drivers is a good thing.

Yet somehow people have no trouble getting on “planes that practically fly themselves”… The pilot just pushes a button and it does the rest!

Yes, the scales of time and space are somewhat different, but the ethics regarding the decision making algorithms are very similar, and one sees very little protest against the concept of an autopilot.

The well-known takeoffs and landings are when pilots earn their bread.

Even so lest we forget—a certain Air France flight out of Brazil years ago had the misfortune of an emergency at cruising altitude. Alas, the captain made the wrong choice of two conflicting instruments to trust.

It was the Co-pilot, the Captain was napping. The inquiry concluded that lack of actual flying experience whilst cruising, due to the high level of automation, was a major contributor to his failure to distinguish between the conflicting instrument read outs he was given, causing him to fly into the sea.

The Captain turned up in the cockpit too late.

Now imagine the carnage when people, unused to driving, are suddenly confronted with a machine that stops functioning at speeds where the stopping distance requires reflexes faster than biologically possible.

A colleague of mine died on that flight, tragically he changed from his scheduled flight to get home sooner.

I stand corrected. Thank you.

There were in fact two co-pilots and captain on board.

from wiki:

https://en.wikipedia.org/wiki/Air_France_Flight_447

25 years ago there were 2 or 3 airline crashes per year. Today there are 2 or 3 crashes per decade. The main changes have been in cockpit automation, ground radar, improved weather avoidance, mechanical reliability and crew training techniques.

The attitude I hear expressed in this forum is that machines can fail but people in control do better. That is just wrong where it is feasible to let a computer take over. People are the most fallible part of most things that go.

1) I don’t think the complexity of air transport is anywhere near the complexity of automotive transport.

2) As you point out, air transport has been incrementally improved, as what I’ve been calling automotive computing. That’s not at all the same as fully autonomous transport, whether by air or land.

3) If there were fully autonomous air transport, you can bet that the extremely wealthy would avoid it, in favor of private jets piloted by humans.

The quality of the pilots is also way better in air transportation than ground transportation.

The extremely wealthy are frequently an eccentric bunch. I don’t see the point of looking to them for much except a charitable donation.

I love this blog, but Lambert you are really not qualified to assess computers/software/electronics at anything approaching the level of expertise you bring to questions about economics. Here, the comment “I don’t think the complexity of air transport is anywhere near the complexity of automotive transport.” would be laughable if it didn’t evince such a profound lack of understanding.

Sorry, Lambert is right on this one. He’s discussing the complexity of inputs and the environment. This has nothing to do with “computers/software/electronics” but the design conditions.

Planes, which take off and fly routes that have to be pre-approved by the FAA (NORAD will scramble a jet if even a private plane goes off route), have vastly fewer degrees of freedom and unexpected events than driving a car (airplanes can be downed by birds on takeoff or landing, but how active a risk is that versus having children, animals, or pedestrians wander into your path, or being hit by another car?). That’s one of the big reasons travel by car is the most dangerous thing people do on a regular basis, by far.

It’s worth pointing out that while the planes themselves are largely automated, air traffic control is still very much a hands on human activity. I believe there is very little automation involved in it.

In commercial aviation, the ‘big decisions’ are largely made by air traffic control, and in terms of emergency manoeuvres, there are mostly set rules all pilots are trained to follow.

Far fewer things to hit 30,000 feet in the air. If something does go wrong there is also more time to correct it.

Which is why I clearly and explicitly stated that the scales of time and space are different. The ethical questions and how to resolve them are however far more similar. A good example of this is the logic in aircraft anti collision systems, which have never failed due to technical flaws in the implementation, only due to operator error.

This whole discussion is misguided. Why are we assuming vehicles are going too fast to respond safely to hazards, and why are we OK with vehicles moving too fast through their environment to safely respond to hazards? These things have more advanced sensory input than humans, are more capable of processing all that input and making decisions based on that input, and can more quickly and with more surgical precision manipulate the systems of the vehicle than a human. AV’s should complement Vision Zero, not shrug it off.

I suggest you read up on where the technology is. I’ve seen this again and again how the hype regularly exceeds reality with NewTech, not just reality in the field, but what is actually possible to be achieved. And whiz bang action movies with lots of tech razzle dazzle add to the exaggerated faith in what is viable.

These systems can’t even handle basic stuff like rain and snow. And how do you want it to respond to small animals running in front of it? I guarantee these cars will be programmed to run over anything smaller than a small dog.

It is inarguable that a computer that is literally integrated with the systems of a car will be able to manipulate these systems with greater precision and in a far shorter time than a human manipulating steering wheels and pedals with their feet and hands. These types of systems already exist in mainstream consumer vehicles (traction control, ABS, etc.). Having better than two cameras positioned within a few inches of each other high up inside the cabin of the vehicle will provide better visual input than a human is equipped with.

Other than that, slow down until you can safely respond to any hazard you can detect. That part, although often disregarded by human drivers, is the same for AVs or humans.
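To put rough numbers on "slow down until you can safely respond," here is a back-of-the-envelope sketch. It assumes a flat road and constant braking deceleration; the function names and every figure in the usage note are illustrative assumptions, not measurements from any real vehicle or sensor.

```python
import math

def stopping_distance(speed_mps, reaction_time_s, decel_mps2):
    """Distance covered while reacting, plus distance covered while braking."""
    return speed_mps * reaction_time_s + speed_mps ** 2 / (2 * decel_mps2)

def max_safe_speed(detection_range_m, reaction_time_s, decel_mps2):
    """Highest speed (m/s) at which the vehicle can still stop within
    detection_range_m. Solves v*t + v**2 / (2*a) = d for v."""
    a, t, d = decel_mps2, reaction_time_s, detection_range_m
    return -a * t + math.sqrt((a * t) ** 2 + 2 * a * d)
```

For example, `max_safe_speed(50, 0.5, 7)` gives the speed at which a hazard first detected 50 m ahead can just be avoided, assuming half a second of latency and 7 m/s² of braking. If rain, snow or darkness shrinks the detection range, the safe speed shrinks with it, for a human or a computer alike.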

Having better than two cameras positioned within a few inches of each other high up inside the cabin of the vehicle will provide better visual input than a human is equipped with.

You are assuming that the image data can be accurately and rapidly processed to a greater degree of accuracy than the human visual cortex achieves. Good luck with that.

Only a free association—it was John von Neumann who wondered if machine visual hardware complexity might not be any simpler than the human visual cortex itself to perform as well. (or words to that effect).

What you are missing is that it isn’t the computer that is reacting to a hazard, it is the software running on that computer. And that software is written by humans that are just as fallible as the ones that are supposedly to be replaced. But they are actually less fallible, because the reactions have to be thought about and programmed before the actual hazard is encountered.

Cars aren’t conscious, the most cutting edge AI isn’t anywhere near it. Computers don’t know how to automatically react to a given situation, they still have to rely on a human to program them, and this will be true for the foreseeable future. And if you think any programmer out there can think up foolproof ways to handle any situation a car might encounter then you haven’t thought this situation through enough.

No, that’s nonsense. Computers can react to only inputs and scenarios they have been programmed to handle. The complexity of what can happen in a car in a dynamic situation is vastly too complex. The scenarios become insanely bushy and the decision rules start contradicting each other. Computers are faster ONLY in identified, known scenarios. Humans can handle novel scenarios.

Separately, it’s astonishing that people who use computers and see how unreliable and buggy they are are willing to put their lives at risk (or worse, advocate that others do the same) out of blind faith in the Technology Gods.

You’re confusing improved automotive computing with self-driving cars.

I’ve wondered what the near-term effects of rapidly deploying “autonomous-lite” tech like “self-parking” vehicles and “lane detecting” cars are going to be in terms of liability and legal actions. It seems to me there have already been situations where a human driver thought they were in control and the vehicle contradicted them or sounded an alarm, and the driver overreacted or overcompensated, or else simply assumed that the vehicle would take care of it by itself. This goes way beyond the recent Tesla tragedies, I’m sure.

How do you respond to small animals running out? What if we add an oncoming truck in the other lane? At that point are you making instinctual decisions, or ethical ones?

Insurance costs are based on actuarial experience. As more and more driverless cars hit the road, there will be more experience on which to base insurance costs. If the driverless cars are better than cars with drivers, then eventually drivers won’t be able to afford the insurance necessary to operate a car. Google and Apple are rich enough to self-insure. You are not.

The problem with the trolley example lies in the word “hurtling”. What are you really supposed to do when the driving situation gets dicey? Slow down, goddammit. Stop if necessary. Driverless cars will not be driving at 75 mph unless they’re quite certain it’s okay … as in all the cars talking to each other and to the cars that are half a mile ahead so have already scanned the road and can report back to the cars behind them that the road is clear. That trooper? He won’t even be on the road pulling people over for being dick-head drivers because there won’t be any dick-head drivers. And if he is on the road, he will be able to STOP ALL THE CARS – safely – with the flick of a switch. Driverless cars will be slower when the situation calls for it and will stop when the situation calls for it. No human decision required.

Motorcycle riders and bicycle riders (like me) can barely wait until driverless cars are the norm. I can barely wait until I no longer have to wonder if some car is actually going to stop at a stop sign because the pseudo-AI driving the car will unquestionably stop, every time.

> he will be able to STOP ALL THE CARS

He, or any hacker….

I get a kick out of you defending self driving cars then claiming you won’t use one, and also that tech that has yet to be fully created will save your life. Also, I get a kick out of how you figure we will be forced into self driving cars because Apple and Google with all their “offshore” cash hoards will make it so we have no choice but to use their product because we’re not rich enough to compete. I wonder how many people you drive with because quite a few think they need to be in front of everyone else in spite of that being impossible. The egalitarian future you envision is being born out of a non egalitarian society, how do you think that imposition is going to go over on the type A crowd? I get a kick out of how all the self driving car advocates claim it’s inevitable and I need to get in line.

oops, reply to MRLost

Saw the aftermath of a pedestrian hit by a car last night. He was ambulatory but roughed up, bleeding and limping. I didn’t see the accident but I was there just after he was hit. I see these guys all the time dart into traffic, crossing against the light, wearing dark clothes at night. It was a woman who hit him, I am sure she did not see him. A driverless car in the same scenario wouldn’t have stopped in time either.

With lidar it might have. There is no reason to assume AVs need illumination from the sun to see.

Two things. I am cynical enough to figure AV’s are about saving the owners a few bucks in shipping costs, kicking labor in the scrotes and if a few proles die along the way, bonus points.

WRT the Trolley Problem, are we not already doing a variation of this with vaccines? A relatively few people will die from an allergic reaction, but the benefit to the herd is so substantial, we absorb that cost, noted ethicist Jenny McCarthy to the contrary.

The entire discussion is off-base.

Cars are (in all ways) unsustainable as a mode of transport. What cannot continue, will not continue – and the timeframe to get this to a v1.0 release is longer than the timeline at which the car fails (even given the demands to have cake and eat it that continually drown out rational, informed voices..)

This is a topic for a separate post. It seems to me that Silicon Valley, generally, assumes infinitely increasing computer capacity. That’s surely not a good assumption to make. Facebook’s data center in the arctic, for example…

Self driving cars, whether powered by gas or electricity, will be a blessing for the very elderly whose driver’s licenses are taken away for poor vision or health reasons, and for those who lose their licenses for other reasons, temporarily or permanently.

I for one, look for it, when I will be unable to drive for poor vision etc! I welcome any viable and significant technology which will preserve my independence!

You might consider public transportation or a taxi.

The whole argument is mostly nonsensical! Autonomous cars are just a Fantasy for ignorant yuppie-nerds and their rich patrons looking for the next big thing- coming right up – oh yeah! Fantasy!

Good public transit is what we need not self driving gas buggies!

What about self-driving chairs? If they can go to a restaurant dining room, why not down the street to the grocery store? Or even the liquor store?

You can get around without any effort at all and if you crash, it’s your bacon in the pan.

Is everybody OK with self-driving chairs? Let Google put wheels and a motor on an easy chair and use a remote to steer and roll around. Or maybe the Apple iChair. That would be pretty funny. The iChair. They need some creativity at Apple. There you go!

From high chair to iChair in one lifetime.

*bangs spoon on iChair tray*

LOL!

American anti-gravity. Is this a thing yet? http://www.americanantigravity.com/

Self driving chairs! Time to break out WALL-E again!

I guess on the plus side, a self-driving chair likely won’t weigh more than a ton, or travel at 70 mph. Let’s get to work on road infra around that!

For “more than a ton” we can design self-driving beds and couches and so forth.

Can’t intimidate other people with the iChair. Maybe a mod to (using the same comment twice in the same thread) blow coal as you roll down the street. Or maybe this?

https://www.youtube.com/watch?v=FTMhdL_dCEk

Hate standing in line? Japan now has self-driving chairs Phys.org. I believe I linked to a version of this story recently.

However, I doubt very much you can take your chair out for a spin on the open road….

“AV will have fewer accidents than human drivers.”

This assumption needs to be challenged because not all human drivers are equal. If you are comparing against your average Cletus and Claudia, this is a low bar indeed.

Part of the problem is that so little energy is focused on properly training drivers. In most states, obtaining a driver’s license is a mere formality. Being more accretive with driver’s education, setting higher standards on driving tests and renewals, and strictly enforcing the rules of the road, basically washing out those who have no business being behind the wheel, would do a great deal to improve driver safety.

Yeah, but that would mean more government regulation which is bad.

* * *

However, I should dig out a study on licensing policy and accidents. I wonder if the numbers vary, globally, as wildly as health care costs, or prisoner populations, do, two cases where America is truly exceptional.

It is interesting that a standard is being set for automation that we don’t apply to people. The tone of the discussion suggests that many think the solution to the trolley problem is obvious, but in real time a significant number of people will simply panic and either fail to make a decision or make a bad one. And, as the philosophers show, the rational conclusions that people reach can naturally vary in even slightly complex situations.

We’re not there yet, and may not be soon, but at some point automated driving systems will reach the point where they save more lives through prevention of simple accidents than are at risk from (rarer) complex situations such as the trolley problem. At that point it makes sense (from a utilitarian perspective) to implement them widely as they will save lives. Maybe marketing will really become the issue then, or perhaps this is a more sophisticated version of the trolley problem. Either way it isn’t clear to me that we need to solve the trolley problem, though many clearly want to.

Tangent: Wikipedia has an article on “Eating your own dog food” which discusses (speculates on) the origins of the term. The first place I remember seeing it in print was in Showstopper! by G. Pascal Zachary from 1994, referring to the event at Microsoft in February 1991 referenced in the Wikipedia article.

Different cliche entirely!

Speaking as someone with a background in AI, I see two misconceptions.

The first is that good AI always takes longer than everyone thinks. By this I mean the first 80% of the solution comes much faster than everyone predicts and the last 20% of the solution takes MUCH longer.

Second, people already delegate “trolley” type decisions to third parties when riding in a taxi or a bus. I expect a user’s profile will allow for some level of customization. But just like everything in civil life, as the flaws in various decision-making schemes are discovered and weighed by the populace, they will evolve or people won’t use them and insurers won’t insure them.

I have no doubt that they will slowly become an everyday occurrence in our society. A bigger challenge in the long run will be for those who want to continue driving themselves. Driving yourself in the future will be like using cash today. All kinds of extra scrutiny will fall on you if you drive long distances without assistance.

I guess we’ll be ditching the old “land of the free and the home of the brave” slogan. The lure of the open road is also apparently headed for the dustbin. Of course, whether or not you have doubts doesn’t mean much regarding the boundless future. Your taxi/bus analogy fails, as the drivers of the bus and taxi are humans who are, in the present day, making choices that your AI can’t, and as you astutely point out, the last leg will be hard if not impossible; we don’t know yet. What about motorcycles? Dustbin? You’re living in a fantasy world. You assume that everyone wants the tech-centric world in which you get your paycheck. The point of the post is that, sure, technoids can make a self-driving car, but will anyone other than those who plan to make a lot of money from them drive them? Do you plan to have the gov’t force people into self-driving cars à la Obamacare? For the gov’t to build the infrastructure for your own little toy? What about when you get on the driverless bus and a crazy is on there and there’s no driver to intervene? It sounds like a truly horrible world to me. Personally, I have no doubt that robots and self-driving cars will hit the wall due to a lack of resources to make and power them, but also because people like to do things. Also mentioned in the post is the crass assumption that richer people can buy more safety and the cars will be programmed to recognize the value of the various beings and kill the poorest one. Do you have thoughts on this?

Concepts like “the open road” are advertising fantasies for most metropolitan people most of the time. “Ah, the joy of the open road as I crawl along the monster sign-laden post-industrial hellscape of the Nimitz Freeway in Scenic San Leandro.”

No way will the user profile allow for customization. That would be a smoking gun for liability purposes: “Ladies and gentlemen of the jury, he had his driver ‘bot set for ‘kill everybody but save me at all costs!’”

All moral choices will be obfuscated by the software so nobody knows what is actually happening, just like all legal choices are obfuscated by software (or hidden in the unread user agreement).

> like everything in civil life

I think it’s the integration of the algorithm into civil life that’s the issue. If I don’t know what the algorithm is, and I can’t affect it in any way, the algorithm* isn’t really part of civil life at all.

Is that really how we want important ethical questions settled?

* Which you can bet will be corporate IP and a trade secret, right?

But those hidden algorithms are already all around us. Self-driving cars are not the cross-over product. They are just one more in a long line of many. NC posts demonstrate this problem in all its glory, from Wells Fargo, to mortgage processing, to flash crashes, to the issues surrounding black lives matter and the Sanders campaign, and the list goes on.

Self driving cars will happen (no idea on timing), but how much control the general populace has over the evolving morals embedded into the algorithm will depend on whether we get control over these bigger issues. Otherwise it will be just one more nail in the coffin.

I agree that they will happen; my misgivings are based on the issues you raised re control (“Wells Fargo, to mortgage processing, to flash crashes, to the issues surrounding black lives matter and the Sanders campaign, and the list goes on.”) where, as a society, most choices are made for us behind the scenes to benefit the few, and I don’t see that changing, but we can always hope? Embedding morals in an algorithm seems like a bridge too far, and in that respect I think it’s too complicated, but I’m pretty sure that we’re going to find out whether we like it or not. Needless to say, I’m not optimistic.

And coincidentally….

http://m.heise.de/newsticker/meldung/Unfall-mit-Autopilot-in-Deutschland-Tesla-faehrt-auf-der-Autobahn-auf-Bus-auf-3337662.html

Don’t forget one of the last century’s biggest lies; can anyone remember, back in the 1950s, “Nuclear Power Will Be Too Cheap to Meter”? How did that one work out? All of the legacy environmental waste sites still have no clean-up solutions, and costs are rising exponentially. Areas such as Rocky Flats and Hanford will be uninhabitable for the next 100,000 years! Nice job, Atoms for Peace!

Mere teething troubles, Phil.

Silicon Valley can fix this in a couple of years with disruptive technology, once venture capitalists are mobilized. /sarc

Lovely cliché medley, Jim.

To be fair, the waste problem was human-created and actually avoidable. Reactors can be built that are:

1) inherently safe: even if they lost ALL cooling water (or sodium or whatever) they cannot melt down because their core is kept below the critical threshold for a true meltdown; and 2) able to consume/use their own waste as fuel, such that the end product/waste has a half-life measured in a few hundred years vs. thousands… and the radioactivity is much less damaging than the current standard.

There’s no real reason why number 1 hasn’t already become standard except inertia. There is a purely political reason number 2 hasn’t come forward: fear of nuclear weapon proliferation. A reactor that creates and burns its own nuclear waste as fuel is also a reactor that can be used to generate weapons-grade nuclear material. A political decision was made (by Carter in the USA and then by the apparatchiks in government dealing with the Soviets) that we would forgo safer/superior technology and instead stick with ancient, WWII-era nuclear reactor designs with all the nasty, LONG-lived nuclear waste.

This can be undone. ALL the nuclear waste in storage CAN be used as fuel in a truly modern nuclear reactor. The benefit would be elimination of the very long-half-life nuclear waste as it gets converted into short-lived waste that is SO much easier to deal with.

Alternatively, we also decided to blow off thorium reactors that are orders of magnitude safer and do not produce weapons-grade waste at all. Thorium is also much more abundant than usable uranium, and thus cheaper. Know why we blew off thorium? BECAUSE WE COULDN’T MAKE NUKES IF WE WENT THAT ROUTE. So, to avoid nuke proliferation, we decided to go with filthy nuclear reactor designs that produce unmanageable waste (but also do produce waste that can be used to make weapons)??!!! All while NOT going with the designs that use up their own waste products as fuel (because it would be hard to track whether the loss of plutonium, etc, in waste actually went into fuel or went into warheads). Yet we went with dirty reactors for the express purpose of producing weapons materials!!!! WTF?

Today isn’t the Cold War. We should build a few new reactors, open to international inspection (and encourage the Russians and French to do the same) to specifically burn up the long-lived nasty waste to get rid of it. We should also encourage thorium reactor development and building to give us green energy without fears of nasty nuclear waste and weapons coming out of it. When all the nasty waste is used up by the burner reactors, they get shut down and mothballed. Then we stick with thorium only. Fixed.

The irony of all this is how the car for “Americans” was (is?) a symbol of freedom. Driving the vehicle was a measure of the individual’s control over their life and where they want to be in it. Now that seems to have died for the sake of convenience (laziness). More time to stare at mobile devices. Hence why Google is so enthusiastic about pushing the technology. WRT the safety angle, I have a suspicion most people won’t be lining up for self-driving cars because they can be, or might be, safer. We should all remember that engineers program the algorithms, and last I checked rockets still explode on the launch pad.

The tragedy of all this is that it is driving innovation in the completely wrong direction to deal with resource and ecological problems. It all perpetuates a model of city design, commerce (particularly the auto industry), and transit that is sprawling and inefficient. All for the sake of maintaining first world delusions.

Maybe depending on the ethical model you choose you can pay extra…like an option on a car today.

Self-driving cars are already among us, being tested successfully. However, I can give two instances that reduce their value in a totally automated mode (i.e., where a human cannot intervene).

First: Assume a commercial driverless truck traveling the byways carrying precious cargo. What is the easiest way to hijack this truck? Park an obstacle in front of it. Take the cargo. Even if you have a totally driverless truck, you will need a security guard and someone to figure out what to do in interesting situations.

Second: The above case also applies to driverless cars which may operate in more hazardous parts of metropolitan areas. Again, how do you hold up a driverless car? Park in front of it.

I’m going to keep this short since I don’t want to invest a lot of time on a post that will just get either dogpiled or ignored, but two quick points. First, companies are investing enormous resources into autonomous cars, and they aren’t generally naive about such investments; they wouldn’t be spending the billions unless they had good confidence in likely outcomes. Secondly, we are all top ten percent drivers or better, that’s a given, and we’ve all either never had accidents or, if we were involved, it was definitely someone else’s fault. But most people aren’t so expert, and if autonomous cars are significantly safer than human drivers (and they will quickly fail if they aren’t), all the cute and clever hypotheticals with school buses and cliffs and children chasing balls in the snow and personal anecdotes won’t mean much.

> companies are investing enormous resources

I’d argue that the investments that will pay off are going into automotive computing, and not autonomous vehicles. That leads directly to the question of valuation (i.e., the bezzle). These companies should be valued like GM or Delco, and not like, oh, Uber.