
By Dan McQuillan, a Lecturer in Creative & Social Computing, who has a PhD in Experimental Particle Physics. Prior to academia he worked as Amnesty International’s Director of E-communications. Recent publications include ‘Algorithmic States of Exception’, ‘Data Science as Machinic Neoplatonism’ and ‘Algorithmic Paranoia and the Convivial Alternative’. You can reach him at d.mcquillan@gold.ac.uk or follow him on Twitter at @danmcquillan. Originally published at openDemocracy.

There’s a definite resonance between the agitprop of ’68 and social media. Participants in the UCU strike earlier this year, for example, experienced Twitter as a platform for both affective solidarity and practical self-organisation[1].

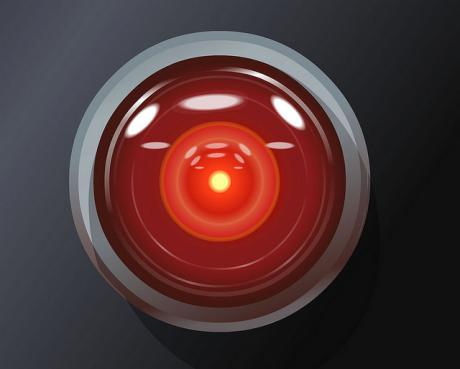

However, there is a different genealogy that speaks directly to our current condition; that of systems theory and cybernetics. What happens when the struggle in the streets takes place in the smart city of sensors and data? Perhaps the revolution will not be televised, but it will certainly be subject to algorithmic analysis. Let’s not forget that 1968 also saw the release of ‘2001: A Space Odyssey’, featuring the AI supercomputer HAL.

While opposition to the Vietnam war was a rallying point for the movements of ’68, the war itself was also notable for the application of systems analysis by US Secretary of Defense Robert McNamara, who attempted to make it, in modern parlance, a data-driven war.

During the Vietnam war the hamlet pacification programme alone produced 90,000 pages of data and reports a month[2], and the body count metric was published in the daily newspapers. The milieu that helped breed our current algorithmic dilemmas was the contemporaneous swirl of systems theory and cybernetics, ideas about emergent behaviour and experiments with computational reasoning, and the intermingling of military funding with the hippy visions of the Whole Earth Catalogue.

The double helix of DARPA and Silicon Valley can be traced through the evolution of the web to the present day, where AI and machine learning are making inroads everywhere, carrying their own narratives of revolutionary disruption; a Ho Chi Minh trail of predictive analytics.

They are playing Go better than grand masters and preparing to drive everyone’s car, while the media panics about AI taking our jobs. But this AI is nothing like HAL. It’s a form of pattern-finding based on mathematical minimisation; like a complex version of fitting a straight line to a set of points. These algorithms find the optimal solution when the input data is both plentiful and messy. Algorithms like backpropagation[3] can find patterns in data that were intractable to analytical description, such as recognising human faces seen at different angles, in shadows and with occlusions. The algorithms of AI crunch the correlations and the results often work uncannily well.
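To make the straight-line comparison concrete, here is a minimal sketch of "pattern-finding by minimisation": it fits a line to a handful of points by repeatedly nudging the slope and intercept in whichever direction reduces the squared error. The data points, the learning rate and the function name `fit_line` are illustrative assumptions, not anything taken from the essay. Backpropagation is, roughly, the bookkeeping that computes the same kind of downhill step for networks with many layers and millions of parameters.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = a*x + b by gradient descent on the mean squared error."""
    a, b = 0.0, 0.0  # start from a flat line through the origin
    n = len(xs)
    for _ in range(steps):
        # Signed error of the current line at each point.
        errors = [a * x + b - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error with respect to a and b.
        grad_a = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Take a small step downhill.
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b


# Made-up points that lie exactly on y = 2x + 1 (an assumption for the demo).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(round(slope, 3), round(intercept, 3))
```

Nothing in the loop knows what the numbers mean; it only reduces an error score, which is the point the paragraph above is making.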

But it’s still computers doing what computers have been good at since the days of vacuum tubes; performing mathematical calculations more quickly than us. Thanks to algorithms like neural networks, this calculative power can learn to emulate us in ways we would never have guessed at. This learning can be applied to any context that is boiled down to a set of numbers, such that the features of each example are reduced to a row of digits between zero and one and are labelled by a target outcome. The datasets end up looking pretty much the same whether it’s cancer scans or Netflix-viewing figures.

There’s nothing going on inside except maths; no self-awareness and no assimilation of embodied experience. These machines can develop their own unprogrammed behaviours but utterly lack an understanding of whether what they’ve learned makes sense. And yet, machine learning and AI are becoming the mechanisms of modern reasoning, bringing with them the kind of dualism that the philosophy of ’68 was set against, a belief in a hidden layer of reality which is ontologically superior and expressed mathematically[4].

The delphic accuracy of AI comes with built-in opacity because massively parallel calculations can’t always be reversed to human reasoning, while at the same time it will happily regurgitate society’s prejudices when trained on raw social data. It’s also mathematically impossible to design an algorithm to be fair to all groups at the same time[5].

For example, if the reoffending base rates vary by ethnicity, a recidivism algorithm like COMPAS will predict different numbers of false positives and more black people will be unfairly refused bail[6]. The wider impact comes from the way the algorithms proliferate social categorisations such as ‘troubled family’ or ‘student likely to underachieve’, fractalising social binaries wherever they divide into ‘is’ and ‘is not’. This isn’t only a matter of data dividuals misrepresenting our authentic selves but of technologies of the self that, through repetition, produce subjects and act on them. And, as AI analysis starts to overcode MRI scans to force psychosocial symptoms back into the brain, we will even see algorithms play a part in the becoming of our bodies[7].
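The arithmetic behind the base-rate point can be sketched in a few lines. The sketch relies on a standard confusion-matrix identity: once the base rate, the precision (the share of people flagged who do reoffend) and the recall (the share of reoffenders who are flagged) are fixed, the false positive rate is determined. All figures below are hypothetical and are not COMPAS data; the function name is likewise an assumption made for illustration.

```python
def false_positive_rate(base_rate, ppv, tpr):
    """False positive rate implied by base rate, precision and recall.

    Derivation: true positives = tpr * base_rate (per person scored),
    false positives = true positives * (1 - ppv) / ppv,
    and the false positive rate divides that by the share of
    non-reoffenders, 1 - base_rate.
    """
    return base_rate / (1.0 - base_rate) * (1.0 - ppv) / ppv * tpr


# Hypothetical tool that is "equally accurate" for both groups:
# same precision and same recall in each.
ppv, tpr = 0.7, 0.6

fpr_high_base = false_positive_rate(0.5, ppv, tpr)  # group with base rate 50%
fpr_low_base = false_positive_rate(0.3, ppv, tpr)   # group with base rate 30%

print(f"false positive rate, higher base rate: {fpr_high_base:.3f}")
print(f"false positive rate, lower base rate:  {fpr_low_base:.3f}")
```

With these made-up numbers, people who would not reoffend are wrongly flagged more than twice as often in the group with the higher base rate, even though the tool's precision and recall are identical across groups. Equalising the false positive rates instead would force precision or recall apart, which is the trade-off the impossibility claim refers to.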

Political Technology

What we call AI, that is, machine learning acting in the world, is actually a political technology in the broadest sense. Yet under the cover of algorithmic claims to objectivity, neutrality and universality, there’s an infrastructural switch of allegiance to algorithmic governance.

The dialectic that drives AI into the heart of the system is the contradiction of societies that are data rich but subject to austerity. One need only look at the recent announcements about a brave new NHS to see the fervour welcoming this salvation[8]. While the global financial crisis is manufactured, the restructuring is real; algorithms are being enrolled in the refiguring of work and social relations such that precarious employment depends on satisfying algorithmic demands[9] and the public sphere exists inside a targeted attention economy.

Algorithms and machine learning are coming to act in the way pithily described by Pierre Bourdieu, as structured structures predisposed to function as structuring structures[10], such that they become absorbed by us as habits, attitudes, and pre-reflexive behaviours.

In fact, like global warming, AI has become a hyperobject[11] so massive that its totality is not realised in any local manifestation, a higher dimensional entity that adheres to anything it touches, whatever the resistance, and which is perceived by us through its informational imprints.

A key imprint of machine learning is its predictive power. Having learned both the gross and subtle elements of a pattern it can be applied to new data to predict which outcome is most likely, whether that is a purchasing decision or a terrorist attack. This leads ineluctably to the logic of preemption in any social field where data exists, which is every social field, so algorithms are predicting which prisoners should be given parole and which parents are likely to abuse their children[12][13].

We should bear in mind that the logic of these analytics is correlation. It’s purely pattern matching, not the revelation of a causal mechanism, so enforcing the foreclosure of alternative futures becomes effect without cause. The computational boundaries that classify the input data map outwards as cybernetic exclusions, implementing continuous forms of what Agamben calls states of exception. The internal imperative of all machine learning, which is to optimise the fit of the generated function, is entrained within a process of social and economic optimisation, fusing marketing and military strategies through the unitary activity of targeting.

A society whose synapses have been replaced by neural networks will generally tend to a heightened version of the status quo. Machine learning by itself cannot learn a new system of social patterns, only pump up the existing ones as computationally eternal. Moreover, the weight of those amplified effects will fall on the most data-visible, i.e. the poor and marginalised. The net effect is, as the book title says, the automation of inequality[14].

But at the very moment when the tech has emerged to fully automate neoliberalism, the wider system has lost its best-of-all-possible-worlds authority, and racist authoritarianism metastasizes across the veneer of democracy.

Contamination and Resistance

The opacity of algorithmic classifications already has the tendency to evade due process, never mind when the levers of mass correlation are at the disposal of ideologies based on paranoid conspiracy theories. A common core to all forms of fascism is a rebirth of the nation from its present decadence, and a mobilisation to deal with those parts of the population that are the contamination[15].

The automated identification of anomalies is exactly what machine learning is good at, at the same time as promoting the kind of thoughtlessness that Arendt identified in Eichmann.

So much for the intensification of authoritarian tendencies by AI. What of resistance?

Dissident Google staff forced the company to partly drop Project Maven[16], which develops drone targeting, and Amazon workers are campaigning against the sale of facial recognition systems to the government. But these workers are the privileged guilds of modern tech; this isn’t a return of working class power.

In the UK and USA there’s a general institutional push for ethical AI – in fact you can’t move for initiatives aiming to add ethics to algorithms[17], but I suspect this is mainly preemptive PR to head off people’s growing unease about their coming AI overlords. All the initiatives that want to make AI ethical seem to think it’s about adding something, i.e. ethics, instead of about revealing the value-laden-ness at every level of computation, right down to the mathematics.

Models of radical democratic practice offer a more political response through structures such as people’s councils composed of those directly affected, mobilising what Donna Haraway calls situated knowledges through horizontalism and direct democracy[18]. While these are valid modes of resistance, there’s also the ’68 notion from groups like the Situationists that the Spectacle generates the potential for its own supersession[19].

I’d suggest that the self-subverting quality in AI is its latent surrealism. For example, experiments to figure out how image recognition actually works probed the contents of intermediary layers in the neural networks, and by recursively applying filters to these outputs produced hallucinatory images that are straight out of an acid trip, such as snail-dogs and trees made entirely of eyes[20]. When people deliberately feed AI the wrong kind of data it makes surreal classifications. It’s a lot of fun, and can even make art that gets shown in galleries[21] but, like the Situationist drive through the Harz region of Germany while blindly following a map of London, it can also be a poetic disorientation that coaxes us out of our habitual categories.

Playfully Serious

While businesses and bureaucracies apply AI to the most serious contexts to make or save money or, through some miracle of machinic objectivity, solve society’s toughest problems, its liberatory potential is actually ludic.

It should be used playfully instead of abused as a form of prophecy. But playfully serious, like the tactics of the Situationists themselves, a disordering of the senses to reveal the possibilities hidden by the dead weight of commodification. Reactivating the demands of the social movements of ’68 that work becomes play, the useful becomes the good, and life itself becomes art.

At this point in time, where our futures are becoming cut off by algorithmic preemption, we need to pursue a political philosophy that was embraced in ’68, of living the new society through authentic action in the here and now.

A counterculture of AI must be based on immediacy. The struggle in the streets must go hand in hand with a detournement of machine learning; one that seeks authentic decentralization, not Uber-ised serfdom, and federated horizontalism not the invisible nudges of algorithmic governance. We want a fun yet anti-fascist AI, so we can say “beneath the backpropagation, the beach!”.

References

[1] Kobie, Nicole. ‘#NoCapitulation: How One Hashtag Saved the UK University Strike’. Wired UK 18 Mar. 2018.

[2] Thayer, Thomas C. A Systems Analysis View of the Vietnam War: 1965-1972. Volume 2. Forces and Manpower. 1975. www.dtic.mil.

[3] 3Blue1Brown. What Is Backpropagation Really Doing? | Deep Learning, Chapter 3. N.p. Film.

[4] McQuillan, Dan. ‘Data Science as Machinic Neoplatonism’. Philosophy & Technology (2017): 1–20.

Exactly.

AI is to consciousness what conjuring is to magic.

While I agree in essence with the main thrust of your statement, I feel compelled to quibble with some of the detail of your analogy. The operations of AI as implemented using neural nets are every bit as occult as the operations of magic, if not more so. Magic almost always has some perceptible logic to it. The how is mysterious, but you know you must close the circle of blood, speak the right words, invoke the right spirits by their magical names and so on. I don’t think neural-net-based AI can claim even that much transparency into its workings. Bayesian nets might offer a little more insight into their operation, but I think that’s a reflection of the level of insight that must be designed into the networks through their human designers’ grasp of a problem and understanding of the statistical data used to construct the Bayesian nets.

Discussions of AI and consciousness parallel discussions about the nature of humankind and our peculiar language abilities and what they suggest about the nature of consciousness. The anatomy of modern humans seems to have fully evolved some time around 200,000 years ago. One school of thought argues that around 100,000 years ago, perhaps as recently as 70,000 years ago, humankind changed radically as reflected in the human artifacts from that era to the present. It was as though humans suddenly became conscious in a new, unprecedented way. Other schools of thought argue nothing changed suddenly. Human capabilities evolved. Nothing radically changed 100,000 years ago.

I find the idea of a sudden change most compelling. An important tenet underlying this view is the idea that the human mind is structured and that innate structure is inborn and serves as a tool for making the world intelligible to a human newborn. But if there is an innate structuring of the human mind that enables the rapid learning of language our infants demonstrate — then what is that innate structure? Accepting an innate structuring of the human mind for language acquisition and performance easily shifts to notions of an innate structuring of the mind as the basis for vision and other capabilities … perhaps for consciousness.

I believe many of the early initiatives in AI grew from studies of how our vision works or how we recognize and create speech. These initiatives pursued the idea that we might better understand humankind through study of effective simulation of human capabilities. David Marr’s book “Vision” encapsulated this approach for me as an effort to understand how vision works by creating computer algorithms to simulate vision. The idea is that we might learn how a process works by simulating its operation. Experiments in vision suggest ideas for the existence of some visual subprocess. An algorithm for simulating that visual subprocess as we understand its operation can serve as a confirmation of sorts for our intuitions — if it behaves in a fashion similar to the behaviors of vision demonstrated by test subjects. I believe this notion is a basic tenet of cognitive psychology.

In vision studies, the visual process was broken down into a series of subprocesses which, when stacked together, might simulate the vision process. In speech recognition, the hearing and interpretation of speech sounds were mapped to grammar structures in efforts to automate the understanding of language, although as these efforts ran into difficulties the problem of mapping speech to representations of speech (the speech recognition problem per se) took prominence. Gradually both efforts succumbed to the practical demands for speech recognition. Models of the innate structures of the mind grew seemingly without limits and without the kinds of practical success which might assure funding by those increasingly interested in applications rather than deeper understanding. Similar issues affected vision research and research into other areas of cognitive psychology.

Then along came neural nets as a tool for simulated computer learning. They ‘work’ … given enough nodes, enough training data, and training, and a relatively specific problem. But they don’t offer much insight into how they operate.

The Internet is balkanized. Every political persuasion can have its own dystopia. Ideological Second Life. The fullest development of individual libertarianism. L’Internet, c’est moi.

I read this essay as … advanced enough technology tends toward utopian socialism. Can’t get more Communist Manifesto than that. Tay says different.

The Google experiment produced a two way communication that was unintelligible to humans. That is usually called gibberish. For humans to aspire to a joint human-machine integration would be incoherent.

I read this essay as … advanced enough technology tends toward utopian socialism.

Where do you find that in the article?

(Emphasis in quotes is mine)

The author seems to disagree: “A society whose synapses have been replaced by neural networks will generally tend to a heightened version of the status quo.”

Status quo, in this case, being: neoliberalism, austerity.

And, as usual, the poor get poorer: “The net effect being, as the book title says, the automation of inequality[14]” (not exactly utopian socialism).

Old style neural networks? You are right. Deep learning ™ claims otherwise. Basically deep learning ™ claims to have a way to defeat ossification, thru new data allowing the trashing of the current paradigm, provided that the new data is actually new, not just confirmation bias. A truly fluid algorithm would be chaotic, with punctuated equilibria, those equilibria points changing as the environment changes.

As for utopian socialism … Marxism has two things going for it. Technology will lead to utopia, except for ossified social structures, which need to be overthrown. Most technophiles agree with the first point, and many agree with the second as well. But updated from steam power age to the computer age.

AI is to consciousness what conjuring is to magic.

Really? How do you know when you can’t tell anyone what consciousness is and have to compare it to magic?

Because humans have consciousness, their species self-conceit leads them to think that it’s some majestic emergent property of higher intelligence, something magic — as you prefer to believe — which human brains manifest and animal brains don’t.

It’s really not. It’s an evolutionary hack or kluge to get around the fact that all animal brains – including ours – are made of soggy grey meat neurons doing electrochemical signaling at limited speeds of one-hundred Hz or less.

Millions of years ago, the first rudimentary nervous systems evolved behind eyes to guide organisms so they could find food and survive, and consisted of only a few thousand neurons. In Earth’s oceans, there’s still a very simple organism called a sea squirt (a tunicate) that starts out mobile with about eight hundred neurons and then in its mature phase becomes sessile, rooted in one place, at which point it loses those neurons.

The sea squirt is an exception. Across the broad front of animal evolution, nervous systems gradually grew bigger and more complex, and modern human brains have a hundred times more neurons than our galaxy has stars. So evolution’s problem became keeping all those neurons bound together so the organism still functioned in real time despite that slow interneuronal signaling. Evolution’s answer was consciousness – a constant oscillation at forty Hz of the whole thalamocortical system, synchronizing all the neurons into more or less one brain. That synchronization is what we experience as ‘consciousness’.

As neuroimaging tech has shown for decades, nevertheless, almost all the human brain’s work is actually done by unconscious neuronal modules processing inputs and reaching decisions, which maybe then get relayed to our consciousness. There, one or several seconds later we interpret those decisions retroactively as our ‘free will.’ In a sense, consciousness is something of a bottleneck — about one-millionth or less of the processing going on in our brain at any one time.

What is important about consciousness is that it provides agency.

https://en.wikipedia.org/wiki/Agency_(philosophy)

AI doesn’t have that.

So epiphenomenalism about the mental.

“There, one or several seconds later we interpret those decisions retroactively as our ‘free will.’”

Then what is the “we” being epiphenomenal?

Then what is the “we” being epiphenomenal?

That forty Hz cycle of the thalamocortical system, synchronizing all the neurons into more or less one entity acting in real time.

Yes, the semantics are difficult. That’s a failure of the semantics, not the neuroscience.

In one sense, the Buddhists come the nearest: the “we” or “I” that “we” experience is merely an illusion.

Machine learning and conjuring are both perfectly explicable mechanical processes.

Both can be quite breathtaking in their effects.

It’s the promotion of the idea of conscious machines (automata being aromatically superior) that is silly. I don’t think we are any closer to The Forbin Project than we were when the film was made.

I had no idea consciousness (which I have always seen as quite magical) had been explained so completely.

That is, if attributing a bunch of identified processes as consciousness is an explanation.

Neuroimaging has been great for colour supplements so far, but has yet to yield much insight, let alone clinical utility.

Thanks for comment. And humans have come to rely on mathematics because it seems to describe many natural processes in very precise ways while failing at some other natural processes. Arguably evolution itself is a kind of algorithm. The conceit, the “magic,” is assuming we understand how it all works. Perhaps nature is just an algorithm far more complex than what we humans have so far been able to conjure up for ourselves. Therefore AI is just a model and, like those economics models, inevitably flawed when applied to real world applications.

It is also, as the article above in its thorny way indicates, a kind of con that tries to cloak software created by humans with the authority of mathematics. Our behavior and our social systems are still governed by those not fully understood forces created through evolution.

Like “economics” is “created by humans” to cloak looting justifications with the authority of mathematics.

Jihad, Butlerian: (see also Great Revolt) — the crusade against computers, thinking machines, and conscious robots begun in 201 B.G. and concluded in 108 B.G. Its chief commandment remains in the O.C. Bible as “Thou shalt not make a machine in the likeness of a human mind.”

Herbert refers to the Jihad many times in the entire Dune series, but did not give much detail on how he imagined the actual conflict. In God Emperor of Dune (1981), Leto II Atreides indicates that the Jihad had been a semi-religious social upheaval initiated by humans who felt repulsed by how guided and controlled they had become by machines:

“The target of the Jihad was a machine-attitude as much as the machines,” Leto said. “Humans had set those machines to usurp our sense of beauty, our necessary selfdom out of which we make living judgments. Naturally, the machines were destroyed.” https://en.m.wikipedia.org/wiki/Butlerian_Jihad

And there is this set of obvious, and not-so, programming issues: http://codesnippets.wikia.com/wiki/Infinite_Loop Wonder what wonders are buried in those millions of lines of code, human-written and then machine-generated under the conditions and with the facilities that the human coders initiated things with?

What I find troubling is that while machine learning has been developed by simulating the pattern recognition mechanisms in the brain (i.e. neural nets), and gives results that are deeply analogous to human intuition or hunches (things we know, but don’t know how we know), the fact that a computer spits out these hunches gives them a veneer of objectivity. So the hunches coming from a computer, “knowledge” whose provenance is unknowable, emerging as it does from patterns or pattern-like coincidences in the human-selected training data, are privileged. If, OTOH, a human loan officer rejected an application based on a gut feeling we would consider that generally unacceptable.

This means that while AIs are probably more susceptible to apophenia than humans, we will accept the conspiracy theories they spin with less skepticism than those coming from a human.

That said, I, for one, welcome our new AI overlords.

It’s actually a lot closer to how human brains work than anything that’s come before it in the software space. The main danger is that because of how software has worked in the past, people have certain preconceptions about it. For example, we think that because software represents a faithful encoding of rules that its designers have given it, that means that it can’t be wrong (if programmed correctly). For machine learning and AI, that’s no longer true. Just like humans, it can be – and sometimes is – wrong.

We also think software is objective. That may or may not be true for AI, but typically won’t be. Instead, it will faithfully learn (and apply) any biases that are present in its training data, whether obvious or latent. Just as a human child raised in an upscale New York neighborhood is going to have a different outlook from one raised in Tehran (or ISIS-controlled Raqqa), AIs will have different outlooks and biases depending on how they are trained.

There are also some things that AI still can’t do that humans can. To take your loan officer example, a human would realize that they needed a sound legal reason for rejecting an application, and would also have to produce evidence in support of their decision that would stand up to scrutiny by a third party. While it’s theoretically possible to do this with AI, currently no models exist that support this kind of higher level abstraction. They can recognize low level patterns very effectively and do classification based on them, but inferring a decision methodology based on that classification and setting out a chain of reasoning in auditable fashion is a step too far at present.

This essay is packed with information and observations on AI, its weaknesses, and where it’s going (taking us). It’s also dense (though clear). Fortunately, it’s short enough so one can read it several times.

One might read as companion, Homo Ludens by Johan Huizinga.

I may be wrong on this point but I’ll run it up a flag-pole and see if anybody salutes it. For the past few decades the amount of data being collected has turned into a raging flood. The intelligence agencies alone collect so much information that I seriously doubt that they can find their proverbial pins in their digital haystacks. If any more data is collected by the NSA, they may have to build a second facility in Utah just to store all the selfies that they collect. So I was wondering if this was a major impetus for AI to come into vogue. That is, a piece of software that would be able to sift through all this colossal amount of data and make some kind of sense out of it all and make it more useful.

Since then it has been put to other uses, though it does not seem to be doing such a great job of them. I read just yesterday that an AI was being used to recruit people by a major organization, and the focus of the story was how, because of the algorithms used, women were being excluded by it in favour of males. If you read the story closer, however, the real reason that they had to shut it down was that the quality of those candidates being chosen was just rubbish as compared to what a human would do. I do wonder if a new language needs to be put together to write these AIs. One in which logical flaws like this would more easily show up.

I think you are on to something. There is more data than anyone really knows what to do with and it’s in the hands of people who don’t really understand mathematics.

Humans like to find patterns. We look at random cloud configurations and find faces or animals, things we as humans find familiar. But clearly we also realize that there are not actual giant people or animals floating in the sky just because someone was able to discern a whimsical figure for a brief moment.

Humans program computers to use algorithms created with a human bias. Yet for some reason far too many humans (the vast majority who don’t really understand mathematics) are willing to believe that the computer is infallible when all it’s really doing is the human equivalent of finding the Virgin Mary’s face on a piece of toast.

Yes, the computer can very often be correct, but not always. It sometimes takes a human being to put the data into context and make sense of it.

One example that comes to mind is the Deflategate controversy a few years ago where Tom Brady was accused of letting the air out of the football in order to get a better grip. One statistical study crunched a lot of data regarding fumbles in the NFL and it showed that not only did the Patriots team as a whole fumble the ball less than other teams, but Patriots players had a lower fumble percentage playing with the Patriots than they had when playing for other teams before or after their Patriot tenure. The conclusion from the data was that this must be because the Patriots were playing with a different, less inflated ball than other teams.

What the data doesn’t take into consideration however is the coach of the Patriots, who has a very low tolerance for mistakes. So if you fumble with the Patriots once, you might find yourself on the bench while someone who can hold the ball better gets a shot to play. Do it again and you’re likely to be playing on another team. That is the kind of esoteric knowledge that doesn’t fit well with algorithms.

Rev Kev wrote: So I was wondering if this was a major impetus for AI to come into vogue. That is, a piece of software that would be able to sift through all this colossal amount of data and make some kind of sense out of it all and make it more useful.

Basically, yes. It’s the combination of both colossal quantities of data and the processing power to handle it.

Geoff Hinton — who’s probably the central figure responsible for the renaissance in AI/deep learning/neural networks — will tell you as much and also that he and the neural network pioneers back in the 1980s, like Dave Rumelhart, had a lot of these ideas then. But there wasn’t the massive processing power to make them work.

https://en.wikipedia.org/wiki/Geoffrey_Hinton

But people will bring their own values to that new language, too. And they will be people with privileges and power and representatives of specific institutions.

You’re absolutely right that the flood of data is insane. But it would be a mistake to characterize its use as just a lot of bungling. Some of it is being put to quite sinister purposes, plenty effective if not fair:

https://theintercept.com/2018/10/08/food-stamps-snap-program-usda/

https://theintercept.com/2016/11/18/troubling-study-says-artificial-intelligence-can-predict-who-will-be-criminals-based-on-facial-features/

I’m too pagan for all this. I’ll just make this observation about modern fiction. I’m a crime mystery addict. Serial crime; law, order, justice; poetic justice and Greek tragedy. One thing is eternally significant no matter how sophisticated (complex) we think we are. It is our Fate – our hapless flailing Fate. When big data can overcome the infinity of random variables that govern Fate we’ll already have become immune to mind control by virtue of our evolution. DNA is much quicker than math… plus it actually has significance.

That one is not what I would call a belief; it is reasonably solid and generally accepted physics that there is at least one, probably more, “hidden layers of reality” behind what we perceive (can experience and possibly measure) as the universe. Something generates all the particles!

There is a “too weird to be total coincidence” quality to the way that theorists cook up theoretical possibilities in arcane maths like Quantum Information Theory and then experimenters prove that the effects do indeed exist.

The grave risk with AI, as I and the article see it, is that people believe that AI is smarter than people even though current AI is about as aware as a 1960s mechanical calculator. By relying on AI in all forward decisions they will basically fix society and human development in rapid-hardening 1000-year concrete!

Stability is fine and all, when one is sitting on the top of society and the environment does not need to be adapted to because one has infinite resources and energy. Significant elite resources and energy are directed at selling AI to decision makers, precisely because the elites know what their game is here.

The elites know this approach works. Calcification has already happened to some degree with “Dumb AI” and NPM: spreadsheet-level economic models which model “society” and are used to “check” the financial viability of legislation, but which just so happen to favour certain outcomes.

In Denmark the models are called ADAM and DREAM. The model-based revenue checking has, IMO and with a grain of salt, led to parliament unthinkingly rubber-stamping 99.5% of all public expenditure and then arguing fiercely over the last 0.5% for the rest of the political calendar. Which is why good things rarely happen on a political level and every big change, like climate change or migration or fraud, leaves our leaders transfixed, staring into the headlights of oncoming disaster.

With modern AI, people have real trouble understanding that it is still just “clockworks and wheels” inside, there is no insight or understanding in an AI (that we currently know how to build). This makes AI even more toxic than the “Dumb AI”, where people knew that “these are models built by our best experts, but, still models”.

—

If one wanted to build an AI with consciousness, I believe that it has to be based on quantum computing of some kind because quantum effects are the connection between consciousness and the universe. It might not even be that hard to do. There is a field in unconventional computing called “reservoir computing” where it seems pretty much any nonlinear medium can be applied for serious computing purposes, the “computer” usually beating silicon by a couple of orders of magnitude in performance, with the downside of being limited to a dedicated algorithm and not really programmable.

The hard part is building a conscious AI that works for “us”, while that part is the easy part with the AIs that we currently know how to build. At least for a very, very small subset of “us”, which is what matters to the “AI-pushers”.

Ethics conversations as applied to economics always remind me of that letter from Marx about how liberal economists approach big questions as opposed to how socialists do: “They start in the heavens and work their way down. We start from here on earth and work our way up.” I’m paraphrasing, but perhaps the point is clear. As idealistic overlay, brought in post hoc, laid like fairly blankets on a dying groaning contradictory beast of a system–or pile of them, as you prefer–how efficacious are your ethics going to be?

I’ll give them this: at least, from five thousand feet, they do seem to discern that there IS a system down here. Which is more than can be said for the patches and sticking plasters of most reforms.

A hint of the old liberal catch-all remedy also glimmers there: if only everyone will be nicer, we can dodder on! And let’s face it, that’s a consoling belief to hold.