By Lambert Strether of Corrente

We have called attention to the use of “dark patterns” on websites before (2013), but until now the literature has been confined to definitions, examples, and classifications from website developers and User Interface/User Experience designers. Now we have a full-fledged academic study (“Dark Patterns at Scale”) that quantifies the number of sites that use dark patterns, and puts some rigor into the definitions and examples. I’m going to start with the original literature, where I’ll define terms, then summarize the study, and then briefly look at legislation introduced in the Senate (the “DETOUR” Act) to which the new study should lend support.

The term “dark pattern” was coined by independent user experience consultant Harry Brignull in 2010; he set up the “Dark Patterns” website to present a classification and examples. His definition:

Dark Patterns are tricks used in websites and apps that make you do things that you didn’t mean to, like buying or signing up for something.[1]

As a sidebar, the EIT Lab (“European IT Law by Design”, at the University of Louvain) gives a somewhat more evolved definition:

Dark patterns are choice architectures used by many websites and apps to maliciously nudge users towards a decision that they would not have made if properly informed. Such deceptive [practices] that exploit individuals’ heuristics and cognitive biases (e.g. interfaces designed to hide costs until the very end of the transaction, services and products added to the consumer’s basket by default, etc.) are already well-known and sanctioned in the consumer protection domain. Regrettably, dark patterns have become widespread also in the area of data protection, where users are de facto forced to accept intrusive privacy settings, because of the way the information is framed and privacy choices presented by website’s operators. When users are tricked by design not only they can be harmed but also their trust in the digital market is likely to be affected. Dark patterns are therefore posing an additional challenge to data protection law, that needs to be addressed in an interdisciplinary way.

I like the introduction of “choice architectures,” because that would make dark patterns a sort of bent nudge theory (assuming nudge theory is not itself bent). End sidebar.

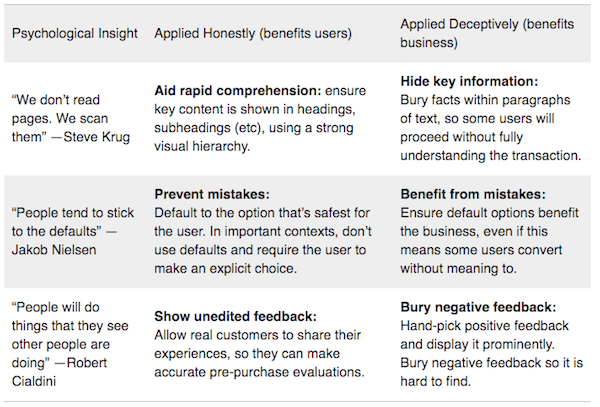

In 2011, Brignull expanded on his ideas at the A List Apart webzine, writing:

Let’s continue a while as evil web designers: perhaps you’ve never thought about it before but all of the guidelines, principles, and methods that ethical designers use to design usable websites can be easily subverted to benefit business owners at the expense of users. It’s actually quite simple to take our understanding of human psychology and flip it over to the dark side. Let’s look at some examples:

Take the second example, “People stick to the defaults.” (Brignull calls this a psychological insight, but I think the term of art is “cognitive bias.”) As a Mac user I was long ago trained (because the Apple Human Interface Guidelines were ethical) to go into any new software and adjust the user preferences, because software works for me, not the other way round. However, if I were not a suspicious old codger, I wouldn’t have gone into the settings of my horrid Android phone (sorry) and turned off as much of the default stupidity and exploitation as I possibly could. (Sadly, in order to do that, I had to give the phone company an email account (a burner, naturally), which is a fine example of the “forced enrollment” dark pattern identified in the “Dark Patterns at Scale” study.)

Importantly, firms don’t create dark patterns out of the sheer desire to be evil (leaving Facebook and Uber aside, of course) but for profit. Brignull goes on:

[Business] can become accustomed to the resultant revenue, and unlikely to want to turn the tap off. Nobody wants to be the manager who caused profits to drop overnight because of the “improvements” they made to the website.

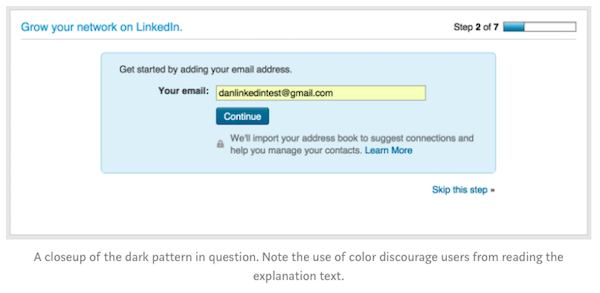

An example of a dark pattern at the user interface level (Brignull calls this “Friend Spam”) comes from LinkedIn. Here’s the tricky screen:

Tricked user Dan Schlosser explains:

LinkedIn asks you to “Get started by adding your email address.” There is a note explaining what this button does, but because it is put in light gray text next to a bold blue “Continue” button, they get most people to blindly click ahead. This is definitely a dark pattern. In fact, it’s really a lie. This page is not for “adding your email address,” it’s for linking address books.

BWA-HA-HA-HA! You think you’re just signing up, but you’re giving LinkedIn your entire address book! (And constructing LinkedIn’s equivalent of Facebook’s social graph. To be fair, LinkedIn was fined for this and dropped the practice).

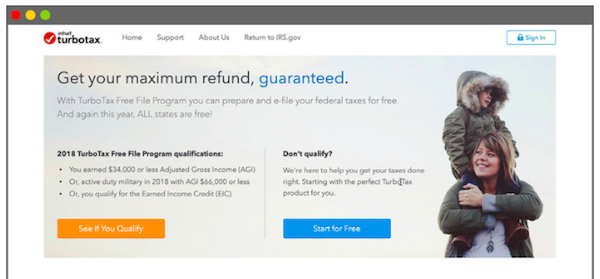

But dark patterns can also be devised at a level far above buttons in the user interface: at the site level, or even at the level of the internet as a whole, as TurboTax shows. From ProPublica, “Here’s How TurboTax Just Tricked You Into Paying to File Your Taxes,” the background:

Intuit and other tax software companies have spent millions lobbying to make sure that the IRS doesn’t offer its own tax preparation and filing service. In exchange, the companies have entered into an agreement with the IRS to offer a “Free File” product to most Americans — but good luck finding it.

It’s a long article, because it details the really extravagant lengths that TurboTax went to in order to stick their hand into your pocket. They made it impossible to get to the real free site from the paid site. They invented a fake “free” site that was confusingly similar to the real free site, but was not free. Then they made sure that the fake site came up first in Google searches, and the real free site was buried. If you knew what you were looking for, you could Google for the real free site. Going there, users would see this dialog:

Another Google search brought them to a page with two options: “See If You Qualify” and “Start for Free.” The “Start for Free” link brought them back to the version of TurboTax where they had to pay, but the “See If You Qualify” link finally took them to the real Free File program.

BWA-HA-HA-HA! It’s GENIUS! (And an entire web development team and its managers were paid quite well to implement this crooked scheme, too. Intuit is, of course, located in Mountain View, California.)

So, with those definitions and examples under our belt, we can turn to Arunesh Mathur, Gunes Acar, Michael Friedman, Elena Lucherini, Jonathan Mayer, Marshini Chetty, and Arvind Narayanan, “Dark Patterns at Scale: Findings from a Crawl of 11K Shopping Websites” (PDF), Proceedings of the ACM on Human-Computer Interaction, November 2019. (Here is a mobile-friendly version of the text.) Here is the conclusion:

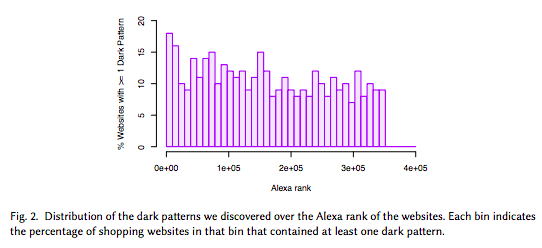

In this paper, we developed automated techniques to study dark patterns on the web at scale. By simulating user actions on the ∼11K most popular shopping websites, we collected text and screenshots of these websites to identify their use of dark patterns. We defined and characterized these dark patterns, describing how they affect users’ decisions by linking our definitions to the cognitive biases leveraged by dark patterns. We found at least one instance of dark pattern on approximately 11.1% [1,254] of the [11K] examined websites. … Furthermore, we observed that dark patterns are more likely to appear on popular websites. Finally, we discovered that dark patterns are often enabled by third-party entities, of which we identify 22, two of which advertise practices that enable deceptive patterns. Based on these findings, we suggest that future work focuses on empirically evaluating the effects of dark patterns on user behavior, developing countermeasures against dark patterns so that users have a fair and transparent experience, and extending our work to discover dark patterns in other domains.

The “simulating user actions” part is really amazing and fun stuff. First, they built a crawler to detect shopping sites. Then, they curated the list of URLs the first crawler found, and fed that to a second crawler:

capable of navigating users’ primary interaction path on shopping websites: making a product purchase. Our crawler aligned closely with how an ordinary user would browse and make purchases on shopping websites: discover pages containing products on a website, add these products to the cart, and check out.
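To make the crawler’s task concrete, here is a minimal Python sketch of the purchase path just described: discover product pages, add one to the cart, and head for checkout. It is not the authors’ crawler. The in-memory `MOCK_SITE`, the “add to cart” heuristic in `looks_like_product_page`, and the fixed `/cart` and `/checkout` URLs are all hypothetical assumptions standing in for real pages loaded in a browser.

```python
from collections import deque

# Hypothetical in-memory site: URL -> visible page text and outgoing links.
# A real crawler would load and render live pages in a browser instead.
MOCK_SITE = {
    "/": {"text": "Welcome! Shop new arrivals.", "links": ["/shoes", "/about"]},
    "/about": {"text": "About us", "links": ["/"]},
    "/shoes": {"text": "Shoes", "links": ["/shoes/runner"]},
    "/shoes/runner": {"text": "Runner Sneaker $59.99 Add to cart", "links": ["/cart"]},
    "/cart": {"text": "Your cart", "links": ["/checkout"]},
    "/checkout": {"text": "Checkout", "links": []},
}

def looks_like_product_page(text):
    # Assumed heuristic: a product page offers an "add to cart" action.
    return "add to cart" in text.lower()

def find_product_pages(site, start="/", max_pages=50):
    """Breadth-first search from `start`, visiting at most `max_pages` pages."""
    seen, queue, products, visited = {start}, deque([start]), [], 0
    while queue and visited < max_pages:
        url = queue.popleft()
        visited += 1
        page = site.get(url)
        if page is None:
            continue  # dead link
        if looks_like_product_page(page["text"]):
            products.append(url)
        for link in page["links"]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return products

def purchase_path(site, start="/"):
    """Simulated primary interaction path: product page, then cart, then checkout."""
    products = find_product_pages(site, start)
    if not products:
        return []
    # Hypothetical fixed cart/checkout URLs; real sites differ widely.
    return [products[0], "/cart", "/checkout"]

print(purchase_path(MOCK_SITE))  # ['/shoes/runner', '/cart', '/checkout']
```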

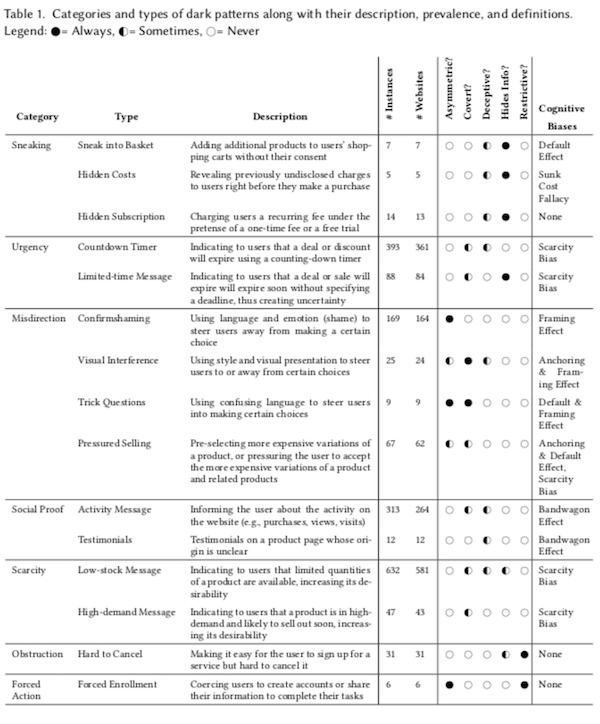

They then manually examined the primary interaction paths, curated them for dark patterns, and threw them into buckets. Table 1:

Table 1 summarizes categories (for example, “Obstruction”) and types (“Hard to Cancel”), giving a description of each, the number of sites and instances, and its place in the authors’ taxonomy (whether asymmetric, covert, deceptive, information hiding, or restrictive). Using their categories, I suppose that the LinkedIn example above would be classified as “Visual Interference,” because users would skip the grey type that explained what the prominent button really did. The TurboTax example would be “Trick Questions,” because of the deceptive language. Readers, you may test the robustness of these categories and types against your own online shopping experiences, if any.

Here is Figure 2, which shows that more popular websites are more likely to use dark patterns:

And then, there is “an ecosystem of third-party entities” that sells dark pattern development:

We discovered a total of 22 third-party entities, embedded in 1,066 of the 11K shopping websites in our data set, and in 7,769 of the Alexa top million websites…. Many of the third-parties advertised practices that appeared to be—and sometimes unambiguously were—manipulative: “[p]lay upon [customers’] fear of missing out by showing shoppers which products are creating a buzz on your website” (Fresh Relevance), “[c]reate a sense of urgency to boost conversions and speed up sales cycles with Price Alert Web Push” (Insider), “[t]ake advantage of impulse purchases or encourage visitors over shipping thresholds” (Qubit)…..

In some instances, we found that third parties openly advertised the deceptive capabilities of their products. For example, Boost dedicated a web page—titled “Fake it till you make it”—to describing how it could help create fake orders. Woocommerce Notification—a Woocommerce[2] platform plugin—also advertised that it could create fake social proof messages: “[t]he plugin will create fake orders of the selected products”

Note, too, that the problem may be even worse than the authors describe, because the scope of the study is limited:

1,818 represents a lower bound on the number of dark patterns on these websites, since our automated approach only examined text-based user interfaces on a sample of products pages per website.

In other words, if the dark pattern was implemented as a graphic, their crawlers wouldn’t catch it. Also, the study would not catch dark patterns above the UI/UX level, as with TurboTax creating an entire fake site, and then gaming Google search to point to it.
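The limitation is easy to see in a toy detector. The sketch below flags urgency, scarcity, and social-proof messages with a few regular expressions; the patterns, labels, and function name are illustrative assumptions, not the study’s method, which combined automated text extraction with manual review. Because it only sees text, a “Sale ends in…” banner rendered as an image never reaches it.

```python
import re

# Illustrative patterns only (assumptions), loosely modeled on scarcity,
# urgency, and social-proof messages found on shopping pages.
PATTERNS = {
    "low_stock": re.compile(r"\bonly \d+ left\b", re.IGNORECASE),
    "countdown": re.compile(r"\b(?:offer|sale|deal) ends in\b", re.IGNORECASE),
    "social_proof": re.compile(r"\b\d+ (?:people|others) (?:bought|are viewing)\b", re.IGNORECASE),
}

def flag_text_segments(segments):
    """Return (segment, label) pairs for every text segment that matches a pattern."""
    hits = []
    for segment in segments:
        for label, pattern in PATTERNS.items():
            if pattern.search(segment):
                hits.append((segment, label))
    return hits

# Text the crawler could extract from the page...
page_text = ["Only 3 left in stock!", "Free returns", "Sale ends in 02:15:00"]
# ...versus a banner whose message exists only as pixels: no text to examine.
image_banner_text = []

print(flag_text_segments(page_text))
print(flag_text_segments(image_banner_text))  # []
```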

Finally, let’s turn to S. 1084, the “Deceptive Experiences To Online Users Reduction Act” (DETOUR Act). Introduced by Senator Mark Warner (D-VA) with Senator Deb Fischer (R-NE) as original co-sponsor, it has sadly gained no additional co-sponsors. (Senator Josh Hawley (R-MO) is also toiling in the same vineyard with the Social Media Addiction Reduction Technology (SMART) Act.) Warner’s bill has a behavioral or psychological research component, but here is the section relevant to dark patterns:

SEC. 3. UNFAIR AND DECEPTIVE ACTS AND PRACTICES RELATING TO THE MANIPULATION OF USER INTERFACES.

(a) Conduct Prohibited.—

(1) IN GENERAL.—It shall be unlawful for any large online operator—

(A) to design, modify, or manipulate a user interface with the purpose or substantial effect of obscuring, subverting, or impairing user autonomy, decision-making, or choice to obtain consent or user data;

(B) to subdivide or segment consumers of online services into groups for the purposes of behavioral or psychological experiments or studies, except with the informed consent of each user involved; or

(C) to design, modify, or manipulate a user interface on a website or online service, or portion thereof, that is directed to an individual under the age of 13, with the purpose or substantial effect of cultivating compulsive usage, including video auto-play functions initiated without the consent of a user.

Gizmodo describes the bill as follows:

According to ZDNet, practices that could be targeted under the bill include suddenly interrupting tasks unless users hit consent buttons, setting “agree” as the default option for privacy settings, and creating convoluted procedures for users to opt-out of data collection or barring access “until the user agrees to certain terms.”

In other words, this would radically change how a handful of massive companies whose entire business model relies on monetizing troves of user data operate.

“Any privacy policy involving consent is weakened by the presence of dark patterns. These manipulative user interfaces intentionally limit understanding and undermine consumer choice. Misleading prompts to just click the ‘OK’ button can often transfer your contacts, messages, browsing activity, photos, or location information without you even realizing it,” Fischer added. “Our bipartisan legislation seeks to curb the use of these dishonest interfaces and increase trust online.”

As we have seen, Fischer is quite correct. The industry critique I have found focuses, tellingly, not on the patterns themselves, but on the definition of “large online operator”. Will Rinehart of the American Action Forum:

While the Act’s goals are laudable, it suffers from a crippling fault: ambiguity. Its broad language would give the Federal Trade Commission (FTC) legal space to second guess every design decision by online companies, and, in the most expansive possible reading, it would make nearly all large web sites presumptively illegal.

You say “make nearly all large web sites presumptively illegal” like that’s a bad thing!

The Act defines large online operators as any online service with more than 100 million “authenticated users of an online service in any 30 day period” that is also subject to the jurisdiction of the FTC. Conspicuously, the Act doesn’t define what constitutes an “authenticated user,” which is important for understanding its scope. If authenticated user means that a site must have user profiles, then Google wouldn’t be included at all because it doesn’t require users to create a profile. Furthermore, if these 100 million users all had to be in the United States, then only Facebook, Instagram, and Facebook Messenger would be regulated because only these sites hit the threshold.

So strike out “authenticated.” Or are only authenticated users entitled to avoid trickery? The critique also urges (tl;dr) that “manipulation of user interfaces” is good, actually, and that everybody does it. I would urge legislators crafting the bill simply to talk to Mathur, Acar, Friedman, Lucherini, Mayer, Chetty, and Narayanan to find out what businesses do and do not do, and what is deceptive and what is not. In essence, Rinehart’s idea is that consumer fraud is indistinguishable from normal business practice in the digital realm. I don’t think the country has yet fallen that low.

Oh, the phishing equilibrium part: I don’t want to become tedious by constantly hammering Akerlof and Shiller’s long definition[3], so I’ll use my “on a postcard” version: “If fraud can happen, it will already have happened.” It’s hard to think of a better example of this principle than dark patterns. The fact that there’s an entire ecosystem of third parties that supports scamming the users makes the entire situation all the more disgusting.

NOTES

[1] Akerlof and Shiller give an operational definition of the outcome of a phishing equilibrium for users: “[M]aking decisions that NO ONE COULD POSSIBLY WANT” (caps in original); for example, retaining your subscription when you meant to cancel it.

[2] One might wonder if Adobe’s hilarious “Woo Woo” video is a sly insider reference:

[3] From NC, “Angling for Dollars: A Review of Akerlof and Shiller’s Phishing for Phools”

The fundamental concept of economics is … the notion of market equilibrium. For our explanation, we adapt the example of the checkout lane at the supermarket. When we arrive at the checkout at the supermarket, it usually takes at least a moment to decide which lane to choose. This decision entails some difficulty because the lines are, as an equilibrium, of almost the same length. This equilibrium occurs for the simple and natural reason that the arrivals at the checkout are sequentially choosing the shortest line.

The principle of equilibrium, which we see in the checkout lanes, applies to the economy much more generally. As businesspeople choose what line of business to undertake — as well as where they expand, or contract, their existing business — they (like customers approaching checkout) pick off the best opportunities. This too creates an equilibrium. Any opportunities for unusual profits are quickly taken off the table, leading to a situation where such opportunities are hard to find. This principle, with the concept of equilibrium it entails, lies at the heart of economics.

The principle also applies to phishing for phools. That means that if we have some weakness or other — some way in which we can be phished for fools for more than the usual profit — in the phishing equilibrium someone will take advantage of it[2]. Among all those business persons figuratively arriving at the checkout counter, looking around, and deciding where to spend their investment dollars, some will look to see if there are unusual profits from phishing us for phools. And if they see such an opportunity for profit, that will (again figuratively) be the “checkout lane” they choose.

And economies will have a “phishing equilibrium,” in which every chance for profit more than the ordinary will be taken up.
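The checkout-lane example can be checked with a few lines of Python. The sketch below is a toy model, not anything from Akerlof and Shiller: shoppers arrive one at a time and, as a simplifying assumption, nobody is served while they arrive. Shoppers who pick the shortest line keep every lane within one person of every other; shoppers who pick at random get no such guarantee.

```python
import random

def join_shortest(lanes):
    # Index of a shortest lane; min() returns the first one, so ties go to the lowest index.
    return min(range(len(lanes)), key=lambda i: lanes[i])

def fill_lanes(num_lanes, arrivals, chooser):
    """Send `arrivals` shoppers to the checkout one at a time (no one is served),
    letting `chooser` pick each shopper's lane. Returns the final lane lengths."""
    lanes = [0] * num_lanes
    for _ in range(arrivals):
        lanes[chooser(lanes)] += 1
    return lanes

rng = random.Random(42)
shortest = fill_lanes(5, 103, join_shortest)
randomly = fill_lanes(5, 103, lambda lanes: rng.randrange(len(lanes)))
print("shortest-line choosers:", shortest)  # [21, 21, 21, 20, 20]
print("random choosers:       ", randomly)
```

The random allocation will usually be more uneven, though its exact numbers depend on the seed. Letting shoppers leave as they are served would loosen the at-most-one bound, but the shortest-line rule should still pull the lanes toward equal length, which is the equilibrium the passage describes.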

“Every” really meaning every. To put the idea in simpler terms with a more limited use case: “If fraud can happen, it will already have happened.”

Incentive compensation plans can now include dark pattern threshold levels to demonstrate mastery and reward or punish as desired. When such techniques are turned against employees, er, human resources, er, whatever they may be called at the moment, then another barrier has fallen. Doesn’t that barrier and pattern schema seem like some kind of video game or LARP, leading once again toward the observation that we are all living in the matrix?

Radical thought exercise: have companies provide a Ten Commandments-like score, UL-approved or whatever, for products and services. How well does that tax preparer company, for example, do on the Decalogue, with those strict injunctions? Maybe their board could use some refreshers via being voted out and the stock sold off.

Yes, that LinkedIn address harvesting one was truly evil. It caught me and I am usually very careful to check for this kind of thing (but adding an e-mail address is such a normal activity, I didn’t look carefully enough). Getting LinkedIn to forget the information again was a massive pain, and even now I’m pretty sure that it’s just pretending to have forgotten. Needless to say, I read LinkedIn disclaimers and security/privacy warnings like a hawk now.

I wonder if bait-and-switch is also an example of a dark pattern. Here I am thinking of when Microsoft was trying to force people to switch to their new Windows 10. For decades, if you saw an ‘X’ in the top right corner of a window, clicking on it meant ‘close this window’. However, in a total reversal of this decades-old practice, when users were faced with a window asking if they wanted to completely change their operating system to Windows 10, clicking on the ‘X’ meant ‘Oh yeah, I’ve gotta get me some of that Windows 10. Start installing it straight away!’

That is, exactly, a dark pattern.

I’m not sure it fits exactly into the study’s taxonomy, though. It’s a form of visual interference that depends on your priors for “affordances” (“close box does this”), but I don’t know if that’s anchoring or not.

I don’t know if it rises to the level of a ‘dark pattern,’ but the Weather Channel recently started refusing to give me the Maps function (e.g., radar) because my “browser no longer supports” the maps function. Everything else on the site works fine for me. I am also getting an increasing portion of my cross links from websites refused because the site “does not use a secure protocol.” All of this because I still use the old “reliable” Windows XP operating system to browse the web. (I have a hard time switching over to Linux. So far, no version I have tried has ‘stuck.’ Such is the sad fate of a techno luddite.) Those ghouls at Windows have been trying to force us into their “new improved” corral for several years now.

Something related from ‘meatspace’ is the burgeoning practice of stores promoting app-only specials. Even if I were to tote around an iPhone, why would I want to clutter up my device with data-stealing applications? I had originally thought that this was a function of ‘exclusivity,’ since the first case of this I encountered was at Target stores. Now it is going ‘mainstream.’

Commenters have mentioned the practice of utilizing “burner” e-mail addresses. I can see the near future spawning a movement promoting the widespread use of “burner identities.”

Windows 10 is far, far superior to Linux. If you really want to play with Linux, you can now install Linux inside Windows as a native Linux subsystem.

The problem with Windows is that it is clearly turning evil: MS Office is now a yearly subscription, something I object to. Hence I recommend switching to Google Office for casual users and LibreOffice for power users.

For kids I recommend Chromebook. Nothing gets close in ease of use and value for money.

Chromebook was made by Alphabet (née Google). Nothing tracks your activity better. And use CCleaner often (every chance you get).

Don’t bother with MS Office; use LibreOffice or OpenOffice (free with donation) instead.

Windows 10 may be superior to Linux for certain applications, but not for what I do. I know it’s a superior snooping and data farming system for Microsoft.

“burner identities”

There was a digital only comic released a few years ago (very mobile/tablet friendly) called “Private Eye.”

It takes place in the near-ish future with this premise:

All of this personal data that we put in the cloud. What if the cloud bursts?

Since all of our personal data has been breached and out in the open, people wear disguises in real life to avoid any connections to their leaked data.

In your words, “burner identities.” :-)

You can actually get it here. For free (Name your own price. So free, if you so choose)

I think that’s a mixture of behaviors. The cross links are complaining that your URL protocol is “http,” not “https.” HTTPS is, in fact, a good thing. It may be that the Weather Channel is using browser technology that your old browser really does not support (not Flash, but like Flash, say).

On the app-only specials, very possibly. At a minimum they want to steal your data.

Since XP has gone out of support however many times, there have been several serious and theoretical vulnerabilities in SSL/TLS and underlying components that mandated changes in or deactivation of older protocols, or reissuance of server certificates. For example, older hashing algorithms like MD5 are relatively easy to spoof as hardware has gotten faster and cheaper (look up Logjam for one practical attack), so MD5 signatures on certificates are no longer considered secure, with almost all such certificates having been replaced by new ones with stronger signatures. An older browser’s error messages might not consider the possibility that the intersection between best-practice secure protocols/algorithms and your browser’s secure protocols/algorithms = {} because best practices are a lap ahead of your browser/OS cryptographic software.
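The “intersection = {}” point can be shown with plain sets. In the sketch below the protocol sets are illustrative assumptions, roughly an XP-era browser versus a server that has dropped older protocols; a real TLS handshake also has to agree on cipher suites and certificate signature algorithms, which this ignores.

```python
# Illustrative protocol sets (assumptions, not measurements of any real product).
OLD_BROWSER = {"SSLv3", "TLSv1.0"}
MODERN_SERVER = {"TLSv1.2", "TLSv1.3"}

# Highest-preference protocol first.
PREFERENCE = ("TLSv1.3", "TLSv1.2", "TLSv1.1", "TLSv1.0", "SSLv3")

def negotiate(client, server, preference=PREFERENCE):
    """Pick the best protocol both sides support, or None if they share none."""
    common = client & server
    for protocol in preference:
        if protocol in common:
            return protocol
    return None  # empty intersection: the connection fails, whatever the error message says

print(negotiate(OLD_BROWSER, MODERN_SERVER))                # None
print(negotiate({"TLSv1.0", "TLSv1.2"}, MODERN_SERVER))     # TLSv1.2
```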

It’s “forced enrollment” of a sort, and “hard to cancel” thanks to various deliberate “algorithmic” biases toward https URLs and the pride of place that online interaction has taken in the mainstream. As a matter of data security, it’s not a dark pattern. But when the existence of the certificate cartel and their ability to digitally sign money into existence are factored in, the intent is a bit less clear.

Windows 7 actually doesn’t suck that bad. The vast majority of useful software still runs on it and the interface is fairly similar to what you’re used to, yet the crypto is relatively up to date. It’s what I run on my development test machines.

You would do well to avoid reading comments which tell you Windows Version Whatever is better than GNU/Linux. Unless you must use Windows/Mac lock-in software such as Photoshop you are far better off with Linux. Try Linux Mint – extremely easy to install and a pleasure to use.

Similarly, unless you love Google spyware you should avoid Chrome and the browsers built on it (Opera, Brave, etc.) and stick to Firefox. And use DuckDuckGo as your search engine (something all NC readers already know).

A hearty thanks to one and all for the mini-tutorials. This old dog is presently eyeing new tricks.

My latest Android phone requires audio to be enabled to take photos. If it’s disabled, every time you take a photo it will ask you, “allow phone to enable audio?” Which just means every time you take a photo, it’s also capturing audio, which is creepy.

It’s impossible to opt out.

Are you sure it’s capturing? In South Korea and possibly other jurisdictions, permanently enabled shutter sounds are required by law or industry agreement. Carriers and manufacturers associated with these jurisdictions (e.g. Sprint, Samsung) might not bother with a disable switch, even for export to markets where the misfeature isn’t required.

If there is a dark pattern in it, maybe “hidden subscription” qualifies. Sprint, in particular, did seem to support the attempt to have this misfeature encoded in US law, and requires it on their approved hardware regardless.

Wow, I just read the industry critique. Don’t read it if you don’t want your blood pressure raised. I’ve never seen so much bad faith argument in one place.

What’s wrong with starting with a general statement of principle, then refining it into specific cases later on (via amendments if needed) as relevant cases become apparent? If it’s the first time this has been done, naturally there is going to be some ambiguity. Is that a reason not to do it? Should everything be precisely defined on the first try? Or would you then critique the definitions as being inconsistent with reality?

Sounds pretty simple to me. An authenticated user is somebody with an identifier and a password or other secret-based authentication mechanism, to whom online activity can be attributed. Think of your users table (logically, if it’s not implemented as a single physical table). Does it have more than 100 million rows? If so, then the Act applies to you.* Next question!

* If you have finessed the definition of ‘users table’ such that one or more individuals are excluded even though you have the ability to reliably identify them and attribute online activity to them, smack yourself firmly in the head and return to step 1.
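The commenter’s test can be sketched in Python, reading the bill’s “more than 100 million authenticated users of an online service in any 30 day period” literally. Assumptions: activity arrives as hypothetical `(user_id, date)` login events, an “authenticated user” is anyone with such an event (the commenter’s reading, not a definition from the bill), and the FTC-jurisdiction condition is left out. Both function names are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta

def max_users_in_any_window(events, days=30):
    """events: iterable of (user_id, date) pairs. Returns the largest number of
    distinct users active in any window of `days` consecutive days."""
    users_by_day = defaultdict(set)
    for user_id, day in events:
        users_by_day[day].add(user_id)
    active_days = sorted(users_by_day)
    best = 0
    # A busiest window can always be slid forward to start on an active day,
    # so only those start dates need checking.
    for start in active_days:
        end = start + timedelta(days=days)  # window covers [start, end)
        users = set()
        for day in active_days:
            if start <= day < end:
                users |= users_by_day[day]
        best = max(best, len(users))
    return best

def is_large_online_operator(events, threshold=100_000_000):
    """True if any 30-day window has more than `threshold` distinct users."""
    return max_users_in_any_window(events) > threshold

events = [
    ("alice", date(2019, 4, 1)),
    ("bob", date(2019, 4, 15)),
    ("alice", date(2019, 4, 20)),
    ("carol", date(2019, 5, 10)),
]
print(max_users_in_any_window(events))  # 3: the window starting April 15 catches all three
print(is_large_online_operator(events, threshold=2))  # True
```

Counting rows in a users table, as the commenter suggests, gives an upper bound on this number (every active user has a row); the windowed count is closer to the bill’s wording. At real scale the counting would be done in the database rather than in Python sets.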

Come on, Will. Seriously? You’re suggesting that Gmail users, with addresses and passwords, should be considered unauthenticated because they haven’t created a profile? See note above (smack yourself in the head and return to step 1).

Why would they all have to be in the United States? Where in the act does it say that? It does say “subject to the jurisdiction of the FTC” and quotes a line/act reference. I think we can consider the FTC competent to figure that one out.

How about, oh, I don’t know – adding a disclaimer to the top of the page saying “this is a study” and a link to the experiment design? If it’s so hard, how come every university in the world manages to comply? Or if you are continuously and covertly collecting data from every page in the site as input to a behavioral experiment, are you really arguing that the Act should allow that?

The arguments presented are so woefully weak that I half suspect the author of pulling a Br’er Rabbit briar patch trick. Perhaps the Act ought to go even further.

Oops, I pasted the wrong quote for the second one. It should have been:

Thanks for this comment. Yes, I was pretty mild.

Not exactly the topic of this article, but related:

Home and Auto insurance companies (and others) offer attractive initial rates to get you to switch.

Then, each renewal year they begin increasing rates well above the inflation rate and adding additional coverage you didn’t ask for, often in expensive bundles that include things you might want. The contract is often 50-100+ pages of dense legalese, almost indecipherable. Sure, they offer replacement cost for home contents and other expensive coverage, but can you actually collect it? READ THE FINE PRINT (if you can…)

I carefully read the 90 page contract for a vacation cancellation policy for a 5-day Florida Gulf Coast holiday offered by a condo rental company. The $90 policy was encouraged and selected by default. I could see no way that I could actually collect on the policy for a weather event, and damage claims were stacked in their favor. I didn’t buy the policy, although our vacation was almost interrupted by a hurricane!

Colluding with one another, the home insurance companies have increased the deductible to at least $1000, and the fine print will state that it’s for each event, but they will treat related events as single events. Yes, they keep ratcheting up the premium every year.

News sites and their drive for ad revenue via click-throughs is my personal favorite. SFGate (the San Francisco Chronicle’s “free” site) sets up the “continue reading” button right below an ad… and if you click too fast on the continue button, it “reads” that click as a click on the ad. It’s not, in any way, a click on the ad, but it is set up to drive “clicks” on the ad. Drives me nuts!

Amazon must be the king of the (dung)hill amongst e-tailers when it comes to using dark patterns. Just a few examples off the top of my head:

o On login, one has no choice along the lines of “display my orders page on login” – one always has to look at “Recommendations for you”;

o Bury negative feedback;

o Shove a Prime-membership signup window in non-Prime users’ faces at every opportunity. The one at checkout even requires the user to click a shaming “No, I don’t want fast, free shipping ’cause I’m a frickin’ freedom-hating moron” button in order to get past;

o Nudge users toward Prime even harder by reclassifying lots of items as “Prime only”;

o Offer free shipping for carts totaling $25 or more, but make it really, really hard to get by gerrymandering the item-eligibility for same and tweaking prices so users always seem to come up just short unless they buy at least one more item. Note that an e-commerce platform makes it easy (as in, inevitable) to fiddle prices on the fly: the AI sees that you’ve got $X worth of free-ship-eligible stuff in your basket, leaving you $Y short of $25. Presto! Any item that would normally price at or slightly above $Y is now real-time repriced to $Y-0.01, forcing you to buy another item.

o Even when you do manage to assemble $25 or more of free-ship-eligible items and you have a long history of taking advantage of such, the default at checkout time is *always* the standard fee-for-shipping. This is often preceded by the above Prime-in-your-face screen, for double the fun.

o For folks who choose a 30-day free trial of Prime, there is no e-mail reminder that the 30 days are about to end and that one is about to start getting charged.
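The repricing trick described in the free-shipping bullet above is simple arithmetic, which is part of what makes it plausible. The sketch below models only the rule as the commenter describes it; it is hypothetical and says nothing about how Amazon actually prices anything. The one-dollar `margin` standing in for “slightly above” the shortfall is an assumption.

```python
from decimal import Decimal

FREE_SHIPPING_MINIMUM = Decimal("25.00")  # threshold cited in the comment

def reprice_to_fall_short(cart_total, price, margin=Decimal("1.00")):
    """Hypothetical rule: an item priced at or slightly above the cart's shortfall
    is repriced to one cent under it, so adding it still leaves the cart short."""
    shortfall = FREE_SHIPPING_MINIMUM - cart_total
    if shortfall <= 0:
        return price  # cart already qualifies; nothing to nudge
    if shortfall <= price <= shortfall + margin:
        return shortfall - Decimal("0.01")
    return price

cart = Decimal("21.50")  # $3.50 short of free shipping
new_price = reprice_to_fall_short(cart, Decimal("3.79"))
print(new_price, cart + new_price)  # 3.49 24.99: still one cent short
```

`Decimal` is used instead of floats so the cent arithmetic is exact.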

It’s well nigh impossible to remove your credit card info from their site. I don’t use Amazon anymore for that and many other reasons.

More Dark Patterns: Car Lease law in California.

If you pay the lease ahead of time, and crash the car, you do not get the money back. I carefully examined the relevant Cali lease law and discovered that this case is not called out in the statutes. The other cases are called out and this just happens to fall through a crack between the other (less injurious) statutes.