By Lambert Strether of Corrente

I first wrote about robot cars back in 2016, in “Self-Driving Cars: How Badly Is the Technology Hyped?” (“Spoiler alert: Pretty badly”). Back then shady operators like Musk (Tesla), Kalanick (Uber), Zimmer (Lyft), and even the CEO of Ford were predicting Level 5 autonomous vehicles — I prefer “robot cars,” because why give these people the satisfaction — in five years. That’s in 2021, which is [allow me to check a date calculator] 42 days from now. So how’s that going?

Well, let’s go back to “Level 5.” What does that mean? Robot cars are (or are to be) classified with five levels, at least according to the Society of Automotive Engineers (SAE), the group that also classified the viscosity of your lubricating oils[1]. Here is an explanation of the levels in lay terms (including the SAE’s chart) from Dr. Steve Shladover of Berkeley’s Partners for Advanced Transportation Technology. I’ve helpfully underlined the portions relevant to this post:

[Driver Assistance]: So systems at Level 1 [like cruise control] are widely available on cars today.

[Partial Automation]: Level 2 systems are available on a few high-end cars; they’ll do automatic steering in the lane on a well-marked limited access highway and they’ll do car following. So they’ll follow the speed of the car in front of them. The driver still has to be monitoring the environment for any hazards or for any failures of the system and be prepared to take over immediately.

[Conditional Automation]: Level 3 is now where the technology builds in a few seconds of margin so that the driver may not have to take over immediately but maybe within, say, 5 seconds after a failure has occurred….

That level is somewhat controversial in the industry because there’s real doubt about whether it’s practical for a driver to shift their attention from the other thing that they’re doing to come back to the driving task under what’s potentially an emergency condition.

[High Automation]: [Level 4] it has multiple layers of capability, and it could allow the driver to, for example, fall asleep while driving on the highway for a long distance trip…. So you’re going up and down I-5 from one end of a state to the other, you could potentially catch up on your sleep as long as you’re still on I-5. But if you’re going to get off I-5 then you would have to get re-engaged as you get towards your destination

[Full Automation]: Level 5 is where you get to the automated taxi that can pick you up from any origin or take you to any destination or they could reposition a shared vehicle. If you’re in a car sharing mode of operation, you want to reposition a vehicle to where somebody needs it. That needs Level 5.

Level 5 is really, really hard.

As you can see, humans are in the loop up until level 5, albeit with decreasing levels of what I believe an airline pilot would call authority. However, the idea behind the SAE’s levels seems to be that human beings are static: that they will not adapt to a situation where a robot has authority by disengaging themselves from it.

The insurance industry, alive to the possibility that it may, one day, need to insure self-driving cars[2], has been studying how humans actually “drive” robot cars, as opposed to how Silicon Valley software engineers and Founders think they ought to, and has come up with some results that should be disquieting for the industry. The Insurance Institute for Highway Safety has produced a study, “Disengagement from driving when using automation during a 4-week field trial” (helpfully thrown over the transom by alert reader dk). From the introduction, the goal of the study:

The current study assessed how driver disengagement, defined as visual-manual interaction with electronics or removal of hands from the wheel, differed as drivers became more accustomed to partial automation over a 4-week trial.

And the results:

The longer drivers used partial automation, the more likely they were to become disengaged by taking their hands off the wheel, using a cellphone, or interacting with in-vehicle electronics. Results associated with use of the two ACC systems diverged, with drivers in the S90 exhibiting less disengagement with use of ACC compared with manual driving, and those in the Evoque exhibiting more.

The study is Level 2 — that’s where we are after however much hype and however many billions, Level 2 — and even Level 2 introduces what the authors refer to as “the irony[2] of automation”:

The current study focuses on partial driving automation (henceforth “partial automation”) and one of its subcomponents, adaptive cruise control (ACC). Partial automation combines ACC and lane-centering functions to automate vehicle speed, time headway, and lateral position. Despite the separate or combined control provided by ACC or lane centering, the driver is fully responsible for the driving task when using the automation (Society of Automotive Engineers, 2018). These systems are designed for convenience rather than hazard avoidance, and they cannot successfully navigate all road features (e.g., difficulty negotiating lane splits); consequently, the driver must be prepared to assume manual control at any moment. Thus, when using automation, the driver has an added responsibility of monitoring it. This added task results in what Bainbridge (1983) describes as a basic irony of automation: while it removes the operator from the control loop, because of system limitations, the operator must monitor the automation; monitoring, however, is a task that humans fail at often (e.g., Mackworth, 1948, Warm, Dember, & Hancock, 2009; Weiner & Curry, 1980).

To compound this irony, ACC and partial automation function more reliably, and drivers’ level of comfort using the technology is greater in, free-flowing traffic on limited-access freeways than more complex scenarios such as heavy stop-and-go traffic or winding, curvy roads (Kidd & Reagan, 2019; Reagan, Cicchino, & Kidd, 2020).

And the policy implications:

One of the most challenging research needs is to determine the net effect of existing implementations of automation on crash risk. These systems are designed to provide continuous support for normal driving conditions, and they exist in tandem with crash avoidance systems that have been proven to reduce the types of crashes for which they were designed (Cicchino, 2017, 2018a, 2018b, 2019a, 2019b). There is support from field operational tests that the automated speed and headway provided by ACC may confer safety benefits beyond those provided by existing front crash prevention (e.g., Kessler et al., 2012), and this work exists alongside findings that suggest drivers remain more engaged when using ACC (Young & Stanton, 1997) relative to lane centering. In contrast, the current field test data and recent analyses of insurance claims are unclear about the safety benefits of continuous lane-centering systems extending beyond that identified for existing crash avoidance technologies (Highway Loss Data Institute [HLDI], 2017, 2019a, 2019b). Investigations of fatal crashes of vehicles with partial driving automation all indicate the role of inattention and suggest that accurate benefits estimation for partial automation will have to account for disbenefits introduced by complacency. This study provides support for the need for a more comprehensive consideration of factors such as changes in the odds of nondriving-related activities and hands-on-wheel behaviors when estimating safety benefits.

Shorter: We don’t know how even Level 2 robot cars net out in terms of safety[2]. That means that insurance company actuaries can’t know how to insure them, or their owners/drivers.

The obvious technical fix is to force the driver to pay attention. From the Insurance Institute for Highway Safety, “Automated systems need stronger safeguards to keep drivers focused on the road” (and there’s your irony, right there; an “automated system” that also demands constant human “focus”; if a robot car were an elevator, you’d have to be monitoring the elevator floor indicator lights, prepared at all times to goose the Up button if the elevator slowed, or even stopped between floors (or, to be fair, the Down button)). From the article:

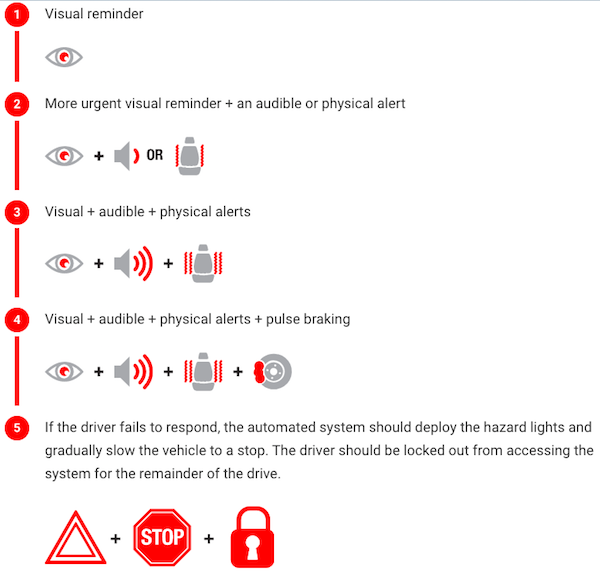

When the driver monitoring system detects that the driver’s focus has wandered, that should trigger a series of escalating attention reminders. The first warning should be a brief visual reminder. If the driver doesn’t quickly respond, the system should rapidly add an audible or physical alert, such as seat vibration, and a more urgent visual message.

They provide a handy chart of the “escalating attention reminders”:

(It’s not clear to me how the robot car would “deploy the hazard lights and gradually slow the vehicle to a stop.” “Gradually slow” is doing some heavy lifting. Does the robot car stop in the middle of a highway? Does it pull over to the shoulder? What if there is no shoulder? What if the road is slippery with ice or snow? Etc. Sounds to me like they have to solve Level 5 to make Level 2 work.) I’m not sure what those “physical alerts” should be. Cattle prods?

Anyhow, I’m not a driver, but trying to imagine how a driver would feel, I’ve got to say that being placed in a situation where I have no authority, yet must remain alert at all times reminds me forcibly of this famous closing scene:

Granted, my robot car, having removed my authority, will demand constant attention so I can seize my authority back in a situation it can’t handle[3], so my situation would not be identical to Alex’s; perhaps I went a little over the top. Nevertheless, that doesn’t seem like a pleasurable driving experience. In fact, it seems like a recipe for constant low-grade stress. And where’s the “convenience” of a robot car if I can’t multitask? Wouldn’t it be simpler if I just drove the car myself?

NOTES

[1] “Heavy on the thirty-weight, Mom!”

[2] I’m not sure Silicon Valley is big on irony. They would, I suppose, purchase legislation that would solve the insurance problem by having the government take on the risks of this supposedly great technical advance.

[3] “Seize” because an emergency would be sudden. I would have to transition from non-engagement to engagement instantly. That doesn’t seem to be a thing humans do well either.

Readers, can you see the images in this post? Thank you.

Yes. On first load I couldn’t. But after refreshing I can. Revisiting the page even with a different browser works. So likely a short-term glitch.

Yes, seems to load OK.

I was noticing the goofball web page load failure stuff, but I recently configured Firefox to use DNSSEC and the problems disappeared. I’m not sure if this is related, but I’ll put it out as a data point.

And I do recommend that everybody reconfigure their browser to use DNSSEC.

Do these vehicles make the user acknowledge a EULA yet?

I’d be surprised if that’s not common in a few years.

Railroad locomotives, which operate on fixed routes (much like geofencing) and have advanced signaling (Positive Train Control), demand the engineer’s engagement on a continuous basis. Most trains also have a second person, a conductor, in the locomotive. While failures occur due to equipment or human factors, they are thankfully rare.

One constant canard that overcompensated railroad executives bring up is the “threat” of driverless platooned trucks to the rail industry. This, they claim, will require executives to impose one-man or unmanned trains (which carry the equivalent of 400 tractor trailers).

PTC was to be fully operational in 2015. It is not finished yet. And it will be a long time before it is fully debugged.

The notion of Level 5 cars or trucks is complete rubbish. Thankfully insurers are drawing some lines in the sand.

Seems that insurance companies are the world’s canary in the coal mine. Perhaps because, oh I don’t know, they have a financial stake in their assessments? So you have a bunch of corporations and people saying that sea level rises are not real. And when the insurance companies realize how much they are on the hook for with coastal properties, they start doing their own research.

And the same here. Silicon Valley is known for its, ahem, exuberance with its proclamations. Need it be said that ‘vaporware’ is a term that came out of Silicon Valley? Self-driving cars are indeed hard. I would say that you either have cars driven by humans or you have cars that pick you up and do everything for you while you catch a nap. But cars like the latter need extensive infrastructure built to support them – so long as somebody else pays for it all of course.

Anything else in-between is a gray foggy zone of dubious standards, legal minefields, dodgy judgements and enormous speculation. Unfortunately that is as far as the present state of the art can go. To make it worse, places like America are being deliberately gutted from the inside so that the number of potential customers for these very expensive cars is steadily decreasing. In short, self-driving cars are a solution in search of a problem.

Another issue that I think insurance companies are going to spotlight is how much it will cost to repair cars that are stuffed with all the sensors needed to make these things into full-on robot cars. All those sensors and cameras are going to add to the final bill; not just in parts cost, but the labor that will go into calibrating them.

Also, have any of the companies that are trying to build a level 5 tech vehicle offered any figures as to how much these things are going to cost?

Excellent article. Thank you for the link to the “disengagement from driving” study.

The whole idea of robot cars is as nuts as The Singularity. Not wishing to offend anyone, but cars are intellectually inferior to us. They were designed to be operated and monitored by an “engaged” human being. The “engaged” human, formerly known as the driver, was intended to hold the steering wheel, deal with the brakes and the gas pedal, and push buttons or turn dials as needed. This isn’t hard and it keeps the driver engaged with the machine and its destination. Also, the tired eyes of the driver focus at infinity, or at least long distance, giving them a welcome respite from a more recent time-saving invention, the computer.

Human beings and cars go together like human beings and dogs. You cannot take the humans out of the equation.

PS yes, I could see the graphics!

Think about it: any move that one can conceive in less than 5 seconds is something that comes from “Type 1” unconscious reasoning. When a driver taps on the brakes, the foot presses the brake pedal and then the conscious part of our mind becomes aware of it! It is precisely the kind of reasoning modern machine learning is becoming good at. This is why I believe that level 3 is going to happen quite soon. Going to level 5 requires strategic planning that is more akin to “Type 2” general intelligence. Unless the environment is very controlled (like highways reserved for autonomous vehicles), that is not going to happen soon.

Let’s be frank about it: most car buyers are not interested in level 4 and 5. All they want is a level 3 system that doesn’t re-engage them too often but engages them 100% of the time it is needed. That kind of negative knowledge is something that can be reached incrementally and, more crucially, can be quantitatively assessed: causal machine learning that processes counterfactuals can produce statistics on where autonomous driving disengaged when it should not have and where it stayed engaged when it should not have. I would say that a mature system should aim for the first error to occur once in 10^2 or 10^3 miles, and the second error once in 10^8 or 10^9 miles (10^-8 being the fatality rate per vehicle mile of human drivers, and assuming conservatively that any second error would lead to a fatality if it were not cured by human agency). To have a good estimate of the second error rate, a rule of thumb would be that we would have to collect around 100 events, so we would need 10^10 to 10^11 vehicle miles. With 10^5 miles per year per vehicle, that is a goal that is reachable in a year with a fleet of 10^5 to 10^6 vehicles, which will soon be the order of magnitude of the Tesla worldwide fleet. This is why level 3 FSD seems achievable.
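For anyone who wants to check the back-of-the-envelope numbers in this comment, here is a minimal Python sketch. The function names are made up for illustration, the 10^5 miles per vehicle per year figure is simply the one assumed above (not a measured value), and the relative-error line assumes the rare events behave like independent (Poisson) counts, which is an added assumption rather than something stated in the comment.

```python
import math


def miles_needed(events: int, miles_per_event: float) -> float:
    """Total miles to drive in order to observe `events` rare errors on average."""
    return events * miles_per_event


def fleet_size(total_miles: float, miles_per_vehicle_year: float, years: float = 1.0) -> float:
    """Number of vehicles needed to log `total_miles` within `years`."""
    return total_miles / (miles_per_vehicle_year * years)


def relative_error(events: int) -> float:
    """Relative standard error of a rate estimated from a Poisson count (assumption)."""
    return 1.0 / math.sqrt(events)


EVENTS = 100                    # rule-of-thumb sample size from the comment
MILES_PER_VEHICLE_YEAR = 1e5    # figure assumed in the comment, not a measured value

for miles_per_event in (1e8, 1e9):   # target rate for the "second error"
    total = miles_needed(EVENTS, miles_per_event)
    fleet = fleet_size(total, MILES_PER_VEHICLE_YEAR)
    print(f"one error per {miles_per_event:.0e} miles: "
          f"{total:.0e} miles needed, fleet of {fleet:.0e} vehicles for one year")

print(f"relative error with {EVENTS} events: {relative_error(EVENTS):.0%}")
```

Changing `MILES_PER_VEHICLE_YEAR` shows how sensitive the required fleet size is to that one assumption: a tenfold lower annual mileage means a tenfold larger fleet (or ten times as long).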

Once this is achieved, one could consider a driving license that is focused only on tackling the second error. Such a licensing process would make heavy use of driving simulators because it would be focused on exceptional situations (like a tree falling on the road, a truck losing its load, or the brakes failing downhill).

Actually, I expect that autonomous vehicle manufacturers will be leaders on this: one could imagine that they make video-game-like software that would pop up emergency situations at random times on a PC or tablet screen and give you 5 seconds to react, similar to the random fitness apps that ask you to perform a workout from time to time.

Such software would be sufficient to manage robotaxi fleets. If a taxi needs manual intervention, say, only a dozen times a day, 10 remote human operators could manage a fleet in the 100s. It would be a tough and critical job, like being an air traffic controller, which would need to be heavily regulated.

>. . . any move that one can conceive in less than 5 seconds is something that comes from “Type 1” unconscious reasoning. When a driver taps on the brakes, the foot presses the brake pedal and then the conscious part of our mind becomes aware of it!

AI is delving into the realm of instinct, with shitty drivers only looking a couple of seconds ahead.

Are we to believe an amalgam of Chinesium wiring harnesses, motors and sensors hooked to the insides of a car body, controlled by a driving algorithm cooked up by Silly Con Valley is the future of transportation?

The Glass Cage by Nicholas Carr is a pretty good book about this phenomenon.

Pretty much. That is essentially the bottom line. This whole quest for level 5 automation is comically stupid. What a waste of resources.

It is indeed simpler to drive the car yourself. Driver aids with minor technological lift are helpful though. Blind spot warnings, lane drift monitors, and backup cameras are all decent safety additions that still require the driver to keep their hands on the wheel and eyes on the road.

I agree to a point but my concern is that driver aids become crutches that worsen the driver’s skills, judgement etc. They are designed, after all, to replace skills which I was taught in my driving lessons (blind spot checking, road positioning, reverse parallel parking). On the other hand, a blind spot warning for those who find it difficult to turn their neck due to age or infirmity is undeniably useful and beneficial to the safety of all road users.

The other one I like is adaptive cruise control (and, heck, cruise control itself) but generally speaking I strongly dislike cars with the driver assist bells and whistles; I hate not feeling like I’m in total control of the car at all times. (Thankfully they can be turned off.)

Robot Finance hasn’t worked out too well. Robot Auto not looking much better.

And it might be useful to ask: Why is there such a demanding and insatiable need to go to and fro? Possible answer: The installation of Auto into the human consciousness by the $$$$ chasers.

I dislike driving, and have managed to avoid doing it for some years now.

Because of that, I might conceivably be interested in a car which required me to do nothing at all: I just get in, give it a destination and off we go. The equivalent of having a personal chauffeur, in fact. But the idea of actually paying for a car which, left to itself, drives less well than I do, and so turns me into a kind of driving instructor or watchful parent, is ludicrous.

Good that you caught on to the actual situation: that the human has to train, interact with, learn with, tutor, teach, learn from, the robot in order to give it the ‘social’ space and platforming needed to learn to operate the car correctly. My years of working customer service boil down to that discovery with the added task (huge one in fact) of being patient enough to train the customers as well as the technology. Throw in the task of teaching management and fellow workers about my daily task (as well as endeavor to keep myself up to speed) and I was very happy to retire, lo, this half a decade ago.

I guess a general take away is that:

Every new level of technology, whether it be desktops, fax machines, or cell phones, requires that the people using it acquire new skill sets so as to be able to successfully maneuver the human/machine interface into giving desired outcomes.

Given the current level of mismanagement at levels 1 & 2 with automobiles, reaching level 5 will be similar to building robots that interface with humans like Data from the Star Trek series.

I’d say, offhand, we’re about five hundred years away from that level of machine intelligence.

Not mentioned: what we could call Level 0: automatic transmissions. I feel a lot more like dozing off on long straight flat highways in an automatic transmission car than in a manual on curvy hilly back roads with lots of stops. Just shifting keeps me more alert and engaged.

Outlaw automatic transmission! And while we’re at it, eliminate automatic turn-signal shutoff! And outlaw seat belts and cushions on dash boards and steering wheels. Get rid of cushions on seats too.

The more dangerous and uncomfortable driving is or is perceived to be, the more alert drivers will be.

I wish I had learned to drive with a stick. It does seem like you’d feel as if you were in more control.

You may not remember, but Silicon Valley was in a little slump when someone hatched the idea of blending the fun of DARPA’s robot car challenge in the Mojave Desert with the grind of the Bay Area’s traffic jams. Voila! A new gimmick to fund jobs for the next decade or two. The people behind this should be congratulated. They’ve created employment for thousands of programmers who might otherwise be programming military drones.

Humans are really lousy at driving. But each individual human thinks they are great at driving.

There are almost 40,000 deaths per year from auto accidents. In the top 3 of all unintentional injury deaths in the USA.

Automation has to just do better than that. Particularly for Actuaries.

Brute force machine learning capability is still advancing at an accelerating rate and there is no end in sight. Assuming no collapse of civilization, ML-based automated driving will be statistically better than humans within a decade.

Humans will at first hate it and make big stinks and then accept it totally. Look, we have accepted 40k driving deaths a year for over half a century.

I think it’s a bit more complicated than just being safer than humans on average… If it’s your husband or wife who gets decapitated by a robot car, especially in a situation that any competent driver would have avoided, whether or not robot cars are safer on average is cold comfort, and a huge liability for the manufacturer. It may not be entirely logical, but I think that’s the standard people will expect: that they won’t kill people in situations a human could have theoretically avoided.

Some of this tech will undoubtedly make driving safer, and I’m all for those helpful features (co-pilot features if you will). But a lot could be done right now to prevent all the road fatalities that doesn’t involve a massive technological solution. IMO, safety is not the primary reason level 5 robots are being pursued. I also tend to think anything that contributes to the persistence of cars as the central transportation in society is a net loss for culture and the environment.

So why not make features like blind spot monitoring, backup cameras/sensors, and forward collision systems mandatory? There are now even fancier systems, like 360-degree panoramic cameras.

Re: Footnote #1: Man, you are OLD!