Given how much is at stake for both the beneficiaries themselves and the various governmental bodies that fund public pensions, one would think transparent and forthright accounting for returns would be of paramount importance. But instead, public pension funds engage in all sorts of fudging, including to justify staff’s existence. Just like active fund managers, public pension fund investment offices, with very few exceptions, do not generate enough improved performance over simple public market index strategies to justify their existence.

A new paper by Richard Ennis, “Cost, Performance, and Benchmark Bias of Public Pension Funds in the United States: An Unflattering Portrait” (embedded at the end of this post), documents widespread underperformance. While that may not be news to many of you, Ennis also shows that public pension funds mask this sorry state of affairs by employing special “custom” benchmarks, the rationale being that these measures allegedly better measure staff performance. The wee problem is the terrible incentives. The very same outside hired guns who create those benchmarks are also the people who helped devise the investment strategies in the first place. So they need to make their cooking look good. And they also know that making their clients look good is key to having their contracts renewed. The not-surprising result is pervasive camouflaging of lagging results.

For instance, recall that we publicized years ago that one group out of Stanford developed a five Vanguard fund strategy that beat the performance of 90% of public pension funds. And institutional investors like these giant retirement funds can presumably buy index fund products at even lower fee levels than Vanguard’s retail products. In other words, nearly all public pension funds would come out ahead if they fired their investment staffs, save a few people to buy the best index products.

Ennis’ latest paper is if anything more damning than it appears. He used data from 24 state-level public pension funds. The sample was limited to 24 because every fund had to use the same fiscal year end (June 30) and report performance net of costs (Ennis said 1/3 fell short on that criterion). These state funds will generally be larger and more professionally managed than smaller pension funds, particularly police and fire pension funds. In other words, the level of underperformance would almost certainly be greater if smaller funds were included. It’s also a reasonable suspicion that bigger funds that did not report returns net of fees felt the need to exaggerate their performance.

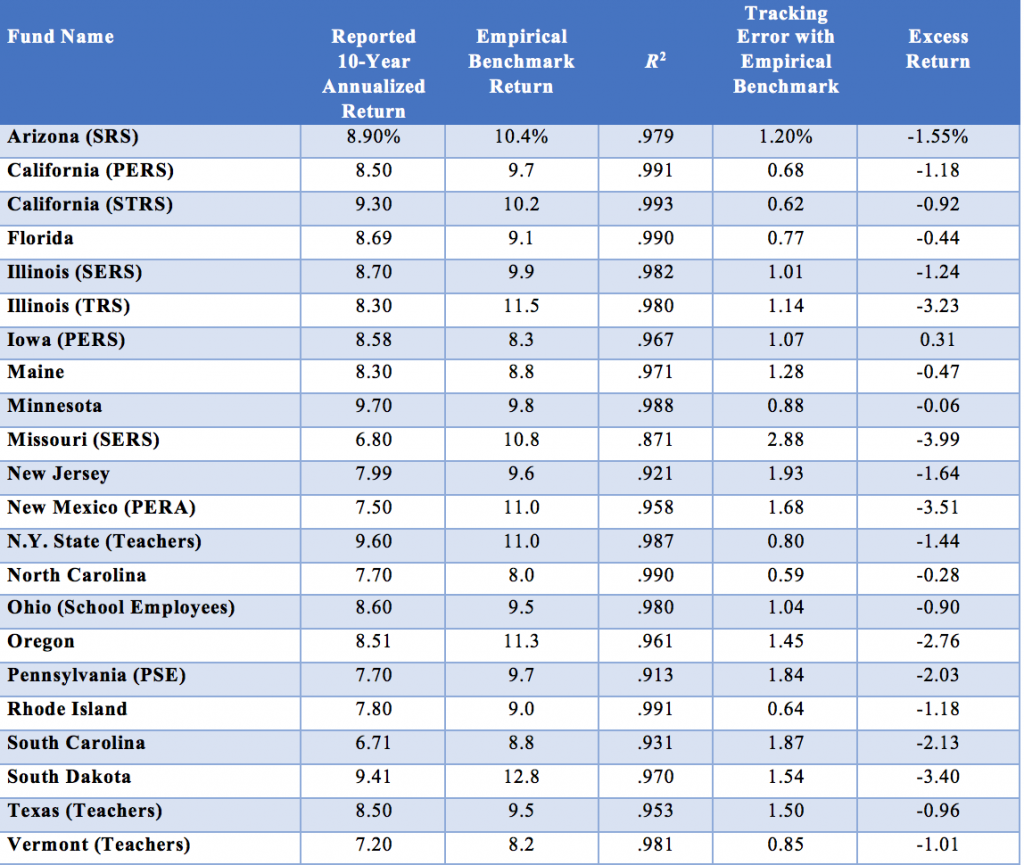

You can see Ennis’ conclusions in data form below. Negative excess returns mean the funds would have done better with simple-minded indexing.

Note again his assumptions about investment expenses are conservative. Ennis shows in Table 1 the estimated cost of investing in various alternative investment strategies. Private equity is listed at 5.7%. CalPERS is continuing to use 7% as its estimate, per board member remarks at its last public meetings.
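
To put those two cost estimates in dollar terms, here is a minimal sketch of the arithmetic. The 5.7% and 7% rates come from the paragraph above; the $1 billion allocation and the function name are hypothetical, chosen only for illustration.

```python
def annual_cost_dollars(allocation, cost_bps):
    """Yearly cost of an allocation, with the cost rate given in basis points."""
    return allocation * cost_bps / 10_000


# Hypothetical allocation size, used only to make the rates concrete.
allocation = 1_000_000_000  # $1 billion in private equity

ennis_cost = annual_cost_dollars(allocation, 570)    # Ennis' 5.7% estimate
calpers_cost = annual_cost_dollars(allocation, 700)  # CalPERS' 7% estimate

print(f"At 5.7%: ${ennis_cost:,.0f} a year")
print(f"At 7.0%: ${calpers_cost:,.0f} a year")
print(f"Gap:     ${calpers_cost - ennis_cost:,.0f} a year")
```

On these assumptions, each $1 billion allocated costs $57 million a year at Ennis' rate and $70 million a year at the rate CalPERS itself uses, which is why his estimate counts as the conservative one.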

Ennis also documents how public pension funds lie to themselves, beneficiaries, taxpayers, and the press via flattering benchmarks. He explains how all-too-cooperative advisers feather their own beds:

Public pension funds use benchmarks of their own devising, describing them variously as “policy,” “custom,” “strategic,” or “composite” benchmarks. I refer to them as reporting benchmarks (RBs). …RBs are often opaque and difficult to replicate independently. RBs invariably include one or more active investment return series and thus are not passively investable. They are subjective in several respects, rendering their fashioning something of a black art. Moreover, they are devised by the funds’ staff and consultants, the same parties that are responsible for recommending investment strategy, selecting managers, and implementing the investment program. In other words, the benchmarkers have conflicting interests, acting as player as well as scorekeeper. To state the obvious, perhaps, RBs generally do not measure up to the standards of objectively determined, passively investable benchmarks used by scholars and serious practitioner-researchers.

Public fund portfolios often exhibit close year-to-year tracking with their RB. This results in part from how RBs are revised over time…

No doubt the benchmarkers see such tweaking as a way of legitimizing the benchmark so that it better aligns with the actual market, asset class, and factor exposures of the fund. It accomplishes that, to be sure. But it also reduces the value of the benchmark as a performance gauge, because the more a benchmark is tailored to fit the process being measured, the less information it can provide. At some point, it ceases to be a measuring stick altogether and becomes merely a shadow.

We talk about “hugging the benchmark” in portfolio management. Here is another twist on that theme: forcing the benchmark to hug the portfolio.

Ennis uses CalPERS as a case study because its behavior is typical:

CalPERS’s portfolio return tracks that of the RB extraordinarily closely. The 10-year annualized returns differ by all of 3 bps, 8.54% versus 8.51%. Year to year, the two-return series move in virtual lockstep, as demonstrated by the measures of statistical fit—an R2 of 99.5% and tracking error of just 0.5%—and even by simple visual inspection of the annual return differences. For example, excluding fiscal years 2012 and 2013, the annual return deviations from the RB are no greater than 0.4%. This is a skintight fit.

CalPERS’s EB [empirical benchmark] return series also has a close statistical fit with CalPERS’s reported returns in terms of R2 and tracking error, although not as snug a fit as with the RB. Moreover, there is an important difference in the level of returns. Whereas CalPERS’s 10-year annualized return is virtually identical to that of its RB, it underperforms the EB by 114 bps a year. And it does so with remarkable consistency: in 10 years out of 10.

The return shortfall relative to the EB is statistically significant, with a t-statistic of –2.9. And it is of huge economic significance: A 114 bp shortfall on a $470 billion portfolio is more than $5 billion a year, a sum that would fund a lot of pensions.
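
For readers who want to see how figures like these are produced, here is a minimal sketch. The $470 billion and 114 bps inputs come from the passage above. The two annual return series are hypothetical placeholders, not CalPERS data, and the paired t-statistic on annual differences is one simple way to test a shortfall, not necessarily the method Ennis used.

```python
import math
import statistics


def shortfall_dollars(portfolio_value, shortfall_bps):
    """Annual dollar cost of a return shortfall stated in basis points."""
    return portfolio_value * shortfall_bps / 10_000


def return_differences(fund, benchmark):
    """Year-by-year fund return minus benchmark return."""
    return [f - b for f, b in zip(fund, benchmark)]


def tracking_error(fund, benchmark):
    """Sample standard deviation of the annual return differences."""
    return statistics.stdev(return_differences(fund, benchmark))


def t_statistic(fund, benchmark):
    """Mean annual difference divided by its standard error."""
    diffs = return_differences(fund, benchmark)
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error


def r_squared(fund, benchmark):
    """Squared correlation between the two annual return series."""
    mean_f = statistics.mean(fund)
    mean_b = statistics.mean(benchmark)
    cov = sum((f - mean_f) * (b - mean_b) for f, b in zip(fund, benchmark))
    var_f = sum((f - mean_f) ** 2 for f in fund)
    var_b = sum((b - mean_b) ** 2 for b in benchmark)
    return cov * cov / (var_f * var_b)


# Figures quoted in the text: 114 bps a year on a $470 billion portfolio.
cost = shortfall_dollars(470e9, 114)
print(f"Shortfall: ${cost / 1e9:.2f} billion a year")

# Hypothetical 10-year series, for illustration only (not CalPERS data).
fund = [0.010, 0.125, 0.180, 0.024, 0.006, 0.112, 0.086, 0.067, 0.047, 0.213]
bench = [0.020, 0.140, 0.188, 0.035, 0.019, 0.120, 0.099, 0.080, 0.055, 0.226]

print(f"Tracking error: {tracking_error(fund, bench):.4f}")
print(f"R-squared:      {r_squared(fund, bench):.4f}")
print(f"t-statistic:    {t_statistic(fund, bench):.2f}")
```

The first calculation gives about $5.36 billion, consistent with the “more than $5 billion a year” in the quote. The other three outputs depend entirely on the made-up series and are there only to show how the measures are calculated.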

In other words, the CalPERS example strongly suggests that the point of these highly customized benchmarks is to hide underperformance. Oh, and to allow staff to collect performance bonuses that they mainly don’t deserve (“mainly” because a portfolio might occasionally beat bona fide market performance by enough that its staff really have earned a reward).1

Benchmark gaming is far from the only way that public pension funds regularly mislead their so-called stakeholders. Over time, even more significant has been the use of overly high assumed return rates. These allow the funds to assume higher future investment earnings than they can attain. That results in the funds not requiring high enough annual contributions to provide for adequate funding. Mind you, not every defined benefit fund is significantly underwater. But most are. Deliberately adopting this “kick the can down the road” strategy has only dug their hole deeper. But given the long-term horizon of public pension funds, the folks in a position of authority have good reason to think they can get away with “IBG-YBG” just as Wall Street execs have for far too long.

_______

1 Ben Meng pushed for CalPERS to pay investment office performance bonuses on total fund performance, not portfolio results. That seemed bizarre since someone working, say, in real estate, would have no impact on the results of the public equity portfolio.

Cost, Performance, and Benchmark Bias of Public Pension Funds in the United States: An Unflattering Portrait

This is outside my area of expertise, but everything I’ve been taught about long-term investments amounts to KISS: use your financial muscle to buy up a range of relatively safe investments where your staff have personal knowledge and expertise, put a very small percentage (small enough that you can afford to lose) into high-risk, high-return hedging-type investments, and keep liquidity as low as needed. Adding complexity will not increase returns; it simply provides an opening to hide incompetence and corruption.

And complex oversized staff bonuses are an invitation for staff to game their investments for personal advancement. Better to pay them well, and encourage everyone to go home at 5.30pm.

> Better to pay them well, and encourage everyone to go home at 5.30pm.

4:30pm or, better, 4:00pm.

Thank you, Gentlemen.

Just to add to PK’s final sentence in the first paragraph, size does not increase returns, either. A decade ago, I went to see some analysts at Schroders to discuss the impact of structural reform, Liikanen, Vickers and Volcker, on, amongst others, the members of my trade association employer. The pair explained figures that showed balance sheets above USD100 billion begin to increase risks, including from corruption and incompetence, and diminish returns, especially from fines. There is / was a lot of support on the buy side and even in banks for structural reform and treating banks as utilities.

Work from home, put the money in index funds, check Yahoo Finance once a day to make sure nothing has happened, and don’t mess with things.

That is an important factor: not making a mess of things. Doing what you are supposed to do, and not investing in things that sound too good to be true, may be good choices.

A private equity or real estate investment incurs base plus incentive fees. The total fees received by the general partner have been estimated to be in the range of 3 to 7% per year. Let’s say 5%. So for every $1B investment, a GP receives $50 million per year, an amount that greases a lot of political wheels.

Performance doesn’t matter. Creating the appearance of decent performance is what matters, which explains why CalPERS hires 80 staff for its office of propaganda (“public affairs”) and why govt pensions pay enormous amounts to outside public relations and legal firms. Whatever it takes to keep the political campaigns well-funded and to use pension assets to do whatever the ruling politicians want.

The system works beautifully for the politicos, govt pension staff, and all the hangers-on. What about the regular Joe and Jane citizen?

I will ask mean, possibly stupid questions; it’s my nature. The public pension funds use flattering benchmarks to hide their failures to beat indexing … but what can the beneficiaries do to ‘chastise’ the mismanagers of their retirement funds? And whither has Government regulation gone?

Where have old-style investments gone: investments that resulted in the production of a useful product, of lasting quality, that grew sales at an ordinary, not exponential, rate, earned a reasonable profit, paid dividends based on true earnings and made products that held their own in the marketplace for similar goods? What are investors investing in now, whether through indexing or not? There seems to be a lot of sizzle, but very little beef in our steaks. Where will this all end?

It’s pretty self-evident that these benchmarks are designed to goose staff bonuses and renew consultant contracts. Meanwhile, the underperformance is exacerbated by gifting fees to grifters.

Follow the money…

For CalPERS and other government pensions, we can throw away the journal articles that describe ideal criteria for benchmark selection.

CalPERS has mastered its two criteria.

One, the benchmark selected must be easy to beat, so staff can collect max bonus no matter how bad returns are.

Two, the benchmark must be custom and complex, so it’s impossible to understand.

CalPERS board, staff, and consultants all share a common goal: create the illusion that they know what they are doing, even though readers of NC know otherwise.

What are we supposed to do? Who can we speak to? Union representatives? Do these organizations have public meetings? Is anyone organizing to demand a return to traditional, passive investments with low fees? Information on any grassroots movements to address this would be very helpful for future articles! I’m at a loss. It’s hard to even find information like this when researching this topic.