Yves here. While this article on the acquisition of human language is well worth reading, I am not sure about the proposition that only humans have syntax, in the sense of being able to assemble communication units in a way that conveys more complex meanings.

Consider the crow, estimated to have the intelligence of a seven- or eight-year-old. There are many studies of crows telling each other about specific people, as demonstrated when a masked experimenter catches a crow (which crows really do not like) and is then hectored by crows that were not party to the original offending conduct:

So how does one crow tell another crow that the person with a particular face is a bad person? Perhaps one crow sees another crow scold that person. But particular masks elicit consistent crow catcalls for many years after the offending behavior. It still might be a matter of very good memory plus observation of their peers. But the detail from this study suggests a more complex mechanism:

To test his [Professor John Marzluff of the University of Washington’s] theory, two researchers, each wearing an identical “dangerous” mask, trapped, banded and released 7 to 15 birds at five different sites near Seattle.

To determine the impact of the capture on the crow population, over the next five years, observations were made about the birds’ behaviour by people walking a designated route that included a trapping site.

These observers either wore a so-called neutral mask or one of the “dangerous” masks worn during the initial trapping event.

Within the first two weeks after trapping, an average of 26 per cent of crows encountered scolded the person wearing the dangerous mask.

Scolding, says Marzluff, is a harsh alarm kaw directed repeatedly at the threatening person accompanied by agitated wing and tail flicking. It is often accompanied by mobbing, where more than one crow jointly scolds.

After 1.25 years, 30.4 per cent of crows encountered by people wearing the dangerous mask scolded consistently, while that figure more than doubled to around 66 per cent almost three years after the initial trapping.

Marzluff says the area over which the awareness of the threat had spread also grew significantly during the study. Significantly, during the same timeframe, there was no change in the rate of scolding towards the person wearing the neutral mask.

He says their work shows the knowledge of the threat is passed on between peers and from parent to child….

Marzluff says he had thought the memory of the threat would lose its potency, but instead was “increasing in strength now five years later”.

“They hadn’t seen me for a year with the mask on and when I walked out of the office they immediately scolded me,” he says.

In another example (I can’t readily find it in the archives) a city in Canada that was bedeviled by crows decided to schedule a huge cull. They called in hunters. They expected to kill thousands, even more.

They only got one. The rest of the crows immediately started flying higher than gun range.

Again, how did the crows convey that information to each other, and so quickly too?

The extent of corvid vocalization could support more complex messaging. From A Murder of Crows:

Ravens can in fact produce an amazing variety of sounds. Not only can they hum, sing, and utter human words: they have been recorded duplicating the noise of anti-avalanche explosions, down to the “Three…Two… One” of the human technician. These talents of mimicry reflect the general braininess of corvids – which is so high that by most standards of animal assessment, it’s off the scale.

By Tom Neuburger. Originally published at God’s Spies

While we wait for news — or not — from the Democratic convention, I offer this, part of our “Dawn of Everything” series of discussions. Enjoy.

Adam names the animals

Oh! Blessed rage for order, pale Ramon,

The maker’s rage to order words of the sea,

Words of the fragrant portals, dimly-starred,

And of ourselves and of our origins

—Wallace Stevens, “The Idea of Order at Key West”

We’re headed for prehistoric times, I’m sure of it, and as a result, human prehistory has been a focus of mine for quite a few years.

What were our Stone Age lives like? And who lived them? After all, “we” might be just Homo sapiens, maybe 200,000 years old; or “we” might be broader, including our contemporary cousins, Homo neanderthalensis, or even the ancient, long-lived Homo erectus. Erectus had very good tools and perhaps fire. Neanderthals were much like us (we interbred), though evidence suggests, while they probably had some kind of language, we were far smarter.

One of the bigger questions is the one David Graeber and David Wengrow took on in their book The Dawn of Everything: Was it inevitable that the myriad Stone Age cultures would resolve into the single predatory mess we’re now saddled with?

After all, it’s our predatory masters, the Kochs, the Adelsons, the Pritzkers, the Geffens and their friends, whose mandatory greed (which most of us applaud, by the way) has landed us where we are, mounting the crest of our last great ride down the hill.

What’s the Origin of Syntax?

One set of mysteries regarding our ancient ancestors involves their language. How did it emerge? How did it grow? If the direction of modern languages is to become simpler (in English, the loss of “whom”; in French, the loss of “ne”; the millions of shortenings and mergings all languages endure), how did the complication we call syntax first come about?

The most famous theory is the one by, yes, Noam Chomsky, that humans are born with a “universal grammar” mapped out in our brains, and learning our first language applies that prebuilt facility to what we hear. His argument: No child could learn, from the “poverty of the stimulus” (his or her caregiver’s phrases), all the complexity of any actual language.

There’s, of course, much mulling and arguing over this topic, especially since it’s so theoretical.

Something that’s not theoretical, though, is this: a group of experiments showing that syntax evolves, from little to quite complex, all on its own, as a natural byproduct of each generation’s attempt to learn from the one before.

The process is fascinating and demonstrable. The authors of this work have done computer simulations, and they’ve worked with people as well. The results seem miraculous: like putting chemicals into a jar, then thirty days later, finding a butterfly.

The Iterated Learning Model

I’ll explain the experiments here, then append a video that’s more complete. (There are others. Search for Simon Kirby or Kenny Smith.) The root idea is simple. They start where Chomsky starts, with the “poverty of the stimulus,” the incomplete exposure every child gets to his or her first language. Then they simulate learning.

Let’s start with some principles:

- All language changes, year after year, generation to generation. The process will never stop. It’s how we get from Chaucer to Shakespeare to you.

- Holistic language vs. compositional language: That’s jargon for a language made of utterances that cannot be divided into parts (holistic), versus one made of utterances that can (compositional). For example, “abracadabra” means “let there be magic,” yet no part of that word means any part of its meaning. It’s entirely holistic. The whole word means the idea; it has no parts. “John walked home,” on the other hand, is compositional; it’s made up of parts that each contain part of the idea. (Note that the word “walked” is compositional as well: “walk” plus “ed”.)
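A toy contrast in Python may help. Everything in it is hypothetical (the two features and the syllables are invented for illustration, not taken from the experiments): a holistic lexicon is a lookup table that has nothing to offer for a meaning it never stored, while a compositional one assembles a name from parts, so it can label a combination nobody taught it.

```python
import itertools

# Hypothetical parts: one syllable per feature value (invented for illustration).
PARTS = {
    "color": {"blue": "ki", "red": "mu", "black": "na"},
    "shape": {"circle": "po", "square": "re", "triangle": "lo"},
}


def holistic_name(meaning, lexicon):
    # A holistic word is an unanalysable whole: either it was memorised or there is no name.
    return lexicon.get(meaning)


def compositional_name(meaning):
    # A compositional word is assembled piece by piece, one part per feature.
    color, shape = meaning
    return PARTS["color"][color] + PARTS["shape"][shape]


all_meanings = list(itertools.product(PARTS["color"], PARTS["shape"]))  # 9 meanings
# Pretend only the first 5 meanings were ever heard, each with an arbitrary word.
memorised = {m: f"word{i}" for i, m in enumerate(all_meanings[:5])}

unseen = ("black", "triangle")
print(holistic_name(unseen, memorised))  # None: never memorised
print(compositional_name(unseen))        # nalo: built from known parts
```

Six stored parts cover all nine meanings here; add a third three-valued feature and nine parts would cover twenty-seven, whereas a holistic lexicon needs one entry per meaning. That gap is plausibly why compositional structure survives an incomplete learning bottleneck more easily.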

This matters for two reasons. First, the closest our monkey cousins get to a language is a set of lip smacks, grunts, calls and alerts that each have a meaning, but can’t be deconstructed or assembled. If this is the ultimate source of our great gift, it’s a truly holistic one. No part of a chimp hoot or groan means any part of the message. The sound is a single message.

Because of this fact (the holistic nature of “monkey talk”), our researchers seeded their experiment with a made-up, random and entirely holistic language. Then they taught this language to successive generations of learners, both human and simulated, with each learner teaching the next as the language evolved.

Remember, the question we’re interested in is: How did syntax start? Who turned the holistic grunts of the monkeys we were into the subtle order of our first real languages?

The answer: Nobody did.

The Experiments

All of the experiments are pretty much alike; they just vary in tweaked parameters. Each goes like this:

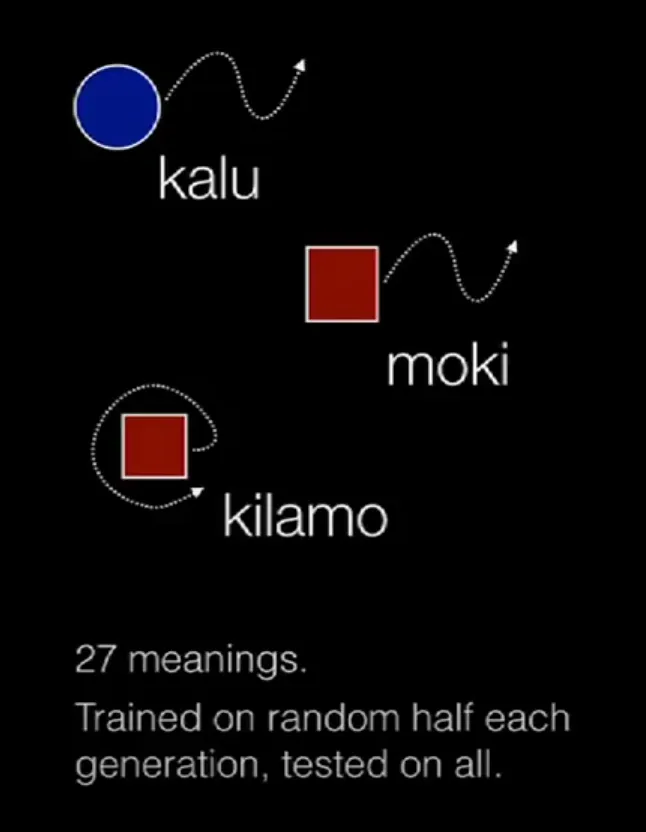

Step one. Create a small artificial, holistic language made up of nonsense words, where each word “means” a small drawing. In this case, each drawing has three elements: a shape, a color and a motion. Here are a few:

Since each “meaning” (symbolic drawing) has a color, a shape and a motion, and since there are three colors (blue, red, black), three shapes (circle, square, triangle), and three motions (straight, wavy, looping), there are 27 ideas (symbols) in the language and thus 27 words. Again, the words are randomly assigned.
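The count of 27 is just 3 × 3 × 3. A minimal Python sketch of step one enumerates that meaning space and gives every meaning an unrelated random word. The syllable inventory (loosely echoing sample words like “wimaku” and “miniki”) and the two-to-four-syllable word length are assumptions, not the experimenters’ actual materials.

```python
import itertools
import random

COLORS = ["blue", "red", "black"]
SHAPES = ["circle", "square", "triangle"]
MOTIONS = ["straight", "wavy", "looping"]
# Hypothetical syllable inventory; the real experiments used their own nonsense words.
SYLLABLES = ["wi", "ma", "ku", "mi", "ni", "ki", "ge", "po", "la"]


def meaning_space():
    """Every combination of one color, one shape and one motion: 3 * 3 * 3 = 27."""
    return list(itertools.product(COLORS, SHAPES, MOTIONS))


def holistic_language(seed=0):
    """Give each meaning an unrelated random word of 2 to 4 syllables, with no repeats."""
    rng = random.Random(seed)
    language, used = {}, set()
    for meaning in meaning_space():
        while True:
            word = "".join(rng.choice(SYLLABLES) for _ in range(rng.randint(2, 4)))
            if word not in used:  # re-draw so no two meanings share a word
                break
        used.add(word)
        language[meaning] = word
    return language


for meaning, word in list(holistic_language().items())[:3]:
    print(word, "->", meaning)
```

Because each word is drawn independently, no syllable carries any consistent piece of meaning; that is the holistic starting point the chain has to work from.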

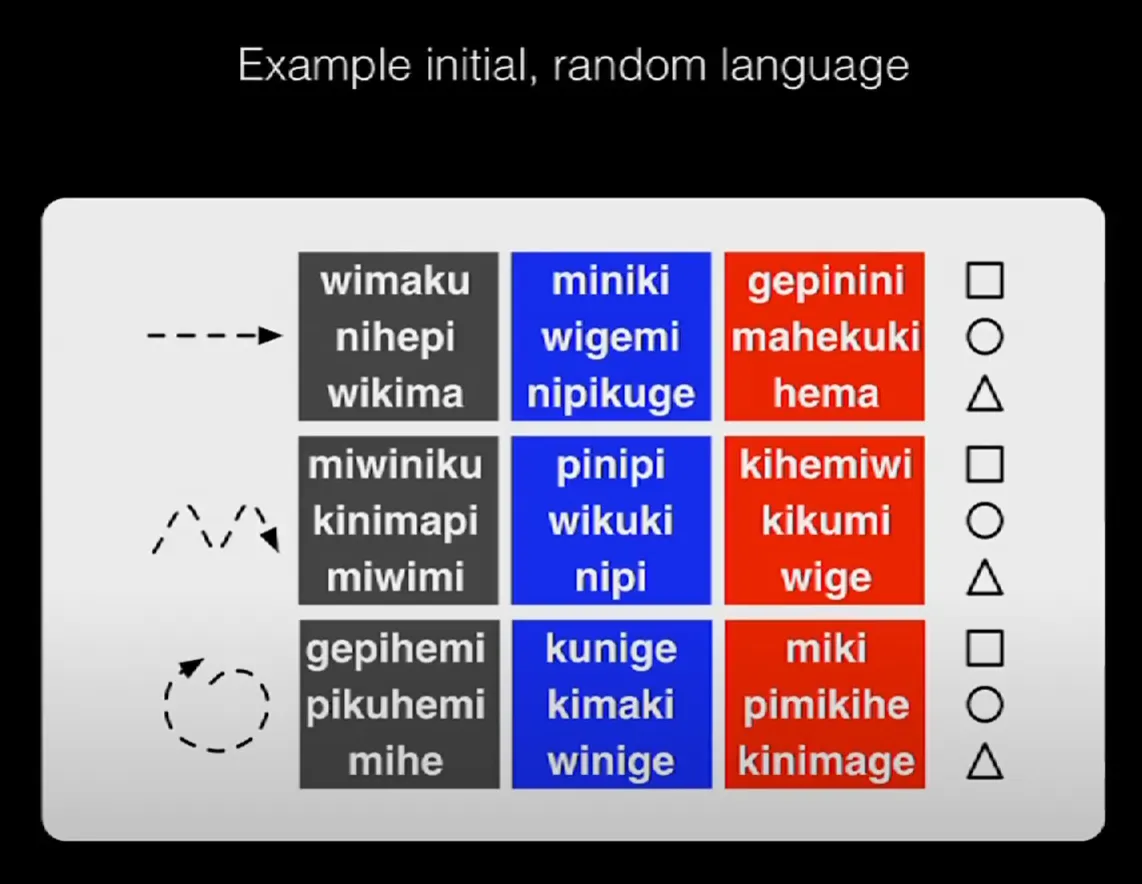

Following this pattern, a 27-word language might look like this:

A 27-word language where each word refers to a colored shape-with-motion. “Wimaku” means “black square with straight motion,” etc.

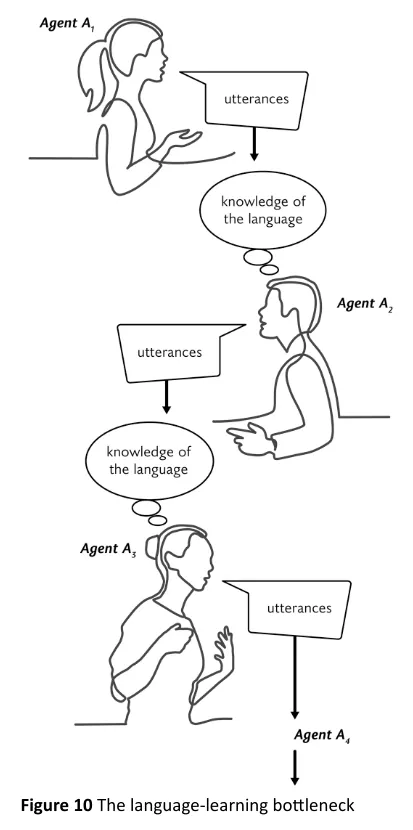

Step two. Teach the first “agent” (A1, the first learner) the whole language.

Step three. Let A1 teach A2, the second learner, just half of the language.

Step four. Test A2 on the whole language. She is shown all of the “meanings” (the symbols) and has to try to guess the names of the ones she doesn’t know.

Step five. Let A2 teach A3, the third learner in the chain, a random half of her language, filtering out duplicated words (words with two “meanings,” i.e., two associated symbols).

Step six. Test A3, as before, on the whole language. Show him all of the symbols and ask him to guess the names he hasn’t yet learned.

Step seven. Repeat the above as often as you like.

From Steven Mithen, The Language Puzzle
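The chain in steps one through seven can be sketched as a toy simulation in Python. This is not the researchers’ code, and it makes strong assumptions of its own: every word is exactly three syllables from a made-up inventory, the bottleneck is 14 of the 27 meanings (roughly half), and, most importantly, the simulated learner is handed a built-in bias to read the first, second and third syllable as carrying color, shape and motion when it has to guess a name it never heard. The homonym filter follows step five. The `compositionality` score is likewise an invented yardstick: for each color, shape or motion, how consistently one syllable shows up in its slot.

```python
import itertools
import random
from collections import Counter, defaultdict

# Hypothetical setup: 3 colors x 3 shapes x 3 motions = 27 meanings.
COLORS = ["blue", "red", "black"]
SHAPES = ["circle", "square", "triangle"]
MOTIONS = ["straight", "wavy", "looping"]
MEANINGS = list(itertools.product(COLORS, SHAPES, MOTIONS))
SYLLABLES = ["ki", "mu", "wa", "ne", "po", "la", "ri", "so", "ta"]  # made-up inventory


def random_language(rng):
    """Holistic starting point: every meaning gets an unrelated three-syllable word."""
    return {m: tuple(rng.choice(SYLLABLES) for _ in range(3)) for m in MEANINGS}


def transmit(language, bottleneck, rng):
    """Teacher drops homonyms (one word, two meanings), then shows a random subset."""
    usage = Counter(language.values())
    clean = [m for m in MEANINGS if usage[language[m]] == 1]
    shown = rng.sample(clean, min(bottleneck, len(clean)))
    return {m: language[m] for m in shown}


def learn(observed, rng):
    """Learner memorises what it saw and guesses names for the rest.

    Assumed bias: syllable slot i is read as encoding feature i (color, shape, motion).
    """
    counts = defaultdict(Counter)
    for meaning, word in observed.items():
        for slot, (value, syllable) in enumerate(zip(meaning, word)):
            counts[(slot, value)][syllable] += 1
    language = dict(observed)
    for meaning in MEANINGS:
        if meaning in language:
            continue
        guess = []
        for slot, value in enumerate(meaning):
            seen = counts[(slot, value)]
            # Reuse the syllable most often paired with this value; invent one if never seen.
            guess.append(seen.most_common(1)[0][0] if seen else rng.choice(SYLLABLES))
        language[meaning] = tuple(guess)
    return language


def compositionality(language):
    """Mean share of the dominant syllable per (slot, value); expects all 27 meanings."""
    scores = []
    for slot, values in enumerate((COLORS, SHAPES, MOTIONS)):
        for value in values:
            syllables = Counter(w[slot] for m, w in language.items() if m[slot] == value)
            scores.append(syllables.most_common(1)[0][1] / sum(syllables.values()))
    return sum(scores) / len(scores)


def run_chain(generations=10, bottleneck=14, seed=0):
    """Seed a holistic language, then repeatedly transmit half of it and let a learner fill gaps."""
    rng = random.Random(seed)
    language = random_language(rng)
    history = [compositionality(language)]
    for _ in range(generations):
        language = learn(transmit(language, bottleneck, rng), rng)
        history.append(compositionality(language))
    return language, history


if __name__ == "__main__":
    final, history = run_chain()
    print([round(h, 2) for h in history])
```

Running it prints the score for each generation. With the slot bias built in, one would expect scores to drift upward as guessed, rule-like words crowd out the memorised random ones, but single seeds vary, so it is worth trying several. Human learners got no such hint about which part of a word should carry which feature, which is what makes the laboratory results more striking than this toy.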

The researchers did this with people and by computer simulation. The beauty of a simulation is that you can iterate the process endlessly if you like (the number of generations from Sumerian writing to now is about 200). You can also vary parameters like population size (how many teachers and learners in each generation), as well as the bottleneck size (does each generation teach half the language, a third of it, or three-fourths?).

The Results

The results were astounding. The bottleneck — each student’s incomplete learning — always creates, over time, a compositional, syntactical language. As Steven Mithen put it in Chapter 8 of his book The Language Puzzle, the work that put me onto this idea:

Although rules [of a language] gradually change over time, just as the meaning and pronunciation of words change, each generation learns the rules used by the previous generation from whom they are learning language. As an English speaker, I learned to put adjectives before nouns from my parents, and they did the same from their parents and so forth back in time. That raises a question central to the language puzzle: how did the rules originate? Were they invented by a clever hominin in the early Stone Age, who has left a very long legacy because their rules have been copied and accidentally modified by every generation of language learners that followed? No, of course not. But what is the alternative?

The answer was discovered during the 1990s: syntax spontaneously emerges from the generation-to-generation language-learning process itself. This surprising and linguistically revolutionary finding was discovered by a new sub-discipline of linguistics that is known as computational evolutionary linguistics. This constructs computer simulation models for how language evolves by using artificial languages and virtual people.

Here’s what that looks like in a lab with people. Look again at the “language” above, the 27 words. At this stage, the words are holistic — “miniki” means “blue square straight” and “wige” means “red triangle wavy.” No part of a word means part of the associated symbol.

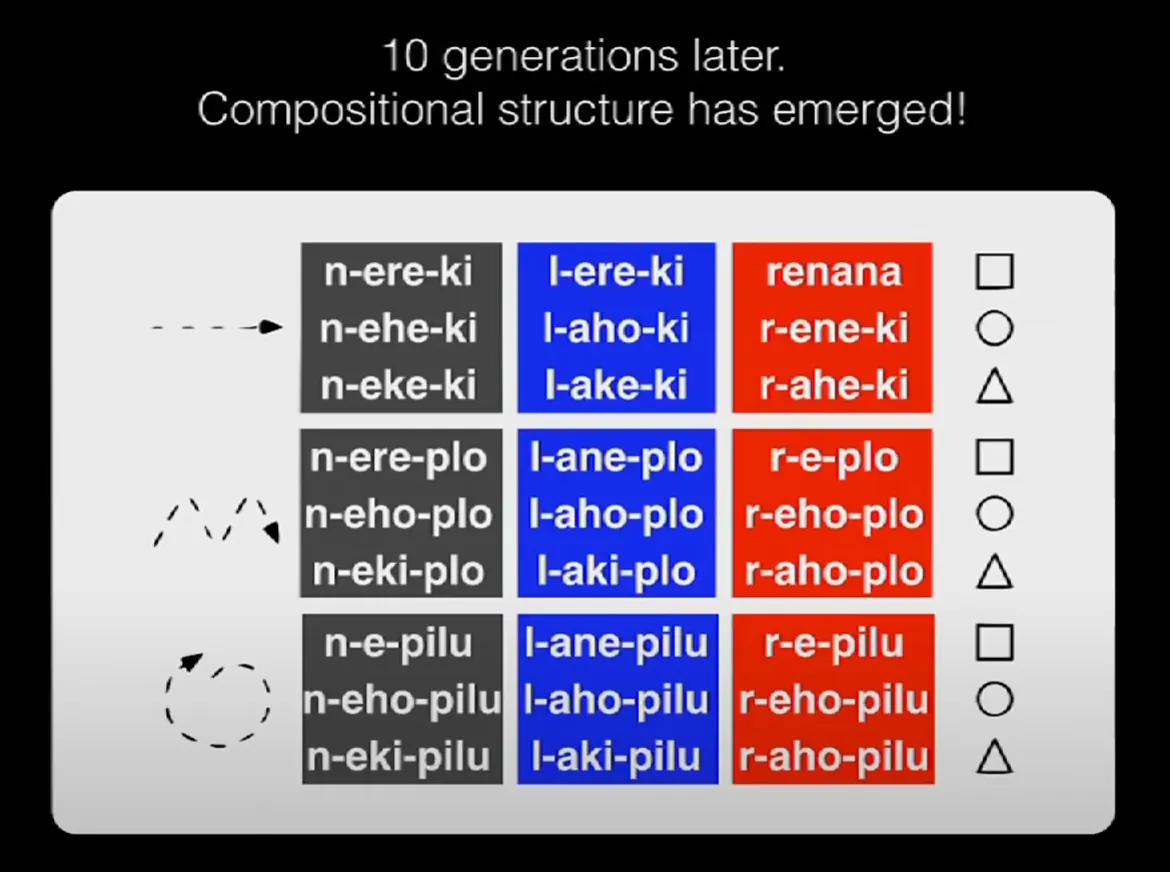

After just ten generations, this is what the language evolved into:

The hyphens were added to this slide for informational purposes; they weren’t part of the actual words. Anything starting with “n” is black in color; “ere” in the middle is starting to mean a square; anything ending in “plo” has a wavy motion.

Ten generations more and this would be smoother. Again, the order, the syntax, its compositional nature, emerges from the process itself, from the iterative act of one generation learning, then teaching, and the next group doing its best to fill in the blanks.

For a video describing these experiments, see below. I’ve cued it to start in the middle, at the point of interest.

I’d be wrong to say there aren’t those who disagree, but this is lab work, not theory, and repeatable, both with SimCity scenarios and actual people.

For me, it answers a question I’ve had almost forever: What first gave languages order? The answer: Speech itself.

though evidence suggests, while they probably had some kind of language, we were far smarter.

maybe just meaner, uniformitarianism ftw… what, after all, are our betters but good at exploitation…

don’t mess with seattle crows… massive murders in the evening towards portage bay/batelle

Ravens are more emotive. A pair on the island has a punk or two each year; they’re reserved and communicate clearly.

apologies for going on, but the ravens talk in clicks and pops, situationally appropriate, so only as loud as necessary. So syntax for sure, and a lot of it is communicating when they can’t see each other.

Pretty sure the weasels talk to each other too, but it’s high pitched, high anxiety. Interestingly, the eagles, owls and, depending on size, hawks are pretty much communicating location, and for owls, marking territory. I’ve had owls come very close when called because they’re like, who the f are you?

I do less of that nowadays because encephalitis is a thing

https://safetyservices.ucdavis.edu/units/occupational-health/surveillance-system/zoonotic-diseases/birds

uc davis by the way, lots of great info there

I suppose this touched a nerve for me as I spend a lot of time listening to birds talking so I could go even further on…

they’re out there right now…

One of the fascinating things about language is just how little we know about it. Child language development has been intensively researched for well over a century, but there is still little scholarly consensus over what is really happening inside the brain as children learn to communicate, or for that matter, what happens when adults learn a second language. There is still no agreement over whether Chomsky is correct about language being embedded in our DNA or whether we are born with a blank language slate. It’s way beyond my reading or understanding of Chomsky’s ideas, but I’ve seen it suggested that a key problem with the acceptance of Chomsky’s theories is that beyond the Wikipedia version (easy enough for anyone to understand), there is an enormous depth of complexity, such that even within linguistics not many people really understand his arguments. Hence the somewhat casual disregard of his work even at quite a high level of academia (there are plenty of high-level linguists who are openly contemptuous of his ideas).

It’s a long time since I’ve read my Chomsky, but from memory, one aspect of his theory is that, contrary to the widespread assumption, syntax and more advanced forms of language did not develop to communicate; they developed to think. In other words, we were ‘thinking’ in language before we were speaking in language. This makes sense in terms of research on animals like crows or dolphins: we assume that by somehow decoding their calls and squeaks we can understand how developed their ‘language’ is, but it’s possible that what is going on in a crow’s brain is far more advanced than they can themselves express, even to other crows. It may only be humans that feel the need to express every damn thought that goes through their minds to whoever happens to be in the room with them. Other animals are more pragmatic.

On a related point, one of Chomsky’s former colleagues, the University of Southern California linguist Stephen Krashen, has carried out a lot of research indicating that language is ‘learned’ in a very different way than, say, maths or history. It is more like an inherent skill that is acquired (not learned) through passive exposure only, i.e., we learn language (first or second) by attaching meaning to the sounds we hear or the text we read, not by actively ‘learning’ syntax or vocabulary or grammar or, for that matter, by speaking or writing. It would certainly make sense that what those crows are doing is constructing in their brains a model of which humans can be trusted or not by associating a wide range of sounds and gestures from their fellow crows, and ‘understanding’ non-syntactical communication while constructing syntax in their brain. Maybe this is why our pets can form a far more sophisticated knowledge of the humans they live with than they are capable of externally communicating.

I’m currently trying to put these theories to the test by learning Korean solely through input (no active learning, just contextual listening and reading). I’ll let everyone know in a decade how I got on….

Good luck with that ;-) Like nirvana, I suspect pure passivity will prove a hard state to attain.

As to Chomsky’s theory that children “acquire” language effortlessly, without “learning”, I think it has been the greatest devaluation of child labor since Dickens. Anybody who has watched the tantrums of a toddler frustrated in his ability to express his desires should be moved to compassion.

My favorite take on syntax remains How the Brain Evolved Language (with good short reviews here and here).

Heretical when first published 25 years ago, the theory has weathered quite well, except for its side trips into Sir John Eccles’s theories of the cerebellum. Since then about a dozen cases of cerebellar agenesis have been noted in the literature: people born without a cerebellum, at least several of whom nevertheless learned language and functioned quasi-normally. This consideration actually strengthens the theory’s central emphasis upon rhythmicity.

The relevant finding here is that syntax is nothing more than vertebrate serial behavior. Human syntax is a subvariant elaborated by a vocal tract unique in the animal kingdom for its ability to generate three characteristic variable formants, resonances that are the basis of vowels, seriated by consonants. The nearest analogues to human language and syntax are found among cetaceans and birds, because bipedal rhythm stabilizes learned seriations.

Beyond syntax, the theory has some deep implications for human “thought processes” in general, although nothing particularly useful for achieving (or preventing) thought control over others.

To elaborate: I think Chomsky and Krashen have never said children acquire language effortlessly. What they say (and there is copious evidence to back this up) is that children need to acquire it before they can output it (i.e., speak). Children always have a broader understanding of language that extends beyond their ability to speak. And as they point out, it’s a very strange household where children are actively ‘taught’ to speak their native language in the way we seem to think older children need to be ‘taught’ a second language.

And just to clarify, I don’t think acquiring is effortless. The point of input-based learning is to focus on listening to and reading material that is just beyond your present capacity. By understanding the ‘messages’ (i.e., knowing what the YT video, book, film, etc. is about and what the character is trying to express), the ‘rules’ of the language develop in a subconscious way, just as they do with your first language. A lot of the material for this was developed by a linguist and physicist called J. Marvin Brown. He died a few years ago, and a lot of his writings are available online for free. His autobiography is a fun read; I think it’s available for download from the ALG site.

In reality, people have been ‘learning’ multiple languages for millennia, without access to textbooks, teachers or Duolingo. The question is whether these have improved language learning, or whether they are just a method to make money out of language learning. Krashen suggests it’s the latter (he has lots of amusing anecdotes about this in his many talks you can find online).

As for Chomsky’s actual linguistic theories, it’s often suggested that they have fallen entirely out of favour, even among former Chomskyites. The academic arguments over this are way beyond my pay grade, although so far as I’m aware his deeper theoretical work has come back into favour among those working on computational language models.

I may have misconstrued your sense of the word “passive”. Krashen would be the first to agree that to be a successful learner, child or adult, one must actively use language, ideally in a context where one’s use of the language has practical consequences. Otherwise one does not engage one’s ‘whole brain’: it is not enough to only exercise some discrete ‘language module’. The problem here is that adult language usage has practical consequences beyond the consequences of child language. Few adults will risk such exercise. Even today, the fluency of those who took the risk rarely transcended the narrow practical consequences of pidgins, creoles, and trade-language.

Knowledge of ‘grammar’ can be a boon to (older) second language learners. Here the problem is that even today the ‘official grammars’ are mostly retreads of medieval Latin grammar. These are at best marginally pertinent outside of the Romance languages, and they are only made worse when they are augmented by cybernetic Chomskyan principles.

Have you read any of Steven Mithen’s books, like The Singing Neanderthals or The Language Puzzle, mentioned in the article several times? The knowledge and ideas have indeed moved forward (or at least in some direction) a lot since Chomsky wrote about it.

From me at least, Mithen gets bonus points for being easily approachable while still meticulously academic.

The obvious point, I suppose, is that many languages don’t have “syntax” as we understand it. Classical Latin indicated the relationship between words by changes in suffixes (as many western languages still do), although medieval Latin did absorb some syntactical elements from vernacular languages spoken at the time. African languages use a complex system of prefixes and suffixes to a root word to indicate function and relationships, and there is some evidence that in fact most early languages were like this. It can be speculated that, as language groups interacted, complex systems of conveying meaning like that were slowly worn away in favour of standardised declensions and ultimately syntax.

That said, the “rules” of syntax are not invariable. In English the adjective generally comes before the noun but not always: in poetic use (eg “darkness visible”) this can change, as in antiquated forms (“Chapel Royal”) where you can see the influence of French. And although we learn in school that in French the adjective comes after the noun, this isn’t always so, and in the time of Montaigne the adjective was frequently put before the noun. Even today, we talk of Napoleon’s “Grande Armée” not “Armée Grande.” In reality, the manipulation of syntax is highly complex and depends on the effect you seek and the customs you are used to.

By the way, the discussion of syntax is confused: the loss of “whom” is grammar, not syntax, and “ne” in French is neither; it’s just the dropping of a word in informal conversation.

What linguists generally don’t quite understand is that language, like dress, is just fashion. In English, the continuity gender (with valences ‘continuous’ and ‘discrete’), which requires quantification adjectives like ‘many’ and ‘much’ to agree with the continuity gender of the noun, is just fashion. And so are genders in all other languages. The same can ultimately be said for all aspects of language.

Chomsky’s saltationist universal grammar (which he was forced to retreat from step by step) was nothing more than an elaboration on grammar-school English sentence diagramming. The genetic change that actually primed humans for language was not a cognitive improvement but nothing other than increased tameness and a tendency to conform.

Chomsky’s grammar was an extrapolation from the Lisp programming language, and Church’s lambda calculus, in which ‘recursion’ was thought to be the ‘elegant’, ‘rule-based’ innovation that would enable Chomsky’s Ivy League MIA-funded machine translation team to crack the code of the Evil Empire.

A study by the team lead (George Miller and Stephen Isard, 1964) somewhat embarrassingly showed that humans don’t use recursion. Chomsky theorized (Aspects of the Theory of Syntax, 1965) that the human mind did use recursion but was somehow innately constrained. Nevertheless, despite their unconstrained Turing machine model, the team never successfully translated a single sentence. The military defunded the project to help fund the Vietnam War. Chomsky became a war protester. Good for him, but he never abandoned the core theory that the human mind was a limited computer.

It’s hard to convince a man when his salary…

As someone coming from more of a mathematical / theoretical comp. sci. background, I’ve never read Chomsky’s papers directly.

Just to double-check though, do you think that Chomsky and the people around him really believed the mind is processing language like a computer parser? Or more like behaviorist psychologists, were the formal models just clarifying and delimiting what happens outside the black box, even if nobody knew for sure what was happening inside?

It’s hard to know what people ‘believe’ when they ‘believe in a theory.’ My erstwhile dissertation advisor was George Miller, and I believe he really, really wanted to believe that the human brain — or at least its ‘language module’ — functioned like a pushdown store automaton.

In the late 1970s, I had to ‘fire’ him and abandon my Chomskyan dissertation topic because neural network models made more sense on the basis of general psychology. But we parted on good terms, and a few years later, he came out with his ‘connectionist’ “WordNet” model. At that point his salary apparently no longer depended upon Harvard-MIT cybernetics.

Unfortunately, WordNet was a crude model, but those were early days.

You ever notice that a phrase in English seems to often be shorter in length than the same phrase in other languages?

Brevity, and all that.

If you think English phrases are short, try Japanese. Even just putting Japanese into a translation app to English (or vice versa), it’s surprising how few words they sometimes use, especially when there is an ‘understood’ context and in non-formal language. In casual conversation, it sometimes seems that they can have an entire interaction with just one word (usually ‘oishii’ or ‘kawaii’).

Did you ever study Latin? That is concision. And classical authors had the habit of dropping words to make their prose even more concise (such as: no need for the verb, after all the accusative of the noun already indicates there is movement so the reader can “easily” supplement the missing words). It also helps to have an extensive set of tenses for conjugating verbs.

Have a look at a bilingual Latin-whatever edition sometime; lines of the Latin text are often double-spaced while the modern language is type-set normally.

Indeed…

The wording on European coins from way back until the last hundred years was primarily in Latin, and they got a lot of bang for the buck, er Thaler.

Sort of. When it comes to spoken language, phrases tend to take the same amount of time, no matter how many recognized “words” within them.

A breath-group is pretty much a breath-group.

Crows might be smart enough to employ syntax but I don’t see anything about the detail from the Marzluff study that would suggest “a more complex mechanism” that gives rise to an inference they might be doing so. They’re passing on the scolding behavior from parent to offspring but that just seems to be the offspring learning the scolding behavior as a response to a conditioned stimulus, i.e., a particular “dangerous” mask. (Basically, the offspring crows are imitating their parents.)

As for the crows who, I’d surmise, were in Chatham, Ontario, my guess is that their “communication,” if it was that, was more like some warning call, accompanied by flying higher, and the birds were immediately negatively reinforced (i.e., they were out of range of the bullets). And that “flying higher” behavior could be generalized behavior from previous encounters with hunters.

As for the syntax question and Tom Neuburger’s conclusions, he asks

And: “What first gave languages order? The answer: Speech itself.”

I’d say, instead, that, in a sense, everybody did and that it wasn’t “speech itself”—it was the verbal community passing on (and learning) the language acting within certain constraints. The conclusions that Simon Kirby at the University of Edinburgh reaches, in part, in the video make that clearer:

• Languages adapt to pass more easily from learner to learner.

• Linguistic structure is the solution that cultural evolution finds to the problem of being learnable.

It’s not, as Noam Chomsky long contended, some innate language faculty but a more specific instance of learning generally. It might be that what is “innate” in humans is a particularly strong susceptibility to reinforcement of their verbal behavior such that that behavior can become enormously complex. That’s very different from a “universal grammar” theorized by Chomsky.

You assume that the crows saw the parents scold. That is a leap. The large rise in scolding behavior, even over long periods where the offensive mask was absent, does not seem adequately explained by direct observation. The fact that Marzluff was surprised says he thinks that the math does not add up.

Classical Latin syntax was subject-object-verb, and the verb had to fill the role of punctuation too. Emphasis and poetic uses would see deviations from the rules, and even Caesar does this with his first line. The 2nd and 3rd lines make it clear he is breaking the rule for the sake of emphasis and leave no confusion that he broke the rule.

Worth emphasizing that these were the written conventions of the elite class, that they were intentionally crafted in order to provide a sense of distinctness and gravitas, and that spoken Vulgar Latin was likely much more fluid in its rules.

Interesting experiment, showing how incomplete transmission of the language led to sounds turning into concepts, which were then combined to form compositional structure. In this case the mechanism worked from incomplete transmission, but I would think that in our evolutionary history the mechanism would work the same way but in reverse. In other words, there wouldn’t have been a fully complete language, able to express everything, that was not passed down in full; rather, language itself was incomplete, and compositional structure emerged as what bits there were got applied to the ever-increasing knowledge base of the population using the language.

“…All language changes, year after year, generation to generation. The process will never stop. It’s how we get from Chaucer to Shakespeare to you…”

This is incorrect.

Written languages change, spoken very little if at all.

All change to spoken languages can be imputed post 18th century. They were very stable over time.

It is also for this reason that you can’t speak a language without an accent, because you learn it from reading.

Let a French person read an English text and you understand instantly how writing bastardizes language.

Children only can speak without accent because they have no access to writing.

Linguists are a fraud overall.

Because if they are able to uncover the past of the language through their phonetic and syntax laws, why not tell us how language will be spoken in the future, say in 200 years, and we will see how accurate their predictions are?

In truth, there is no way to know how people pronounced words in ancient Greece or Rome, unless you have a recording.

The most that can be said about the origins of language is that it started as a metaphor and evolved. Still today, language is but metaphors. Julian Jaynes has the best understanding of this, imho.

And don’t forget Rupert Sheldrake’s morphic resonance which sounds like entanglement across spacetime. He references rats learning certain behaviors in the lab and the simultaneous learning of those behaviors half way around the world. Kinda sounds like the spontaneous permutations of syntax, some due to error which self corrects, is an entangled, emergent thing as well. Self correcting is interesting. Why do iterations cure the error? Due to the laws of nature, no doubt. This research doesn’t seem to include that backdrop of reality to form meaning – I’d say that little trick requires semantics based on real world objects. So that’s just one more thing to watch when it comes to AI. Exponentiating meaningless syntax.

Written languages change, spoken very little if at all.

Hast thou evidence?

Overwhelming evidence would be hard to come by, as one would need recordings of how people spoke languages that aren’t written over vast timeframes.

But I can cite you a case: when a German pilgrim, Arnold von Harff, was traveling to Jerusalem in 1497, he took phonetic transcriptions of words in the countries where he traveled.

One of them was Albania, which didn’t have a written tradition till beginning of 20th century.

A comparison of his phonetic transcripts shows that there was no change in 500 years as to how people pronounced the words.

One can argue that 500 years is a short time and one swallow doesn’t spring make and I agree, but I remain convinced that writing is usually what changes the language and eventually kills it.

All ancient written languages are dead today. Why? (Chinese doesn’t count, as they don’t use letters to write their language.)

When considering the origins of language, it also seems important to consider the role of the human brain, the human larynx (voice box) and the capacity to communicate by signs (which seems eventually to involve putting together sound signs that gradually become intentionally articulated, more a symbol than simply a sign). This ability to articulate significance may also be linked to the evolution of the brain to a mind, which, in turn, seems linked to cultural processes.

It’s kind of funny, in so many areas of life, things go fine as long as the topic of discussion is not my area of professional expertise. But the multispectrum ignorance that I find when something like linguistics or syntax comes up, makes me realize how I must sound when pontificating outside of my wheelhouse. Thanks NC comment section!