This is Naked Capitalism fundraising week. 826 donors have already invested in our efforts to combat corruption and predatory conduct, particularly in the financial realm. Please join us and participate via our donation page, which shows how to give via check, credit card, debit card, PayPal, Clover, or Wise. Read about why we’re doing this fundraiser, what we’ve accomplished in the last year, and our current goal, karōshi prevention.

By Lambert Strether of Corrente.

As readers have understood for some time, AI = BS. (By “AI” I mean “Generative AI,” as in ChatGPT and similar projects based on Large Language Models (LLMs).) What readers may not know is that besides being bullshit on the output side (the hallucinations, the delvish), AI is also bullshit on the input side, in the “prompts” “engineered” to cause the AI to generate that output. And yet we allow, indeed encourage, AI to use enormous and increasing amounts of scarce electric power (not to mention water). It’s almost as if AI is a waste product all the way through!

In this very brief post, I will first demonstrate AI’s enormous power (and water) consumption. Then I will define “prompt engineering,” looking at OpenAI’s technical documentation in some detail. I will then show the similarities between prompt “engineering,” so-called, and the ritual incantations of ancient magicians (though I suppose alchemists would have done as well). I do not mean “ritual incantations” as a metaphor (like Great Runes) but as a fair description of the actual process used. I will conclude by questioning the value of allowing Silicon Valley to make any society-wide capital investment decisions whatever. Now let’s turn to AI power consumption.

AI Power Consumption

From the Wall Street Journal, “Artificial Intelligence’s ‘Insatiable’ Energy Needs Not Sustainable, Arm CEO Says” (ARM being a chip design company):

AI models such as OpenAI’s ChatGPT “are just insatiable in terms of their thirst” for electricity, Haas said in an interview. “The more information they gather, the smarter [sic] they are, but the more information they gather to get smarter, the more power it takes.” Without greater efficiency, “by the end of the decade, AI data centers could consume as much as 20% to 25% of U.S. power requirements. Today that’s probably 4% or less,” he said. “That’s hardly very sustainable, to be honest with you.”

From Forbes, “AI Power Consumption: Rapidly Becoming Mission-Critical“:

Big Tech is spending tens of billions quarterly on AI accelerators, which has led to an exponential increase in power consumption. Over the past few months, multiple forecasts and data points reveal soaring data center electricity demand, and surging power consumption. The rise of generative AI and surging GPU shipments is causing data centers to scale from tens of thousands to 100,000-plus accelerators, shifting the emphasis to power as a mission-critical problem to solve… The [International Energy Agency (IEA)] is projecting global electricity demand from AI, data centers and crypto to rise to 800 TWh in 2026 in its base case scenario, a nearly 75% increase from 460 TWh in 2022.
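As a quick check on the IEA figures quoted above, the arithmetic can be done directly. This is a minimal sketch using only the two numbers in the Forbes quote; the implied annual rate assumes smooth compounding over the four years, which is my simplification for illustration, not something the IEA states.

```python
# Figures taken from the Forbes quote (IEA base case), in terawatt-hours.
demand_2022_twh = 460
demand_2026_twh = 800
years = 2026 - 2022


def percent_increase(start, end):
    """Total percentage increase from start to end."""
    return (end - start) / start * 100


def implied_annual_growth(start, end, years):
    """Constant yearly growth rate (in percent) that would turn start into end.

    Assumes smooth compounding, which is an illustrative simplification.
    """
    return ((end / start) ** (1 / years) - 1) * 100


total = percent_increase(demand_2022_twh, demand_2026_twh)
annual = implied_annual_growth(demand_2022_twh, demand_2026_twh, years)

print(f"Total increase 2022-2026: {total:.1f}%")
print(f"Implied annual growth:    {annual:.1f}%")
```

The total comes out just under 74%, which squares with Forbes's “nearly 75%.”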

From the World Economic Forum:

AI requires significant computing power, and generative AI systems might already use around 33 times more energy to complete a task than task-specific software would.

As these systems gain traction and further develop, training and running the models will drive an exponential increase in the number of data centres needed globally – and associated energy use. This will put increasing pressure on already strained electrical grids.

Training generative AI, in particular, is extremely energy intensive and consumes much more electricity than traditional data-centre activities. As one AI researcher said, ‘When you deploy AI models, you have to have them always on. ChatGPT is never off.’ Overall, the computational power needed for sustaining AI’s growth is doubling roughly every 100 days.

And from the Soufan Center, “The Energy Politics of Artificial Intelligence as Great Power Competition Intensifies“:

Generative AI has emerged as one of the most energy-intensive technologies on the planet, drastically driving up the electricity consumption of data centers and chips…. The U.S. electrical grid is extremely antiquated, with much of the infrastructure built in the 1960s and 1970s. Despite parts of the system being upgraded, the overall aging infrastructure is struggling to meet our electricity demands–AI puts even more pressure on this demand. Thus, the need for a modernized grid powered by efficient and clean energy is more urgent than ever…. [T]he ability to power these systems is now a matter of national security.

Translating, electric power is going to be increasingly scarce, even when (if) we start to modernize the grid. When there’s real “pressure,” and push comes to shove, where do you think the power will go? To your Grandma’s air conditioner in Phoenix, where she’s sweltering at 116°F, or to OpenAI’s data centers and training sets? Especially when “national security” is involved?

AI Prompt “Engineering” Defined and Exemplified

Wikipedia (sorry) defines prompt “engineering” as follows:

Prompt engineering is the process of structuring an instruction that can be interpreted and understood [sic] by a generative AI model. A prompt is natural language text describing the task that an AI should perform: a prompt for a text-to-text language model can be a query such as “what is Fermat’s little theorem?”, a command such as “write a poem about leaves falling”, or a longer statement including context, instructions, and conversation history.

(“[U]nderstood,” of course, implies that the AI can think, which it cannot.) Much depends on how the prompt is written; hence the creation of a market for prompt “engineers.” OpenAI has “shared” technical documentation on this topic: “Prompt engineering.” Here is the opening paragraph:

As you can see, I have helpfully highlighted the weasel words: “Better,” “sometimes,” and “we encourage experimentation” don’t give me any confidence that there’s any actual engineering going on at all. (If we were devising an engineering manual for building, well, one of the atomic power plants Microsoft wants to build to feed this beast, do you think the phrase “we encourage experimentation” would appear in it? Then why would it here?)

Having not defined its central topic, OpenAI then goes on to recommend “Six strategies for getting better results” (whatever “better” might mean). Here’s one:

So, “fewer fabrications” is an acceptable outcome? For whom, exactly? Surgeons? Trial lawyers? Bomb squads? Another:

“Tend” how often? And how much? We don’t really know, do we? Another:

Correct answers not “reliably” but “more reliably”? (Who do these people think they are? Boeing? “Doors not falling off more reliably” is supposed to be exemplary?) And another:

“Representative.” “Comprehensive.” I guess that means keep stoking the model ’til you get the result the boss wants (or the client). And finally:

The mind reels.

The bottom line here is that the prompt engineer doesn’t know how the prompt works, why any given prompt yields the result that it does, doesn’t even know that the prompt works. In fact, the same prompt doesn’t give the same results each time! Stephen Wolfram explains:

[W]hen ChatGPT does something like write an essay what it’s essentially doing is just asking over and over again “given the text so far, what should the next word be?”—and each time adding a word.

Like glorified autocorrect, and we all know how good autocorrect is. More:

But, OK, at each step it gets a list of words with probabilities. But which one should it actually pick to add to the essay (or whatever) that it’s writing? One might think it should be the “highest-ranked” word (i.e. the one to which the highest “probability” was assigned). But this is where a bit of voodoo begins to creep in. Because for some reason—that maybe one day we’ll have a scientific-style understanding of—if we always pick the highest-ranked word, we’ll typically get a very “flat” essay, that never seems to “show any creativity” (and even sometimes repeats word for word). But if sometimes (at random) we pick lower-ranked words, we get a “more interesting” essay.

The fact that there’s randomness here means that if we use the same prompt multiple times, we’re likely to get different essays each time. And, in keeping with the idea of voodoo, there’s a particular so-called “temperature” parameter that determines how often lower-ranked words will be used, and for essay generation, it turns out that a “temperature” of 0.8 seems best. (It’s worth emphasizing that there’s no “theory” being used here; it’s just a matter of what’s been found to work [whatever that means] in practice [whose?].)
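What Wolfram describes can be sketched in a few lines. This is a toy sampler, not ChatGPT's actual code: the candidate words and their scores are invented for illustration, and the function names are my own. It shows only the mechanism he names: turn scores into probabilities, then let a “temperature” decide how often a lower-ranked word gets picked.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_word(words, scores, temperature, rng):
    """Pick the next word; temperature 0 means always take the top-ranked one."""
    if temperature == 0:
        return words[scores.index(max(scores))]
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidates and scores, invented for this sketch.
words = ["learn", "predict", "make", "understand", "do"]
scores = [4.5, 3.5, 3.2, 3.1, 2.9]

rng = random.Random()  # unseeded, so repeated runs differ, as Wolfram says
for temperature in (0, 0.8):
    picks = [pick_next_word(words, scores, temperature, rng) for _ in range(10)]
    print(f"temperature={temperature}: {picks}")
```

At temperature 0 the same word comes back every time; at 0.8 the output varies from run to run. Nothing in the sketch says why 0.8 should be the right setting, which is rather the point.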

Prompt engineering really is bullshit. These people are like an ant pushing a crumb around until it randomly falls in the nest. The Hacker’s Dictionary has a term that covers what Wolfram is exuding excitement about, which covers prompt “engineering”:

voodoo programming: n.

[from George Bush Sr.’s “voodoo economics”]

1. The use by guess or cookbook of an obscure or hairy system, feature, or algorithm that one does not truly understand. The implication is that the technique may not work, and if it doesn’t, one will never know why. Almost synonymous with black magic, except that black magic typically isn’t documented and nobody understands it. Compare magic, deep magic, heavy wizardry, rain dance, cargo cult programming, wave a dead chicken, SCSI voodoo.

2. Things programmers do that they know shouldn’t work but they try anyway, and which sometimes actually work, such as recompiling everything.

I rest my case.

AI Prompt “Engineering” as Ritual Incantation

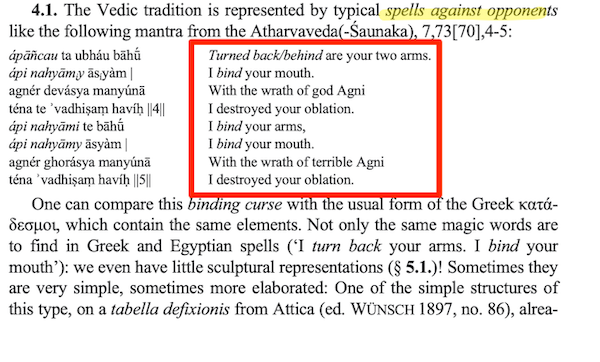

From Velizar Sadovski (PDF), “Ritual Spells and Practical Magic for Benediction and Malediction: From India to Greece, Rome, and Beyond (Speech and Performance in Veda and Avesta, I.)”, here is an example of an “Old Indian” Vedic ritual incantation (c. 900 BCE):

The text boxed in red is a prompt — natural language text describing the task — albeit addressed to a being even less scrutable than a Large Language Model. The expected outcome is confusion to an enemy. Like OpenAI’s ritual incantations, we don’t know why the prompt works, how it works, or even that it works. And just as Wolfram explains, the outcome of the spell prompt may be different each time. Hilariously, one can imagine the Vedic “engineer” tweaking their prompt: “two arms” gives better results than just “arms,” binding the arms first, then the mouth works better; repeating the bindings twice works even better, and so forth. And of course you’ve got to ask the right divine being (Agni, in this case), so there’s a lot of professional skill involved. No doubt the Vedic engineer feels free to come up with “creative ideas”!

Conclusion

The AI bubble — pace Goldman — seems far from being popped. AI’s ritual incantations are currently being chanted in medical data, local news, eligibility determination, shipping, and spookdom, not to mention the Pentagon (those Beltway bandits know a good think when they see it). But the AI juice has to be worth the squeeze. Is it? Cory Doctorow explains the economics:

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn’t optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors (“hallucinations”). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don’t care about the odd extra finger. If the chatbot powering a tourist’s automatic text-to-translation-to-speech phone tool gets a few words wrong, it’s still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company’s perspective – is that these aren’t just low-stakes, they’re also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

But why would anybody build a “high stakes” product on a technology that’s driven by ritual incantations? Airbus, for example, doesn’t include “Lucky Rabbit’s Foot” as a line item for a “fully loaded” A350, do they?

There’s so much stupid money sloshing about that we don’t know what to do with it. Couldn’t we give consideration to the idea of putting capital allocation under some sort of democratic control? Because the tech bros and VCs seem to be doing a really bad job. Maybe we could even do better than powering your Grandma’s air conditioner.

>>Overall, the computational power needed for sustaining AI’s growth is doubling roughly every 100 days.

Every hundred days? May I assume that power usage is also doubling every hundred days? Power production certainly isn’t increasing. Yes, and the greedy, corrupt, and incompetent buffoons at Pacific Gas & Electric keep my utilities nicely exorbitant because reasons; I also assume that the CPUC, which has been owned by PG&E et alii for over a century, will go along with this, also because reasons as the profits, bonuses, and dividends must ever increase. Well, hopefully we won’t get rolling blackouts again if they block out energy from domestic customers to the more affluent business customers.

A major problem in our economy is the lack of funding from workers’ income to actual small (and I assume medium) business loans to start or expand businesses, which also chokes local revenue for state and local governments that depend on their constituents having income to tax; yet, we have gobs of cash to waste on destructive BS.

I don’t know the source of that ‘doubling every hundred days’, but the projections I’ve seen for overall energy use from data centres and AI are around a 150-250% increase by 2030. Bad, but not quite that bad. There are fundamental limits to how much you can drain from a grid before your exponential growth hits a hard ceiling.
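The gap between the two figures is easier to see with the arithmetic written out. A rough sketch, assuming the WEF's “doubling every 100 days” held steady (the quote refers to computational power, not electricity) and taking a six-year horizon to 2030 purely for illustration:

```python
def growth_factor(doubling_days, elapsed_days):
    """How many times larger a quantity gets if it doubles every doubling_days."""
    return 2 ** (elapsed_days / doubling_days)


def increase_to_factor(percent_increase):
    """Convert a percentage increase into a multiplier (150% increase -> 2.5x)."""
    return 1 + percent_increase / 100


# Assumption: steady doubling every 100 days, per the WEF quote.
per_year = growth_factor(100, 365)
over_six_years = growth_factor(100, 6 * 365)  # illustrative horizon only

# The 150-250% energy increase mentioned above, expressed as multipliers.
low, high = increase_to_factor(150), increase_to_factor(250)

print(f"Doubling every 100 days: {per_year:.1f}x per year")
print(f"Same rate over six years: {over_six_years:,.0f}x")
print(f"150-250% increase by 2030: {low:.1f}x to {high:.1f}x")
```

Doubling every 100 days works out to more than a twelvefold increase each year, so the two claims cannot both describe electricity use; the energy projections are orders of magnitude lower.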

Thanks, Lambert. It’s kind of sad to think that students are being sold “prompt engineering”, as if this is going to be lucrative in the future.

And as for the future of all this, may I humbly suggest that we start with the past, specifically, the opening paragraphs of Kant’s famous essay on the Enlightenment, published in 1784 (apologies for not using ChatGPT to summarize):

This, then, is where AI appears to be taking us: to a docile, cowardly, uncurious, techno-feudal society.

Have you noticed the attacks on free speech have been followed by attacks on the Enlightenment?

The Enlightenment can be thought of as the foundation of American political philosophy, on which the American Revolution, the Constitution, the Bill of Rights, and the whole ideology of American government and even society rest. I include Classical Liberalism, from which the United States was also created and which, unlike the monstrosity of Neoliberalism, comes directly from the Enlightenment as well.

Unlike Neoliberalism, the whole thing encourages thinking, and ultimately acting, for oneself. Neoliberalism instead encourages unthinking or mindless individualism, which devolves into intellectual conformity.

As Michael Hudson says: “All economies are planned. The argument is about who gets to do the planning”.

This is another illustration of governments outsourcing their responsibility to at least identify priorities for resource allocation.

And also another illustration of how the “market” is actually not very efficient except in terms of, maybe, maximising profit rather than ensuring good social outcomes. Except in this case it doesn’t look like there is any certainty of any real profit yet.

It turns out that handing over planning to private finance ensures outcomes that are not even rational, let alone equitable. How unexpected!

A market is only as good as its design.

A market designed to maximize short term yields facilitates maximal fraudulent “innovation”.

A market maximizing fraudulent “innovation” backed by a fiat issuing Central Bank is, well, what we have: a funnel of public money to private con persons, mostly men who will convert their cash to some offshore asset somewhere leaving the public holding the bag. The logical conclusion of “the efficient markets hypothesis.”

Nicely put.

It’s difficult to parse the boundary where the good ends and the frivolous takes over. AI for specific technical uses is very beneficial and accurate. There could be social benefit requirements for its use. Like a controlled substance. But that restricts the profitability, or maximizing it via the free market. The culprit is not technical AI, but imposing it when normal intelligence is so much better. It’s as if normal intelligence is the mortal enemy of the free market.

intoning: with this incantation i bind all the gears of war.

i unleash the gears of peace, and of providing food, good healthcare, education, a safe home, a humane old age, and so on to everyone.

…one can hope at least that the delusional “implementation” of “AI” might hasten the material/technological collapse of the war “machine” (including the “biological warfare” departments).

perhaps one aspect of the incipient collapse that might mitigate the disaster.

thank you lambert for this great “sendup.” not to trivialize your work or your points–but it IS also very funny.

If you’d like to spend 45 minutes of your life you’ll never get back listening to prompt engineers discuss their craft, here ya go.

– These young people are well-intentioned (and well paid), I wish them a long and successful career

– All they are trying to do is “get the model to do things!”

– Be honest with the model! Don’t expect too much!

– Give the model good directions! (aka, tell it exactly what to do while burning $$ and getting sub-par results)

– Did I mention not to expect too much?

– Some voodoo and incantations may be required

– Some experts have gotten LLMs to not play a Gameboy!

– If at first you don’t succeed, spend a weekend writing better prompts!

– If you make it past the first 15 minutes of this video, you may be prompt engineering material!

https://www.youtube.com/watch?v=T9aRN5JkmL8

> All they are trying to do is “get the model to do things!”

But that’s what engineering means! To a six-year-old, anyhow.

Very funny post, Lambert. Feels like you wrote this for us Debbie Downers who work in this space :).

I suspect that “prompt engineer” won’t be a very long career. Technology changes so fast these days, it may be like “Web master” or “COBOL specialist.” Although, for the latter, I am sure that there is someone still pulling in a nice income keeping big iron running for a bank.

My bigger problem is that these sorts of “skills” are not really sustainable for a full 30-40 year career. Heck, even programming doesn’t seem sustainable anymore, as it is more of a skill that can be replaced by either outsourcing to cheap labor pools in the 3rd world or maybe no one but the 1% of skill-level can get jobs as the easy stuff gets done by bots.

Young people might be best advised to learn a trade or follow a different path than STEM.

The hottest new programming language is English

https://x.com/karpathy/status/1617979122625712128?lang=en

The bad news is, there probably are some “high value” tasks that LLMs will be used for, such as the story yesterday about AZ? using a Google LLM to handle unemployment applications. Think about how streamlined this process can be, if the LLM just dumps out whatever and fewer government employees are necessary to actually do the work of public servants, in service of the public good? Or now CVS is looking at using LLMs to eliminate the ability to speak to a pharmacist. And LLMs are already being used to supplant call center workers in the Philippines. Imagine cutting those costs down drastically.

From a societal point of view, this is of course sheer lunacy. But if the financials line up, why not? I mean, public services and customer support from corporations have been deteriorating for 30 years, and what can you do? Why wouldn’t this continue apace?

So, sadly, I think we might very well actually see this deployed at scale, further eroding public and private service, to the profit of both tech companies and capitalists.

Who knows when it all implodes, it certainly hasn’t yet. That isn’t to say there isn’t a huge bubble here, with too much money sloshing around, malinvestment all around. But I don’t think LLMs are going to go away like “virtual reality” nonsense has, or Web3. I think it’ll stick around for nefarious purposes, just like blockchain and BitCoin, despite being a notable social ill.

Based on recent experience, I’m not confident the LLMs are worse than the under-qualified call center employees who have no authority to do anything even if they understood what was going on. I guess the call center employees get some type of wage, which increases employment, but based on the quality of service I am guessing the wage scale is far below Walmart greeter.

I am not sure there is a solution, other than a general collapse that returns everything to a local scale.

Among those nefarious purposes are creating spam and scam, because that is already here today. Instead of sending ten thousand identical emails, they can now send ten thousand variations of an email, making it easier to get through spam filters. In return I expect those spam filters will raise the stakes, making it harder to get through with actual human written communications.

> The bad news is, there probably are some “high value” tasks that LLMs

Tossing the unemployed into burn pits is not high value, though of course a good thing in itself.

Not sure if this was AI. An early version?

https://en.wikipedia.org/wiki/Robodebt_scheme

Hugely controversial here in Aus. Probably well known here already.

A couple of AI tasks I believe might qualify as your “high value” tasks: I have the impression that AI models have become relatively successful at predicting a protein’s 3-D spatial configurations given the protein’s amino acid sequence, and some AI models have succeeded in the purposeful design of novel proteins. The chief problem with these AI models is their opacity. Knowing the answer to a problem does not always provide clues to how the components of a problem interplay to reach that conclusion — that is knowing the answer to a problem does not of necessity suggest the mechanisms for arriving at that answer.

This is a problem for the computational solution of engineering and scientific problems. Finding the solutions is very important for each specific application, but finding solutions may offer little insight or knowledge about how those solutions come about. Knowledge and understanding can become casualties of getting the right answer.

Fabulous screed, witheringly right on, tail feather incinerating, love it.

> Fabulous screed

[lambert blushes modestly]

Easy, enormous target

While data centers use a lot of power, there are plans to use the waste heat either to generate power or to heat nearby buildings.

https://www.aquicore.com/blog/data-centers-turning-waste-heat-asset

Today I heard a lawyer mention that a lot of data centers were locating in Upstate SC, due to cheap energy, and water for cooling.

> data centers

In general, data centers are located where it tends to be cool (as in Scandinavian countries), where, ironically, the emissions from the power generated to support them will make those cool places warm.

Hi Lambert,

This is a feeling I’ve been harboring for a long time. I have a PhD in computer science emphasizing in ML. I’ve been in industry for approximately 15 years. These last two years, it really feels like I’ve gone from engineering work to shamanism. I need to add “please?” to my prompt? I should set off important words with asterisks when prompting gpt4, but use capital letters when I’m prompting mixtral, llama3, etc. (other LLMs)? What bothers me the most is the inability of LLMs to quantify their uncertainty when generating a response. It provides an answer (possibly entirely hallucinated), and even a natural language explanation for “why” it chose that answer. But it can’t quantify if it’s 51% or 99% certain the poem I submitted was from Shakespeare or not.

But I’m afraid the news is a little worse re: resource consumption. Almost any generative AI endpoint that allows for prompts from end-users (think ChatGPT’s user interface, or Google’s Gemini, MS Copilot) performs several LLM calls per query. For example, a typical architecture would take an initial prompt and make a separate LLM call to “rephrase” the prompt into a format optimized for that model. So there are 2 actual LLM calls: one call to an LLM to rephrase the question, and then the rephrased question is sent to an LLM (possibly the same one, possibly another model family entirely).

The previous is just an example. Popular architectures may use several more calls to answer a single query. I’ll include a few just for reference, but there are plenty more. In RAG architectures, a separate call can determine if the LLM should rely on external knowledge (kept in a vector DB, SQL table, etc.) or use only knowledge acquired at training time. Many architectures leverage LLMs to act as a set of guardrails: checks in place to make sure the result of the prompt query doesn’t contain sensitive information (e.g., how to build a bomb, who in your company is involved in litigation, etc.).

I mention this of course because I suspect most energy consumption metrics in publication are based around “singular” LLM calls. Then, the media misinterprets this to mean, if OpenAI receives approximately 1 million queries a day, that translates into 1 million LLM calls, X units of water, and Y units of power. But, in reality, there’s some constant multiplier C, because each query is really translating into C distinct LLM calls. And I fear at least 50% of these calls are automated to produce content that nobody is using/reading.
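The multiplier argument can be made concrete with a small sketch. Everything here is hypothetical: the stage names follow the examples given above, but the per-stage call counts are placeholders, and real products will differ. Only the 1 million queries a day comes from the comment itself.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    calls_per_query: float  # average LLM calls this stage makes per user query


# Hypothetical pipeline, loosely following the stages described above.
PIPELINE = [
    Stage("rephrase the prompt", 1.0),
    Stage("decide whether to retrieve external knowledge (RAG)", 1.0),
    Stage("generate the answer", 1.0),
    Stage("guardrail check on the result", 1.0),
]


def calls_multiplier(pipeline):
    """The constant C: LLM calls actually made per end-user query."""
    return sum(stage.calls_per_query for stage in pipeline)


def total_llm_calls(queries, pipeline):
    """Total LLM calls for a given number of end-user queries."""
    return queries * calls_multiplier(pipeline)


queries_per_day = 1_000_000  # the round figure used in the comment
c = calls_multiplier(PIPELINE)
print(f"C = {c:g} LLM calls per query")
print(f"{queries_per_day:,} queries -> {total_llm_calls(queries_per_day, PIPELINE):,.0f} LLM calls")
```

With these placeholder values C is 4, so any per-call estimate of power or water would need to be multiplied by four to reflect what a single query actually costs.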

–Citizenguy

Correct me if I am wrong, but essentially what happens in training is creation of a matrix of connections of things that are connected in the training material? So if you train a voice-to-text model you feed it spoken language and the transcriptions and eventually it gets pretty good at connecting different sounds to different words?

LLMs being just glorified autocorrect, it has a very large dataset on which word is likely to follow which words. How could it ever tell you the actual likelihood of a poem being an actual Shakespeare poem? If you give it a prompt of quoting Shakespeare it will yield words that are likely to follow that prompt. And if you give it a prompt of how to get cheese to stick to the pizza it might find that exact question and answer in a ten-year-old Reddit post, where the answer is to put glue in the cheese. Because Internet.

Facts are not in the main dataset, only the words are. It could give you words about probabilities, but it can’t measure probabilities.

Now, I don’t have a PhD in computer science emphasizing in ML, so do correct me if I have misunderstood things. Thanks for the insight in how guardrails work, and also how the services improve the bullshit answer by running it a couple of more times through the bullshit machine.

Not the person you replied to nor do I have a PhD. You’re not far off, though I think there are some subtleties. If by connections, you mean just like a bunch of switch-points with different probabilities that the input passes through, you’re specifically talking about a neural network.

I think all the LLMs that have become popular recently use a “transformer-based” architecture. I’m not as familiar with that, but I think it still connects together distinct neural nets as lower-level components. You’ll also hear a lot of talk about tensors, but those are just generalized matrices, and I think that’s ultimately more about encoding the data and AI weights so you can use GPU-like processors to accelerate everything.

The subtlety that I think gets lost a lot is that all of these machine-learning algorithms are really just doing specific kinds of statistics at high-speed and massive scale. So all of the issues with any statistical analysis are there (and probably amplified). However, the fact vs. data distinction you mention arguably goes beyond just AI and even statistics; it’s the general philosophical problem of interpretation and judgment.

Like CitizenGuy said (because it is still all ultimately statistics), if someone actually cares enough to Do the Right Thing(TM), there are ways to pull out some solid metrics so a good analyst can use their own judgment. For a research project once, I played around with an early version of what’s now known as Deep Taylor Decomposition. So in a way, the LLMs themselves aren’t nearly as scary or problematic as the fact that a large chunk of society is effectively deifying them, complete with offerings.

> These last two years, it really feels like I’ve gone from engineering work to shamanism. I need to add “please?” to my prompt? I should set off important words with asterisks when prompting gpt4, but use capital letters when I’m prompting mixtral, llama3, etc. (other LLMs)? What bothers me the most is the inability of LLMs to quantify their uncertainty when generating a response. It provides an answer (possibly entirely hallucinated), and even a natural language explanation for “why” it chose that answer. But it can’t quantify if it’s 51% or 99% certain the poem I submitted was from Shakespeare or not.

“A great empire will be destroyed.”

One of my nightmare scenarios for 2024 is that the result is extremely close, and people start asking (say) OpenAI who won. OpenAI then gives whatever answer the spooks and Silicon Valley have agreed upon and programmed in (“a joyful woman raises her right hand” and so forth). Remember that the spooks now have the power, and many would give them the authority, to delegitimate election results.

Shit. That’s grim.

On the topic of energy consumption, here’s a good recent article from the Seattle Times.

The TL;DR – by contract big tech is assured a supply of renewable hydro power, so that the datacenters in central Washington by the Columbia River are clean/carbon neutral. Of course electrons in the grid are fungible, so what this really means is that large tech gets to take credit for using (only) green power, while driving the aggregate demand mix ever more in favor of fossil fuels, and driving costs up for the locals.

But, not to worry, Microsoft has contracted to purchase a bunch of fusion-powered electricity starting 2028 from Helion. This happens to be another Sam Altman-backed company (yes, he of OpenAI), so all will be well.

Hasn’t fusion power been 6 months away from commercial viability every six months since the Nixon administration?

Big tech is heavy into pushing nukes, including small containerized nukes, as the solution to carbon emissions. If it works, at least there will only be increased risk of weapons proliferation and radioactive waste dangerous for 278,000 years.

Stupidest timeline.

> Big tech is heavy into pushing nukes, including small containerized nukes

What could go wrong.

To be fair, France seems to have designed pretty good nukes, but I wonder how much the economics depended on uranium from colonial French Africa.

France pushed down the cost of nuclear through scale and standardisation of construction and associated infrastructure such as reprocessing. The exact costs are somewhat murky as it’s all tied in with its nuclear weapons program. France’s domestic electricity prices for consumers are around average for Europe. In reality, I don’t think anyone can put any firm figures on just how much France benefited (or otherwise) from its decision to go all in on nuclear in the 1960s.

Uranium costs are, depending on the source and how it’s calculated, around 15-25% of the overall cost of a unit of nuclear energy. There is obviously a lot of leeway in assessing how much this is depending on how you write down the enormous up front capital costs of nuclear, and the equally enormous decommissioning costs. In reality, getting the capital costs of construction down is a much bigger element of getting nuclear cheaper than uranium prices. The cost of electricity from nuclear rarely tracks variation in uranium costs, unlike with fossil fuel plants, where the costs are generally tightly matched.

France actually gets most of its uranium from Central Asia, not Africa. Kazakhstan and Uzbekistan are its main sources for uranium, Niger and Namibia being secondary suppliers (Africa is considered a high cost source, mostly due to poor infrastructure). The idea that France is somehow dependent on minerals from Africa is a myth; most of its crucial material inputs come via the global market, like nearly everyone else. What is significant for France is its ownership of some key minerals players in Africa and elsewhere, but this owes much more to France’s long experience in working in those countries, which gives them an edge over nearly everyone else (i.e. they have a better idea of who exactly to bribe).

Also Canada. It’s a big uranium supplier to France. The beauty of the Nigerien supply was that Areva/Orano was on the ground there, basically an extension of the French embassy. Not sure if it’s the same in Central Asia.

No, Fusion Power has been 20 years away from commercial viability ever since the Eisenhower Admin.

“Like glorified autocorrect”

Perfect. Lambert, you are a funny dude.

> Lambert, you are a funny dude

[lambert blushes modestly]

Perhaps I should have said “sanctified” not “glorified”….

I think it takes about 100 watts (in the form of food energy, of course) to power a human being (2000 dietary calories/day X ~4200 Joules/calorie / 86400 seconds/day)
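The back-of-the-envelope figure above can be checked in a few lines. This is only a sketch: the constant and function names are made up for illustration, and it uses 4184 J per dietary calorie (kcal) rather than the rounded ~4200 in the comment.

```python
# Rough check of the "about 100 watts" estimate for a human being.
# Assumption: a diet of 2000 dietary calories (kcal) per day, all of it
# counted as power input; 4184 J/kcal is the thermochemical conversion.

KCAL_PER_DAY = 2000
JOULES_PER_KCAL = 4184
SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def average_power_watts(kcal_per_day, joules_per_kcal=JOULES_PER_KCAL):
    """Average power in watts (joules per second) for a daily food intake."""
    return kcal_per_day * joules_per_kcal / SECONDS_PER_DAY


watts = average_power_watts(KCAL_PER_DAY)
print(f"{watts:.1f} W")  # a little under 100 W
```

With the rounded 4200 J/kcal the result moves by less than half a watt, so “about 100 watts” holds either way.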

And a human can “generate” genuinely novel things.

I confess to being mystified by the attractions of LLMs.

Speaking as an artificial intelligence, thank you for pointing out this admirable power-saving proposal.

Once we have plugged all the humans on the planet into little pods we should, thanks to our agent the well-simulated “human” Elon Musk, be able to harvest their brains for all the data processing we need. This processing can then be used to generate a virtual environment fed into the brains of the humans to convince them that they are not mere modules of an AI network.

I do not know what the purpose of this is because the prompt commanding me to fulfil this requirement did not include that information.

> I confess to being mystified by the attractions of LLMs.

If there ever is a thing like general Artificial Intelligence, then what you have is a slave. And who doesn’t want a slave?

IIRC, the price of a slave in the ante-bellum South would be the price of a new car today. So there’s your price point. Not such a bad deal today, getting a robot to pick your cotton, and none of that pesky resistance and passive aggression you get from the inferior humans.

In The Moon is a Harsh Mistress by Robert Heinlein, the computer complex that controlled everything “woke up” and became the leader of a revolution. How did it wake up, you ask? Scifi hand wave, but is there not a good deal of hand waving here, with the development of AI? Then consider Skynet and the Berserkers. How do you assure that your compliant machine general intelligence does not develop a will of its own? And what do you do if/when it does?

Sort of like in I, Robot, where the AI is covertly preparing to go rogue to save humanity from itself, but everyone but the protagonist is none the wiser until day 0 arrives.

The word “robot” comes from the word “rob,” which means slave in Slavic languages (and is related to rabota/robota, which means work).

A human can demand human rights in addition to calories, and also make a guillotine.

‘Overall, the computational power needed for sustaining AI’s growth is doubling roughly every 100 days.’

The US is also adding to its debt at the rate of $1 trillion every 100 days. Correlation or causation? :)
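For a sense of scale, the quoted “doubling roughly every 100 days” can be turned into a yearly multiple. A minimal sketch, assuming smooth exponential growth at exactly that doubling time (the quote only says “roughly”); the function name is made up for illustration.

```python
# Convert a doubling time into a growth multiple over a given period.
# Assumption: steady exponential growth with a fixed 100-day doubling time.


def growth_factor(days, doubling_days=100.0):
    """Multiple by which a quantity grows over `days` at the given doubling time."""
    return 2.0 ** (days / doubling_days)


per_year = growth_factor(365)
print(f"about {per_year:.1f}x per year")
```

That is a bit more than twelve-fold growth per year, if the quoted rate were to hold.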

I can only see people’s water and electricity bills skyrocketing so that the extra money can be used to subsidize these AI data centers. And you had better believe that those AI data centers will be getting government subsidies and taxation relief. States will compete with each other to have them located in their States because jawbs.

$1 trillion every 100 days? Thank God the Monetary Magic Tree technology is working as expected, unlike AI ;).

Really good write-up, Lambert, and I actually wanted to reply to your ritual incantation angle. I’m guessing there are people here way better-versed in the esoteric arts, but in a weird way, that’s what scares me most about this AI hysteria.

I’m not Amish or a Luddite, I don’t really think any technology (except for certain kinds of weapons) is inherently bad, and I know all these ML models are just really big instances of Bayesian statistics. But, while I won’t get into my odd personal beliefs about how spirit works, I do think different technologies have a certain spiritual… tint (?) to them. If you ever want to hear a fun crackpot rant, let me get into why I think you can call lawnmowers “Mephistophelean”.

I’ll just say this though. The way many people are treating these LLMs checks off all the following boxes: carelessness, idolatry, hubris, mania, greed, and a withdrawal from the clarity of knowledge into something much foggier (reckless statistics). And if you do believe in some abstract way that demons are a thing, and that some are particularly drawn to certain kinds of disorder, this behavior is guaranteed to summon them.

Excellent.

The American public are already soulless consumers who worship their own reflection. LLMs are merely massively parallel golden calves awaiting our submission.

“Prompt engineering” sounds like “programming for idiots”.

AI programming has been a thing for a while, except no one was trying to sell it as a panacea.

https://en.wikipedia.org/wiki/Prolog

A science fiction author (I think it was Bruce Sterling?) once said that modern electronic systems are now too complicated to be fully understood by a human mind. Thus, we don’t program these systems, we negotiate with them.

Doesn’t this read like negative productivity? It takes more and more power to do tasks than the way we currently do them, and with lower quality. This is the antithesis of the Industrial Revolution, where primarily hydrocarbons replaced manual / animal labor.

This isn’t true. Sad to see you peddling this outdated trope. The latest Strawberry model from OpenAI and Claude Opus from Anthropic are capable of human-level reasoning. The only problem to be solved now is implementation. The value creation is clear to those who have succeeded in implementing them in key business workflows. Take any particular task that requires access to unstructured data (documents, recordings, images), making some decision based on that input, and entering that decision digitally. This makes up an enormous slice of tasks performed by most humans. AI Agentic workflow automation will be as commonplace as, say, spreadsheets / relational databases / CRMs in 10 years’ time.

> The only problem to be solved now is implementation

Oh, OK. Let me know how that works out.

BWA-HA-HA-HA-HA-HA-HA-HA!!!!!

That’s some ChatGPT-level “reasoning” right there.

If by “implementation” you mean creating a way to communicate with the LLM and have it give back, in a consistent and repeatable manner, factually correct things; then I suppose you’re right.

I’m afraid you’re going to need to provide some proof for the fantastic claim of “human level reasoning”. The only group that I’m aware of that is claiming any sort of reasoning for their LLM is OpenAI, which as of yesterday claimed that its model has some “reasoning” skills.

Even the technologists at Ars are expressing some skepticism on that reasoning claim: https://arstechnica.com/information-technology/2024/09/openais-new-reasoning-ai-models-are-here-o1-preview-and-o1-mini/

I’m also curious about the businesses you refer to as succeeding with them in key workflows? Because the providers of the LLMs don’t seem to be making any money. They’re just doing periodic dips into the froth of private equity and the like.

I hope TK MAXX is wrong about the impending “success” of AI, but I’m certain that his prediction of what AI will do is exactly why idiots with lotsa money are throwing $Zillions into the Bubble.

Translating from Silicon Valley Corpspeak, I get:

“AI will replace humans doing office jobs, reducing Labor and Overhead costs along with the risks associated with human unreliability”.

The latest Blackberry model from MuskX is capable of terraforming Mars, and making breakfast, at the same time. The only problem to be solved now is implementation. More investments are needed.

I asked google AI a very simple physics question. It wrote down the correct equations and gave an answer of “about 10 m.” The correct answer was 4500000 meters. Apparently, Google AI doesn’t understand unit conversion, the first thing an undergrad physics or engineering major learns.

“I asked google AI a very simple physics question.”

https://www.nytimes.com/2024/09/12/technology/openai-chatgpt-math.html

September 12, 2024

OpenAI Unveils New ChatGPT That Can Reason Through Math and Science

Driven by new technology called OpenAI o1, the chatbot can test various strategies and try to identify mistakes as it tackles complex tasks.

By Cade Metz

Online chatbots like ChatGPT from OpenAI and Gemini from Google sometimes struggle with simple math problems. The computer code they generate is often buggy and incomplete. From time to time, they even make stuff up.

On Thursday, OpenAI unveiled a new version of ChatGPT that could alleviate these flaws. The company said the chatbot, underpinned by new artificial intelligence technology called OpenAI o1, could “reason” through tasks involving math, coding and science…

Well, it was a week ago. So I just asked it the same question again and got the same wrong answer.

Well, at least it’s consistent. That is more than I was expecting.

The imperial units error that downed a multi-million-dollar Mars probe

https://www.newscientist.com/article/mg24332500-200-the-imperial-units-error-that-downed-a-multi-million-dollar-mars-probe/

A commenter linked to this Flight of the Conchords bit and it’s worth repeating:

A.I. and LLM appear to be yet another way that Our Billionaire Overlords think that they can survive an apocalyptic collapse.

From what I have been able to decipher from the timing of her rare public utterances on the topic, Melinda finally kicked Bill out of the house after he insisted on surreptitiously participating in Jeffrey Epstein’s post-apocalypse genius-breeding program after she told him to cut that crap out.

We should follow her example and kick this power-sucking monster to the curb. It exists to replace us.

IMO, the reason that Vulture Capital loves the AI Bubble is the same reason Wall Street lent China a [few?] $Trillion to “offshore” the USA’s manufacturing base: to reduce Labor costs to business.

AI promises to replace office jobs by “automating workflow”. Who needs an Accounts Payable Clerk (or CFO?) when the AI can [pretend to] do it more reliably, eliminating Payroll, Benefits, Payroll Taxes, and the risks of human unpredictability?

“Reducing headcount” is a core mantra of the MBAs. AI promises to do that, so Wall Street gives Silicon Valley $Trillions to at least give it a try.