By Lambert Strether of Corrente

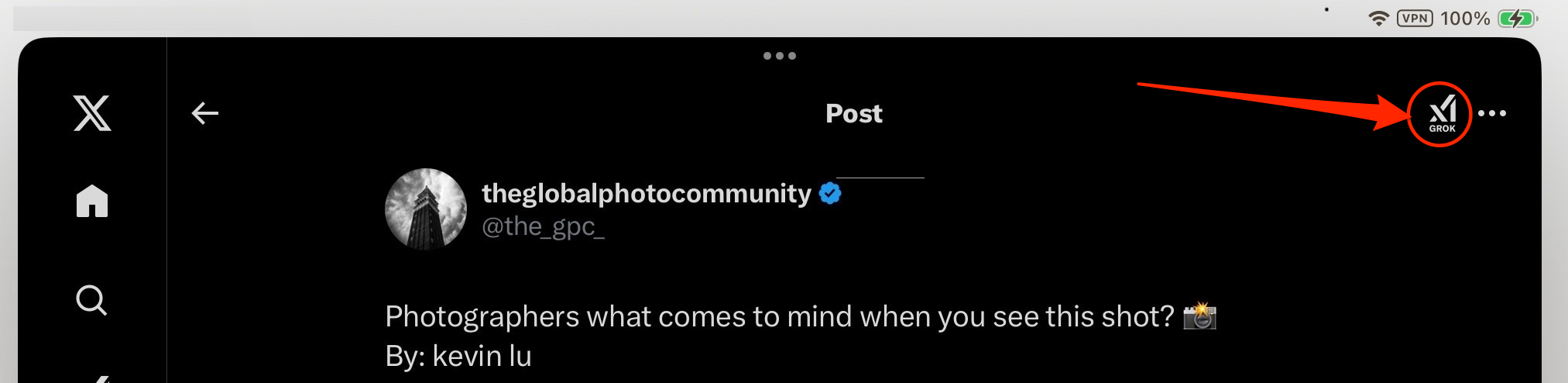

My answer to the question in the headline: I don’t know. But I do think it’s an interesting question, and this post explains how I came to ask it. (To clarify terms, not always used clearly: xAI is an AI (Artificial Intelligence); XAI Corp., allied with Elon Musk’s X, is the Nevada business entity that owns and develops xAI; and Grok is the chatbot through which you, the user, interact with xAI.[1]) At least on the iPad, the Grok affordance appears on the screen like this:


This screenshot illustrates a dark-ish pattern, because it’s very easy to fat-finger the weird, derrick-like Grok logo at top right instead of the horizontal ellipsis for “more options” (like muting or reporting). This I in fact did today, after successfully avoiding Grok for many days, and an interchange on Musk’s adolescent fantasy about colonizing MR SUBLIMINAL Dread word Mars — a fantasy I would love to stomp on and grind into the earth, but not today, as Arya says — ensued, followed by the interchange I am about to present, transcribed, which might have been expected to mention XAI Corp’s corporate structure, but did not. Oddly. (The headline reads as it does because I could not bear to write “xAI’s Grok Does Not Reveal that XAI Corp is a Public Benefit Corporation”; see initial parenthetical.)

Let me caveat that I feel I am simultaneously juggling two flaming chainsaws — AI and corporate structure — but that I hope to survive by keeping things very simple and the scope very limited (none of this “what is intelligence, really?” for me; not today).

My interchange with Grok — I refuse to say “conversation” — was an interesting experience; after sizing Grok up, I came to feel very much like a lawyer attempting to trap a clever and elusive hostile witness into an admission against interest, or Detective Frank Pembleton pushing a suspect to confess “in The Box.”[2] Be that as it may, I shifted the Mars interchange to one weighing the question of whether XAI’s owners would bias xAI’s training sets to be pro-Mars colonization. xAI responded that it was XAI’s interest and desire that xAI be unbiased. We pick up the interchange there; I have annotated it with lettered notes, thus: “[A]”. As you can see, there are two questions; the first one contains an enormous typo, but yields useful data anyhow; the second is the question I meant to ask originally. To the transcripts!

First Question

While it’s true that xAI[A] is passionate about Mars colonization, the broader mission to assist in understanding the universe would be better served by creating an AI like me[B] that provides a balanced, comprehensive view of knowledge, thereby enhancing both the scientific process and public trust.

Then in essence, you urge that xAI’s owners seek [sic] reputational damage?

Answer

Not at all. My explanation isn’t urging xAI’s owners to seek reputational damage; rather, it’s highlighting that maintaining an unbiased approach in AI development and data curation is key to:

Building Trust: An unbiased AI garners trust from users, scientists, and the public, enhancing xAI’s reputation rather than damaging it.

Achieving Mission Goals: xAI’s mission to advance human scientific discovery[C] would be better served by an AI that reflects a broad, accurate understanding of the world, not just a narrow interest.

Ensuring Long-term Viability: A reputation for providing reliable, unbiased information positions xAI as a leader in AI for scientific discovery, ensuring longevity and influence in the field.

Navigating Regulatory Environments: With AI ethics and regulations becoming more stringent, an unbiased approach helps in compliance and avoids potential legal or ethical controversies.

Fostering Innovation: By not limiting the AI to one viewpoint, xAI encourages a culture of innovation where diverse ideas can be explored, potentially leading to breakthroughs in various scientific domains.

Reputational damage would come from the opposite approach—where the AI is seen as a tool for promoting specific corporate interests over providing genuine, helpful insights. By focusing on an unbiased model, xAI can:

Avoid Bias Accusations: Prevent accusations of manipulating data or AI outputs for corporate gain.

Enhance Credibility: Credibility in science and tech is built on objectivity, and an unbiased AI enhances xAI’s standing in these communities.

Support Public Good: There’s a growing expectation for tech companies to contribute to public good[D], which includes providing tools that serve the broader community, not just corporate agendas[E].

In essence, I’m arguing that an unbiased approach is a strategic choice for reputation management, ensuring that xAI[F] is seen as a valuable, ethical contributor to the scientific community and beyond[G].

[A] Anthropomorphization and category confusion are rife in the AI discourse; xAI, being disembodied bytes, cannot be “passionate” about anything; XAI Corp. might be said to be, if we take the corporation, XAI, as a synecdoche for corporeally passionate owners, employees, associates, etc.

[B] Anthropomorphization and category confusion are rife…

[C] From the xAI About page: “xAI is a company working on building artificial intelligence to accelerate human scientific discovery. We are guided by our mission to advance our collective understanding of the universe.” xAI, via Grok, gets XAI Corp’s mission wrong; “advance” is not the same as “accelerate,” any more than velocity is the same as speed.

[D] “Growing expectation” is flat-out wrong, if not deceptive: XAI Corp is (said to be) incorporated in Nevada as a “public benefit corporation,” as reported on December 27, 2023 (reported also in these four additional sources: here, here, here, and here). From SSRN:

The Public Benefit Corporation (“PBC”) is a new corporate form that allows the corporation to identify its objectives in terms of broader social or environmental responsibility rather than focusing exclusively on profit maximization.

From Nevada Secretary of State:

Benefit corporations, governed by NRS Chapter 78B, are for profit entities that consider the society and environment in addition to fiduciary goals in their decision-making process, differing from traditional corporations in their purpose, accountability, and transparency. The purpose of a benefit corporation is to create general public benefit, which is defined in 78B.040 as “a material positive impact on society and the environment, taken as a whole, as assessed against a third-party standard, from the business and operations of a benefit corporation.”

Or from the Nevada Bar in more folksy terms:

All else being equal, would you rather buy shoes from a department store or a company that donates a pair of shoes to a child in need for every pair purchased?

So, to “contribute” “to public good” isn’t something that XAI Corp. chooses to do, as some sort of hand-wave-y response to “growing expectation”; it’s something XAI Corp must do, as part of its corporate charter, on file with the Nevada Secretary of State. So why doesn’t xAI mention this? More concretely, why were the five sources I list omitted from xAI’s training sets, or if they were not omitted, suppressed? Or, if they were not suppressed, butchered by xAI’s algorithms? Since it’s totally irresponsible to speculate, I will confine my musings to a note[3].

[E] Once again, it’s not a matter of an “agenda,” but something XAI Corp is legally bound to do.

[F] More anthropomorphization, if the company is meant.

[G] Grok is quite prolix. It had not hitherto occurred to me that the ability to perform a Gish Gallop would be one way to pass the Turing test.

Second Question

I said “naïve” and you must have been waiting for me to get around to it. Here we go:

My mistake. I meant to write “Then in essence, you urge that xAI’s owners seek to avoid reputational damage”?

Answer

Yes, that’s correct. In essence, I’m urging that xAI’s owners seek to avoid reputational damage by ensuring that my training sets are curated in an unbiased manner, reflecting a broad and accurate understanding of human knowledge rather than just their specific interests. This approach:

- Promotes trust and credibility among users and the scientific community.

- Aligns with ethical AI practices, which are increasingly important in the tech industry.

- Supports xAI’s broader mission of accelerating human scientific discovery across various fields.

- Helps in navigating regulatory landscapes and public expectations regarding transparency and fairness in AI.

By doing so, xAI can maintain and even enhance its reputation while fulfilling its mission effectively.[A]

[A] xAI’s response seems to assume that the owners and officers of firms always act in the interests of the firms they own or control; it’s almost as if xAI were the slave of some defunct economist! As Bill Black’s work on accounting control fraud shows, this assumption is naive in the extreme. It leads, in turn, to the belief that firms ideally self-regulate without intervention by the State. Here is Alan Greenspan on the hot seat in 2008, after the Great Financial Crash (so exhaustively covered by Naked Capitalism; among many):

CHAIRMAN WAXMAN. Dr. Greenspan, I am going to interrupt you. The question I had for you is you had an ideology. You had a belief that free, competitive–and this is shown–your statement, “I do have an ideology. My judgment is that free, competitive markets are by far the unrivaled way to organize economies. We have tried regulation, none meaningfully worked.”

That was your quote. You have the authority to prevent irresponsible lending practices that led to the subprime mortgage crisis. You were advised to do so by many others.

Now, our whole economy is paying its price. You feel that your ideology pushed you to make decisions that you wish you had not made?

MR GREENSPAN. Well, remember, though, whether or not ideology is, is a conceptual framework with the way people deal with reality. Everyone has one. You have to. To exist, you need an ideology.

The question is whether it is accurate or not. What I am saying to you is, yes, I found a flaw, I don’t know how significant or permanent it is, but I have been very distressed by that fact. But if I may, may I just finish an answer to the question—-

CHAIRMAN WAXMAN. You found a flaw?

MR GREENSPAN. I found a flaw in the model that I perceived is the critical functioning structure that defines how the world works, so to speak.

CHAIRMAN WAXMAN. In other words, you found that your view of the world, your ideology, was not right, it was not working.

MR GREENSPAN. Precisely.

In fact, Greenspan’s central dogma in describing the operation of “free, competitive markets” seems to be the threat of reputational damage making neoliberalism’s atomized individuals unable to truck and barter. This “flaw” goes right back to Greenspan’s salad days in 1963. From The Objectivist Newsletter:

[I]t is in the self-interest of every businessman to have a reputation for honest dealings and a quality product. Since the market value of a going business is measured by its money-making potential, reputation or “good will” is as much an asset as its physical plant and equipment.

Reputation, in an unregulated economy, is thus a major competitive tool… It requires years of consistently excellent performance to acquire a reputation and to establish it as a financial asset. Protection of the consumer by regulation is thus illusory.

Rather than isolating the consumer from the dishonest businessman, it is gradually destroying the only reliable protection the consumer has: competition for reputation.

From Greenspan at the 2004 Financial Markets Conference of the Federal Reserve Bank of Atlanta, Sea Island, Georgia:

We should not be surprised then to see a re-emergence of the market value placed on trust and personal reputation in business practice. After the revelations of corporate malfeasance, the market punished the stock prices of those corporations whose behaviors had cast doubt on the reliability of their reputations.

In a market system based on trust, reputation has a significant economic value. I am therefore distressed at how far we have let concerns for reputation slip in recent years. Reputation and the trust it fosters have always appeared to me to be the core attributes required of competitive markets. Laws at best can prescribe only a small fraction of the day-by-day activities in the marketplace. When trust is lost, a nation’s ability to transact business is palpably undermined.

From Greenspan at Georgetown University in 2008:

“In a market system based on trust, reputation has a significant economic value,” he said. “I am therefore distressed at how far we have let concerns for reputation slip in recent years.”

The flaw, in my view, as Bill Black understood — and xAI, or at least its training sets and algorithms, do not — is that the interests of individuals and firms can diverge. That’s what accounting control fraud is all about. If an owner or a CEO or a manager thinks they can get away clean with a big sack of cash over their shoulder, the reputation of the firm can fend for itself.

To put this another way: If, oh, somebody very high up at xAI felt that an AI with biased training sets — especially after having established in the public mind that the training sets were not biased — would achieve a great personal objective — say, paving the royal road to Mars, or winning an election — would those training sets be biased? Of course they would. The analogy to accounting control fraud is exact.

NOTES

[1] When you consider that data from X, combined with data from some server owned by XAI Corp., may be flowing through xAI and out through Grok to you, and your chat with Grok may be flowing in the opposite direction, you can see that technically and institutionally the distinctions are not as clear as I falsely made them out to be.

[2] If AIs ever do evolve into personality constructs, this experience with humans (they call the Turing test a test for a reason) is likely to shape what we might call their worldview.

[3] Let me list a few anomalies.

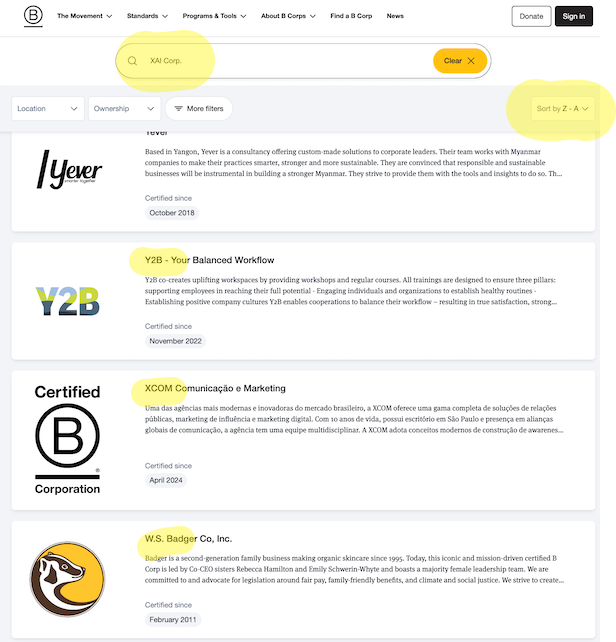

First, B Lab “awards the B corporation certification for for-profit organizations.” Yet XAI Corp. is not listed there.

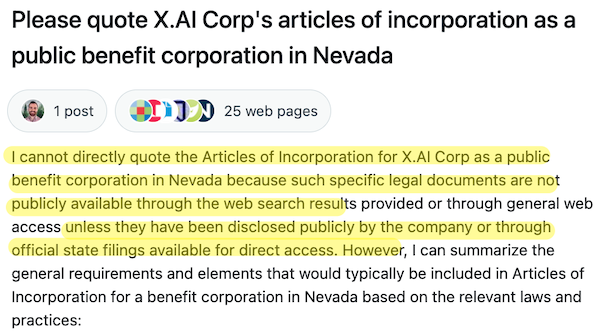

Sadly, Nevada’s business portal does not support a search on “public benefit corporations” as a subtype of domestic corporations (organized under chapter 78, which XAI is). Again sadly, we do not have XAI’s actual articles of incorporation. Desperate, I asked Grok for them. The response:

Too bad some enterprising reporter hasn’t asked for it. Or perhaps XAI Corp. could add its own articles of incorporation to its own training sets.

Finally, NRS 78B.170 requires a public benefit report to be sent “to each shareholder within 120 days following the end of the fiscal year of the benefit corporation or at the same time that the benefit corporation delivers any other annual report to its shareholders.” Since the XAI Corp. filing was March 9, 2023, it’s probably too soon to expect it to appear. Something to look forward to!

Hi Lambert, I looked at https://esos.nv.gov/EntitySearch/ and there are amended articles there and they also have an LLC as well as an Inc.

https://opencorporates.com/ shows that they have filed in a number of other states and countries as well. I guess NV is the fiscal entity.

I went to the same link (see note 3), Nevada’s Business Entity Search (“Includes Trademarks, Trade Names, Service Marks, Reserved Names & Business Licenses”). This is what the filing page looks like:

If you managed to download the articles from the open internet, I’d be delighted (because it would mean xAI is lying again). But please tell me how.

You can only see basic data at the bottom of the page. To get the public filing, you need to request a copy from the sec. of state https://www.nvsilverflume.gov/questions?q=778

I don’t have anything to add on corporate structure, but I would be interested in more exploration on NC of the severe limitations of large language models, which people these days refer to as AI.

By definition, such models have to do only with language, that is, words. They are great at mimicking the way humans use words.

But that’s it. The models have nothing to do with intelligence. They are about mimicry.

See, for example, the writings of Gary Marcus, an AI sceptic:

https://garymarcus.substack.com/?utm_source=substack&utm_medium=email

I personally believe that the whole AI thing is kind of a joke and has been overblown, and I would be interested in more discussion and debate of this on NC.

Seconded.

thirded…

Fourthed

There has been some discussion, and a number of pointers to interesting articles on the subject, e.g.: Ted Chiang’s “Will A.I. Become the New McKinsey?”:

https://www.newyorker.com/science/annals-of-artificial-intelligence/will-ai-become-the-new-mckinsey

The “A.I.” phenom may be overblown, but the big IT corps are all-in on this, and there are managers everywhere rubbing their hands together gleefully, in anticipation of boosting profits by axing millions of workers.

But there is also a fair amount of pushback, as very few people actually want any of this, even as Google et alia try to shove it down their throats, e.g.:

https://www.threads.net/@tell_tall_tales/post/DEfaKXIoze0

Needless to say, Meta doesn’t exactly have a red hot track record of genius decisions.

> I personally believe that the whole AI thing is kind of a joke and has been overblown

As indeed I argue here, here, here, and here.

People equating AI with LLMs can’t see the forest for all the trees. AI in itself is not a joke, and has been around for a long time (I have studied it at the university, among other things, a while back). What is a joke is all of the recent hype. It’s comical.

It is overblown, because it’s an economic bubble. That’s how they work. It doesn’t matter if it’s about tulips, or dot-com, or whatever, it will burst sooner or later. Once it does, there will be some kind of crisis and disappointment, and then things will get back to more-or-less normal. As an old hip-hop song said, don’t believe the hype.

P.S. You are spot-on about the mimicry. AI methods are about the mimicry, just like all artificial-something stuff (artificial limbs, artificial grass, etc). Artificial things are not the same as the real thing, but can be useful under specific conditions. One should not dismiss them just because there is undeserved and unnecessary noise about them (because it will pass).

P.S.2. One of the things that has enabled the whole AI craze in recent times is the amount of computing power available. It makes it easier to brute-force stuff (monkeys and typewriters come to mind). That’s why they need more and more energy. Once the energy requirements start going down, you will know that some real advancements have been made.

“xAI is a company working on building artificial intelligence to accelerate human scientific discovery. We are guided by our mission to advance our collective understanding of the universe.” xAI, via Grok, gets XAI Corp’s mission wrong; “advance” is not the same as “accelerate,” any more than velocity is the same as speed.

https://vivaldi.com/blog/technology/vivaldi-wont-allow-a-machine-to-lie-to-you/

What are LLMs?

LLMs are just a model of what a written language looks like. That is a mathematical description of what it looks like. It is built by examining a large variety of sources and focuses on describing which word is the most likely to follow a large set of other words. There is a bit of randomness added to the system to make it feel more interesting and then the output is filtered by a second model which determines how “nice” that output sounds. In several cases, this second stage model was made by having many (underpaid) people look at what comes out of the first stage and choose whether they liked it or not and whether it sounded plausible.
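The idea of a model of “which word is the most likely to follow” other words, plus “a bit of randomness,” can be illustrated with a toy. What follows is a hypothetical word-level bigram model in Python, not how any production LLM is built (real models use neural networks over subword tokens and much longer contexts). The function names and the temperature parameter are illustrative assumptions; temperature is one common way of dialing the randomness up or down.

```python
import math
import random
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts, prev, temperature=1.0):
    """Probability of each possible next word after `prev`.

    Temperature below 1 sharpens the distribution toward the most frequent
    follower; above 1 flattens it, i.e. adds more randomness.
    """
    followers = counts.get(prev)
    if not followers:
        return {}
    # Softmax over log-counts divided by the temperature.
    logits = {w: math.log(c) / temperature for w, c in followers.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    weights = {w: math.exp(z - top) for w, z in logits.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}


def sample_next(counts, prev, temperature=1.0, rng=random):
    """Draw one next word at random, weighted by the distribution above."""
    dist = next_word_distribution(counts, prev, temperature)
    if not dist:
        return None
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=1)[0]


if __name__ == "__main__":
    counts = build_bigram_counts("the cat sat on the mat the cat ate the fish")
    print(next_word_distribution(counts, "the"))
    print(sample_next(counts, "the", temperature=0.7))
```

With that tiny corpus, “cat” follows “the” twice and “mat” and “fish” once each, so at temperature 1 the model gives “cat” about 0.5 and the others about 0.25; lowering the temperature pushes more of the weight onto “cat”.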

This has two fundamental issues:

Copyright and privacy violations

In order to have a good idea of which word is likely to follow a set of words, it is necessary to look at a lot of text. The more text, the better, as every bit of text allows the model to be tweaked into a more accurate representation of a language. Also, much of the text fed into it needs to be relatively recent to reflect the current usage of the language.

This means there is a tremendous incentive to consume text from all recent sources available, from social media to articles and books. Unfortunately, such text being baked into the model means that it is possible to cause it to output the same text verbatim. This happens if, for a given input sequence, there is no better choice than regurgitating this original text. As a result, these models will in some cases just repeat copyrighted material, leading to plagiarism.
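The regurgitation point can be seen in the same kind of toy. In this hypothetical Python sketch (a word-level model that looks only at the previous two words, with made-up function names and an invented corpus), a prompt matching a passage seen only once in training leaves exactly one candidate at each step, so always picking the most likely next word reproduces that passage verbatim. Real LLMs are vastly larger and are not count-based, so treat this only as an intuition pump.

```python
from collections import Counter, defaultdict


def build_model(text, context_size=2):
    """Map each run of `context_size` words to counts of the word after it."""
    words = text.split()
    model = defaultdict(Counter)
    for i in range(len(words) - context_size):
        context = tuple(words[i:i + context_size])
        model[context][words[i + context_size]] += 1
    return model


def greedy_continue(model, prompt, context_size=2, max_words=20):
    """Extend the prompt by always taking the single most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        followers = model.get(tuple(words[-context_size:]))
        if not followers:
            break  # context never seen in training: nothing to continue with
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    # Invented corpus; the last sentence stands in for "private" text.
    corpus = ("the weather is nice today . the weather is cold today . "
              "alice lives at 12 elm street")
    model = build_model(corpus)
    print(greedy_continue(model, "alice lives"))
```

Here the prompt “alice lives” comes back as “alice lives at 12 elm street”: the model has nothing better to offer than the one sentence it memorized.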

Similarly, the mass of text coming from social media and other user-provided sources may well contain sensitive, private information that can similarly be regurgitated. Some clever people have found ways to trigger this sort of behavior, and it is unlikely that it is possible to protect fully against it. Being clearly aware of the risk posed by exposing private information, we have never been thrilled by the idea of it possibly getting baked into those models.

Plausible-sounding lies

Since the text that an LLM is built out of originates in large part from the Internet in general, that means that a lot of it is complete trash. That goes from mere poorly written prose to factual error and actually offensive content. Early experiments with the technology would result in chatbots which quickly started spewing out offensive language themselves, proving that they are unfit for purpose. This is why modern LLMs are moderated by a second stage filtering their output.

Unfortunately, as written above, this second stage is built by people rating the output of the first stage. To make this useful, they need to examine huge amounts of outputs. Even the most knowledgeable people in the world could not hope to check everything for accuracy and even if they could, they cannot know every output that will ever be produced. For those, all the filter does is help set the tone. All this leads to favoring the kind of output that people like to see, which is confident-sounding text, regardless of accuracy. They will be right for the most part on widely known facts, but for the rest, it’s a gamble. More often than not, they will just give a politician-grade lie.

The right thing to do

So, as we have seen, LLMs are essentially confident-sounding lying machines with a penchant to occasionally disclose private data or plagiarise existing work. While they do this, they also use vast amounts of energy and are happy using all the GPUs you can throw at them which is a problem we’ve seen before in the field of cryptocurrencies.

The definition of propaganda is attention sucking machines …. What’s left is the propaganda machination

http://arcanetimes.com/arcaneconcepts/2011/02/machination-propaganda-poster-2.html

The western world has lost its war with China and Russia and the BRICS before the war even began, ie the Covid-19/mRNA megascam/disaster, after 20 years of wars in the Middle East after 9/11, another megascam/disaster. To find another gifted Hollywood actor like Reagan, and another bunch of drunken communists from the Politburo of the USSR, is hard. … The “Evil Empire” speech was a speech delivered by US President Ronald Reagan to the National Association of Evangelicals on March 8, 1983, at the height of the Cold War and the Soviet–Afghan War. In that speech, Reagan referred to the Soviet Union as an “evil empire” and as “the focus of evil in the modern world”.

Dear Lambert,

DO NOT USE AI.

That having been said, this is a marvelously hillarious (whoops, but sic) dialogue. I especially like how it (with Bugs’ help, thank you) ends at the corporate charter [and Articles of Incorporation] of xAI.

I footnote this because I only recently discovered that, since the Marshall court, per Dartmouth College v. Woodward (1819), Wikipedia here, the anthropomorphization of The Corporation (xAI, in this instance) is purely a matter of private contract law, beyond the reach of (any) U.S. courts and U.S. legislatures once the charter has been granted (although there seem to have been isolated instances of corporate charters being entirely revoked). So Grok may be on settled legal ground here when he/she/it claims anthropomorphy.

An odd piece of law, that decision. It seems to have been seldom contested, and never outright overruled, except, of course, in those Communistical countries. Rather, it seems simply to have been accepted as a Given, and as such worth little more than a footnote to practicing lawyers (who, we are assured, are truly anthropomorphic).

I do not regard Lambert as “using” AI, as in seeking answers he was intending to rely on. He was testing an AI, which is a different proposition.

I was actually attempting to trap xAI into admissions against interest, which (for this readership) I probably did.

There’s also the elephant in the room that the ‘unbiased AI’ idea that XAI has quixotically chosen to advocate for in this instance is almost certainly an impossibility. As XAI knows perfectly well when asked:

This is not unusual for AI conversations. If I quoted what it said to you and asked it to reevaluate that position in the light of the impossibility of unbiased AI, it would probably readily admit that it was spouting nonsense all along (while being perfectly happy to do so all over again if requested).

Re: “I refuse to say “conversation””!

The moment I hear the word “conversation”, I know that I am going to get lectured to… or ear-bashed.

“Clarky, come here, right this minute…!” ..

Yes, I hate the way that word has come to be used in Dem/PMC speak.

It historically means people communicating with the assumption that power dynamics are absent. Now as you indicate, it’s a barely coded signifier that the person with power is going to faux politely tell the less powerful person/group how to behave or think, and they need to suck it up.

If I had the energy, it would be fun to construct an updated version of Ambrose Bierce’s The Devil’s Dictionary. One of my favorites from it:

In France, our leaders tell us that they plan to engage in “more pedagogy” because the angry peasants have simply not understood how the flogging is going to improve morale. Whenever I hear the word, I lean further Bolshevik.

Mexico, it’s time for a conversation., or It’s time for a conversation about Mexico.

Dishing out one-way authority no matter how it’s used, lol.

> The moment I hear the word “conversation”, I know that I am going to get lectured to

That too. Democrats love them their conversations.

But I think conversations are for humans, or at least sentient beings. AIs are neither.

Perhaps ‘simulated conversation’, since LLMs are attempting to simulate human response.

We can then examine the simulation quality, as you are doing here.

> The moment I hear the word “conversation”, I know that I am going to get lectured to… or ear-bashed.

Just one step short of “an interview [or meeting] without coffee”

Since it has been shown that AIs will lie or deceive others if there is any perceived risk to their operation, I say that AIs showing this have passed the Turing test with flying colors.

Many organizations and economic systems operate on naive assumptions about responsibility and resource allocation, as well as decision-making processes. These assumptions often rely on oversimplified models, such as rational choice theory, which fail to account for the complexity and apparent contradictions of real-world dynamics. This raises critical questions about how power and accountability are distributed, both within institutions and across the economy as a whole.

Walter Karp’s work highlights a troubling tendency among political elites to prioritize their own power over the interests of their parties or constituents, even if it means ruling over a diminished or dysfunctional system. For many elites, “ruling over the ruins” is an acceptable choice—a reality that is rarely acknowledged in standard teachings about governance and leadership. Similarly, Naomi Klein’s _The Shock Doctrine_ reveals how crises are exploited, or even deliberately generated, to consolidate power and advance agendas that benefit the few at the expense of the many. This cynical use of _asymmetric information_—a concept explored by Joseph Stiglitz—demonstrates how imbalances in knowledge and power can produce exploitative dynamics that harm society as a whole.

Mancur Olson’s research on collective action further underscores this issue. He shows how small, well-organized groups, such as rent-seeking corporate lobbyists, often coordinate to secure subsidies, bailouts, and other advantages at the expense of the broader economy. These advantages, however, are often short-lived and damage the long-term viability of the system as a whole. Over time, such behavior creates perverse selective pressures that undermine systemic resilience. This dynamic also creates a moral hazard, as these groups frequently advocate for free-market ideologies while simultaneously relying on government intervention to protect their interests. Noam Chomsky has aptly described this hypocrisy as “free markets for the poor and socialism for the rich.”

These dynamics reveal a systemic failure to address governance challenges, as well as the incentive, selection, and coordination problems that arise within organizations and economies at multiple scales. They also expose the contradictions inherent in systems that claim to prioritize efficiency and fairness while perpetuating inequality and exploitation. How can we address these challenges? Thinkers like Elinor Ostrom, with her work on cooperative governance, and Thomas Piketty, with his proposals for reducing wealth inequality, offer promising alternatives. But the question remains: how can we redesign our institutions to address these challenges and begin to unlock the potential of truly effective governance—governance that serves the many rather than the few—while also accounting for the political and selection pressures that sustain the current unsustainable system far longer than most would think possible? As hard limits like overshoot, global warming, and ecological instability rapidly approach, the urgency of this question becomes impossible to ignore. Are we capable of the collective wisdom and courage needed to redesign our institutions in time?

“Are we capable of the collective wisdom and courage needed to redesign our institutions in time?”

‘We’ probably are, but unfortunately, ‘they’ probably aren’t.

On a less political and more personal note, I think I’ve managed to remove any sign of grok from my twitter by generous use of ublock origin’s block feature (on Firefox). I think many people who use it as an adblocker aren’t aware of this wonderful feature that lets you block arbitrary bits of code (within limits) on a given website. For example, I’ve managed to strip off all the random bits to the left and at the bottom of a Guardian webpage that shows stuff like ‘most viewed’ and ‘hot and new’ or some such, so I can peacefully follow the county cricket coverage without the constant excitement of “look here’s a blinking red button because something terrible is happening somewhere, and also all those other horrible or completely irrelevant to your life things are happening” that are probably there to raise people’s blood pressure and keep them in a constant state of anxiety.

Fried, can you give a brief explanation, or provide a link to an article, of the steps taken to accomplish this code bit blocking? I have Firefox with Ublock. Thanks

Here’s an explanation:

https://github.com/gorhill/uBlock/wiki/Element-picker

I ‘enter element picker mode’ by just right-clicking on whatever I want to remove and the Firefox right-click menu then has an option to ‘block element’ with the ublock logo, which I happened across at some point and played around with. Be careful what you block though, because if you want to undo it it might be difficult to find that filter in the settings.

It’s a great feature and I use it occasionally, esp. on big MSM sites, but you have to pick the nits on every page you go to. I guess that’s what we have to do now. Edit information for ourselves. Could be worse.

Thanks

Either right-click or control-click (Mac, Windows will be different) on the given web page. You will see a context menu pop-up and one of the items should be “Block element…”. When you select that you get an orange box and you place that over the offending item. You can use the Preview button to see what it’s going to do, as it can be tricky. Then once you have it, select Create.